Introduction

- What are Knowledge Graphs?

A knowledge graph is a data model that represents information as a graph made up mainly of entities and the relationships between them.

- Role of Large Language Models (LLMs):

LLMs can generate structured data from natural language, which makes it possible to construct knowledge graphs with far less manual effort.

- Purpose of the Blog:

This blog explores the synergy between LLMs and knowledge graphs, along with its practical applications.

Section 1: Understanding Knowledge Graphs

- Definition and Core Components:

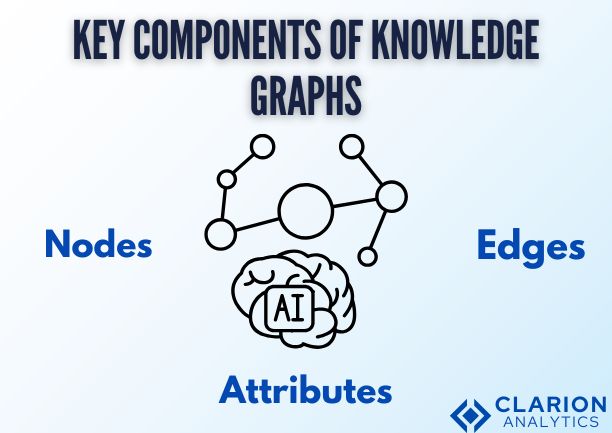

A knowledge graph is a network of nodes that represent data points or objects (such as people, places, and organizations), connected by their relationships to other nodes. Attributes attached to nodes and edges provide additional information about the entities and their connections.

- Core Components:

- Nodes: Represent individual entities or concepts.

- Edges: Connect nodes, indicating relationships between them.

- Attributes: Provide additional information about nodes and edges.
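To make the three components concrete, here is a minimal in-memory sketch. The class names (`Node`, `Edge`, `KnowledgeGraph`) and the example data are illustrative assumptions, not part of any particular graph library.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """An entity or concept, identified by a unique id."""
    id: str
    label: str                                       # entity type, e.g. "Person"
    attributes: dict = field(default_factory=dict)   # extra facts about the node


@dataclass
class Edge:
    """A directed, typed relationship between two nodes."""
    source: str                                      # id of the source node
    target: str                                      # id of the target node
    relation: str
    attributes: dict = field(default_factory=dict)   # extra facts about the edge


class KnowledgeGraph:
    def __init__(self):
        self.nodes = {}   # node id -> Node
        self.edges = []   # list of Edge

    def add_node(self, node):
        self.nodes[node.id] = node

    def add_edge(self, edge):
        # Refuse edges that point at nodes the graph does not know about.
        if edge.source not in self.nodes or edge.target not in self.nodes:
            raise KeyError("both endpoints must be added as nodes first")
        self.edges.append(edge)

    def neighbors(self, node_id):
        """Return (relation, target id) pairs for edges leaving node_id."""
        return [(e.relation, e.target) for e in self.edges if e.source == node_id]


kg = KnowledgeGraph()
kg.add_node(Node("ada", "Person", {"name": "Ada Lovelace"}))
kg.add_node(Node("london", "Place", {"name": "London"}))
kg.add_edge(Edge("ada", "london", "BORN_IN", {"year": 1815}))
print(kg.neighbors("ada"))
```

A dedicated graph database would replace the dictionary and list here, but the shape of the data (nodes, typed edges, attributes on both) stays the same.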

- Importance in Modern AI:

Knowledge graphs have become important in modern AI because they represent complex information in a structured, easily queryable form.

- Challenges in Construction:

Building accurate and comprehensive knowledge graphs raises several challenges:

- Scalability: As the amount of data grows, managing and processing large-scale knowledge graphs can become computationally expensive.

- Unstructured data integration: Extracting meaningful information from unstructured data sources like text and images can be difficult.

- Manual curation difficulties: Ensuring the accuracy and completeness of knowledge graphs often requires significant manual effort, which can be time-consuming and expensive.

Section 2: Large Language Models (LLMs) Overview

- What Are LLMs?

Large Language Models (LLMs) are a class of generative AI models trained on massive amounts of text data. That training allows them to generate human-like text, translate languages, write different kinds of creative content, and answer questions in an informative way. Prominent examples include the GPT models developed by OpenAI.

- Key Features Relevant to Knowledge Graphs:

- Contextual understanding of language: LLMs don’t just read text; they interpret it, using the surrounding context to grasp nuances of meaning.

- Semantic disambiguation: LLMs excel at choosing the right interpretation based on context. For example, the word “bank” could refer to a financial institution or the side of a river. By resolving such ambiguities, LLMs can recognise and extract the intended information more accurately.

- Entity recognition and relationship extraction: LLMs can identify and classify entities (e.g., people, organizations, locations) within text and extract the relationships between them. This capability is essential for populating and enriching knowledge graphs.

Are you intrigued by the possibilities of AI? Let’s chat! We’d love to answer your questions and show you how AI can transform your industry. Contact Us

Section 3:

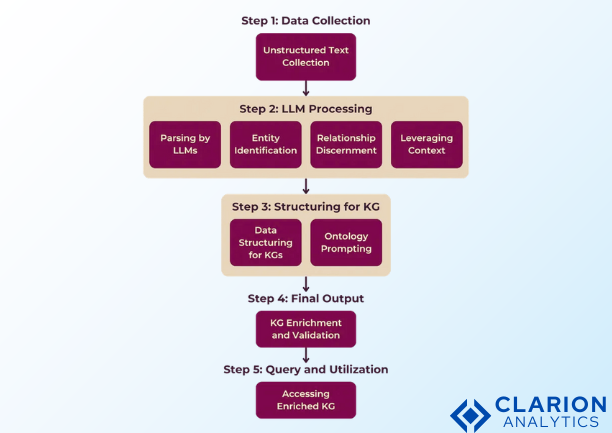

Data Processing with LLMs:

A knowledge graph can be thought of as a large network that links related pieces of information together. LLMs help build this network through the following abilities:

LLMs can detect key information in complex data that mixes text, audio, and visual media.

They can also automate labeling and information linking, which reduces the manual workload.

Key Techniques:

Named Entity Recognition (NER) lets LLMs recognize and classify key entities such as people, organizations, and geographic locations. In the sentence “Narendra Modi is the Prime Minister of Bharath”, an LLM detects two entities: “Narendra Modi” as a person and “Bharath” as a location.

Relationship Extraction lets LLMs work out how entities are connected. From the same sentence, an LLM would extract that “Narendra Modi” is related to “Bharath” through the relationship “is the Prime Minister of”.
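The two techniques above can be sketched as a single prompt-and-parse step. This is only an illustration: `fake_llm` is a hypothetical stand-in that returns a canned reply instead of calling a real model, and the JSON shape, the type names, and the relation name `PRIME_MINISTER_OF` are assumptions chosen for this example.

```python
import json

INSTRUCTIONS = (
    "Extract the entities and relationships from the text. "
    "Reply with JSON only, using the keys 'entities' (a list of objects with "
    "'name' and 'type') and 'triples' (a list of objects with 'subject', "
    "'relation' and 'object')."
)


def build_prompt(text):
    """Combine the fixed instructions with the text to analyse."""
    return f"{INSTRUCTIONS}\n\nText: {text}"


def fake_llm(prompt):
    """Hypothetical stand-in for a model call; always returns a canned reply."""
    reply = {
        "entities": [
            {"name": "Narendra Modi", "type": "Person"},
            {"name": "Bharath", "type": "Location"},
        ],
        "triples": [
            {
                "subject": "Narendra Modi",
                "relation": "PRIME_MINISTER_OF",
                "object": "Bharath",
            }
        ],
    }
    return json.dumps(reply)


def parse_extraction(raw_reply):
    """Turn the model's JSON reply into an entity map and a list of triples."""
    data = json.loads(raw_reply)
    entities = {e["name"]: e["type"] for e in data.get("entities", [])}
    triples = []
    for t in data.get("triples", []):
        subject, relation, obj = t["subject"], t["relation"], t["object"]
        # Keep only triples whose endpoints were also reported as entities.
        if subject in entities and obj in entities:
            triples.append((subject, relation, obj))
    return entities, triples


sentence = "Narendra Modi is the Prime Minister of Bharath"
entities, triples = parse_extraction(fake_llm(build_prompt(sentence)))
print(entities)
print(triples)
```

With a real model, the reply may not be valid JSON every time, so a production version would need error handling around `json.loads` and probably a retry.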

Ontology Generation uses LLMs to help develop structured schemas (ontologies) that define which concepts, entity types, and relationships are valid. Organizing a knowledge graph around an ontology keeps it consistent and clear.
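One way to picture how an ontology enforces consistency is a check that every extracted triple uses a known relation between the right entity types. The sketch below assumes a hypothetical, hand-written ontology (`ONTOLOGY`) and made-up type names; in practice the schema could be drafted with an LLM and then reviewed.

```python
# Hypothetical ontology: relation name -> (required subject type, required object type).
ONTOLOGY = {
    "PRIME_MINISTER_OF": ("Person", "Location"),
    "CAPITAL_OF": ("Location", "Location"),
}


def validate_triple(triple, entity_types, ontology=ONTOLOGY):
    """Return a list of problems; an empty list means the triple conforms."""
    subject, relation, obj = triple
    if relation not in ontology:
        return [f"unknown relation: {relation}"]
    expected_subject, expected_object = ontology[relation]
    problems = []
    if entity_types.get(subject) != expected_subject:
        problems.append(f"{subject} should be a {expected_subject}")
    if entity_types.get(obj) != expected_object:
        problems.append(f"{obj} should be a {expected_object}")
    return problems


entity_types = {"Narendra Modi": "Person", "Bharath": "Location"}
print(validate_triple(("Narendra Modi", "PRIME_MINISTER_OF", "Bharath"), entity_types))
print(validate_triple(("Bharath", "PRIME_MINISTER_OF", "Narendra Modi"), entity_types))
```

Triples that fail the check can be dropped or sent back for correction before they reach the graph.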

Section 4: Best Practices for Using LLMs in Knowledge Graph Construction

- Start with clean data: Just like a house needs a strong foundation, your knowledge graph needs clean, accurate data. The better the data you feed your LLM, the better it will understand and the more accurate your graph will be. Think of it like training a dog – if you give it bad treats, it won’t learn good tricks!

- Teach your LLM the ropes: You can “fine-tune” your LLM by training it on specific data related to your knowledge graph. This is like giving it extra lessons to become an expert in a particular field. For example, if you’re building a graph about music, you can fine-tune it on a massive dataset of song lyrics, artist biographies, and music reviews. This will make it much better at understanding and extracting information about the music world.

- Don’t let the machines run wild: Even the smartest LLMs can sometimes make mistakes. That’s why it’s crucial to have humans review the work. Think of it as a quality control check. People can spot errors, correct biases, and make sure the information in your graph is accurate and trustworthy.

- Scale up your operation: Building a really large knowledge graph can require a lot of computing power. Cloud computing services like Google Cloud or Amazon Web Services can provide the resources you need to handle massive amounts of data and run your LLMs efficiently. You might also need to use distributed systems, which spread the workload across multiple computers, making the process faster and more manageable.
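As a rough illustration of the human review practice from the list above, extracted facts can be split into an auto-accepted set and a review queue. This sketch assumes each candidate already carries a confidence score from the extraction step, which is an assumption: many pipelines would have to estimate such a score themselves. The `Candidate` class, the threshold, and the sample facts are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    subject: str
    relation: str
    obj: str
    confidence: float   # assumed score in [0, 1] from the extraction step


def triage(candidates, threshold=0.8):
    """Split candidates into auto-accepted facts and a human review queue."""
    accepted, needs_review = [], []
    for candidate in candidates:
        if candidate.confidence >= threshold:
            accepted.append(candidate)
        else:
            needs_review.append(candidate)
    return accepted, needs_review


candidates = [
    Candidate("Miles Davis", "RECORDED", "Kind of Blue", 0.97),
    # Deliberately wrong year, to show the kind of error a reviewer should catch.
    Candidate("Kind of Blue", "RELEASED_IN", "1969", 0.41),
]
accepted, needs_review = triage(candidates)
print([c.obj for c in accepted])
print([c.obj for c in needs_review])
```

The threshold is a trade-off: a higher value sends more facts to reviewers, a lower value lets more mistakes through.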

Section 5: Tools and Frameworks for LLM-Driven Knowledge Graphs

- Popular LLM Platforms:

OpenAI: These are the guys behind ChatGPT and GPT-4. They offer APIs that you can use to do all sorts of cool stuff, including pulling information to build knowledge graphs.

Hugging Face: This is more like a community and a platform. They have a huge library of pre-trained models that you can use, fine-tune, or even share. It’s a great resource for experimenting and finding the right model for your project.

Google’s offerings: Google has its own powerful LLMs, such as Gemini (the model family behind the assistant formerly called Bard) and the open Gemma models. Google is heavily invested in this area and is constantly releasing new tools and models.

- Knowledge Graph Tools:

- Neo4j: A popular graph database. It’s designed specifically for storing and querying graph data, so it’s really efficient at finding connections between things. Think of it as a specialized database built for relationships.

- GraphDB: Another powerful graph database, built around RDF. It’s known for its scalability and support for semantic web standards (which are ways of making data on the web more understandable to computers).

- TigerGraph: Known for being really fast and able to handle huge amounts of data. It’s often used for large-scale graph analytics.
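To show how an extracted fact might end up in a graph database such as Neo4j, the sketch below turns one triple into a Cypher `MERGE` statement plus a parameter dictionary. It only builds strings and does not connect to a database; the function name and the `name` property are assumptions for this example. Labels and relationship types cannot be passed as Cypher parameters, so they are validated before being placed in the query text.

```python
import re

# Labels and relationship types go into the query text, so restrict them
# to plain identifiers to avoid injecting arbitrary Cypher.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def triple_to_cypher(subject, subject_label, relation, obj, object_label):
    """Return (query, parameters) that upsert one triple."""
    for name in (subject_label, relation, object_label):
        if not _IDENTIFIER.fullmatch(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    query = (
        f"MERGE (s:{subject_label} {{name: $subject}}) "
        f"MERGE (o:{object_label} {{name: $object}}) "
        f"MERGE (s)-[:{relation}]->(o)"
    )
    # Entity names travel as parameters, never as part of the query text.
    return query, {"subject": subject, "object": obj}


query, params = triple_to_cypher(
    "Narendra Modi", "Person", "PRIME_MINISTER_OF", "Bharath", "Location"
)
print(query)
print(params)
```

Using `MERGE` rather than `CREATE` means re-running the same extraction does not create duplicate nodes or relationships.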

Section 6: Applications and Future Trends

This section outlines practical applications and emerging research directions for Knowledge Graphs (KGs) leveraging Large Language Models (LLMs).

Real-World Applications:

- E-commerce Personalization: KGs model product ontologies and user profiles (preferences, purchase history) to drive personalized recommendations and targeted marketing. LLMs enhance this by extracting product features and user sentiment from textual data (reviews, descriptions).

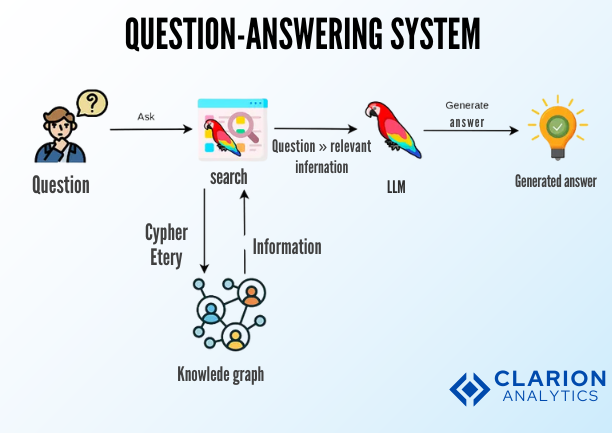

- Enhanced Search Engine Capabilities: KGs provide semantic context for search queries, enabling more accurate and comprehensive results. LLMs improve query understanding and information retrieval by processing natural language and identifying relevant entities and relationships within documents.

- Drug Discovery and Medical Research: KGs integrate diverse biomedical data (genes, diseases, drugs, pathways) to facilitate drug target identification, drug repurposing, and personalized medicine. LLMs aid in extracting knowledge from scientific literature and clinical trials, populating and enriching these KGs.

Emerging Trends:

LLMs can help keep Knowledge Graphs up to date automatically by continuously monitoring frequently changing data sources such as news reports, social media, and scientific articles. By extracting new entities, relationships, and facts from these dynamic sources, they make live KG updates and maintenance possible, which addresses the problem of KG staleness.
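A minimal sketch of such an update loop follows, assuming the extraction step has already produced triples for each incoming document. The record fields (`first_seen`, `last_seen`, `sources`) and the function names are hypothetical choices for this example.

```python
from datetime import datetime, timezone


def apply_updates(graph, new_triples, source, now=None):
    """Merge freshly extracted triples into graph, a dict keyed by triple.

    Returns the number of triples that were not in the graph before.
    """
    now = now or datetime.now(timezone.utc)
    added = 0
    for triple in new_triples:
        record = graph.get(triple)
        if record is None:
            graph[triple] = {"first_seen": now, "last_seen": now, "sources": {source}}
            added += 1
        else:
            # Known fact: refresh its timestamp and remember the extra source.
            record["last_seen"] = now
            record["sources"].add(source)
    return added


def stale_triples(graph, cutoff):
    """Return triples that no source has confirmed since the cutoff."""
    return [t for t, record in graph.items() if record["last_seen"] < cutoff]


graph = {}
day1 = datetime(2024, 1, 1, tzinfo=timezone.utc)
day2 = datetime(2024, 6, 1, tzinfo=timezone.utc)
apply_updates(graph, [("A", "PARTNERS_WITH", "B"), ("A", "BASED_IN", "C")], "news-1", now=day1)
apply_updates(graph, [("A", "BASED_IN", "C")], "news-2", now=day2)
print(stale_triples(graph, cutoff=datetime(2024, 3, 1, tzinfo=timezone.utc)))
```

Facts flagged as stale are not necessarily wrong; they are candidates for re-checking or for human review.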

Hybrid AI Models combine LLMs (sub-symbolic AI) with symbolic reasoning systems (rule-based and logic-based systems) to gain the advantages of both. The aim is to improve reasoning, handle inconsistent data, and provide clearer explanations. In practice, LLMs can be used to generate rules for knowledge bases, while logical inference systems act as constraints on LLM output.
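The symbolic half of such a hybrid can be as simple as a rule applied to LLM-extracted triples. The sketch below applies one hand-written transitivity rule until no new facts appear; the relation name `LOCATED_IN` and the choice of rule are assumptions for illustration, not a description of any specific hybrid system.

```python
def infer_transitive(triples, relation):
    """Apply 'A rel B and B rel C implies A rel C' until nothing new is derived."""
    facts = set(triples)
    changed = True
    while changed:
        changed = False
        # Snapshot the current links so the set can grow during the loop.
        links = [(s, o) for s, r, o in facts if r == relation]
        for a, b in links:
            for c, d in links:
                if b == c and (a, relation, d) not in facts:
                    facts.add((a, relation, d))
                    changed = True
    return facts


extracted = {
    ("Eiffel Tower", "LOCATED_IN", "Paris"),
    ("Paris", "LOCATED_IN", "France"),
}
inferred = infer_transitive(extracted, "LOCATED_IN")
print(sorted(inferred - extracted))
```

Because the derived fact comes from an explicit rule, it can be traced back to the two facts that produced it, which is where the clearer explanations come from.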

Research is also focusing on KG construction from multilingual data sources, with techniques for cross-lingual entity linking and relation extraction. Multilingual LLMs and cross-lingual embeddings make it possible to build KGs that gather knowledge from many languages.

Conclusion

The advent of LLMs has transformed knowledge graph (KG) construction from labor-intensive expert work into a largely automated data process. Their ability to extract structured data from unstructured text enables efficient large-scale processing, dynamic updates that counter staleness, and more detailed relationships, all of which save cost and time. Pairing human domain experts with these KG tools makes it possible to build extensive, accurate knowledge graphs that are useful across a wide range of applications.

Also Read:

To fully unlock the potential of a Knowledge Graph, it’s essential to begin with Large Language Models 101: NLP Fundamentals for Smarter AI Solutions, which lays the groundwork for understanding how modern LLMs process and structure language. Building on this foundation, LLM 101: Mastering Word Embeddings for Revolutionary NLP explains how semantic relationships are captured—critical for entity linking and graph construction. Mastering Transformers: Unlock the Magic of LLM Coding 101 further explores the architecture enabling contextual reasoning, while Llama Fine-Tuning: Your Step-by-Step Guide demonstrates how models can be adapted for domain-specific knowledge extraction. To automate and scale graph updates, Autonomous Agents Accelerated: Smarter AI with the Model Context Protocol provides insight into intelligent orchestration, and Mastering Mitigation of LLM Hallucination: Critical Risks and Proven Prevention Strategies ensures accuracy and reliability when generating structured data for a robust Knowledge Graph.
