Computer vision now underpins operations in many sectors. From self-driving cars and medical diagnostics to face recognition and industrial quality checks, computer vision models have changed how machines perceive and act on visual information. A model is considered robust when it performs correctly across a wide range of conditions and data scenarios.

Building robust computer vision models rests on two fundamental strategies: data augmentation and bias reduction. Both address core challenges in machine learning and artificial intelligence. Data augmentation increases the diversity and quantity of training data, which helps models generalize to new input. By expanding the training set through various transformations, it addresses the lack of diversity that training data often suffers from in practical applications. Bias reduction addresses the second challenge: minimizing the biases that AI systems display.

Computer vision models inform high-stakes decisions in fields such as healthcare and law enforcement, so they need both high accuracy and effective bias control. Biased AI systems can produce unfair decisions, which erode people’s trust in artificial intelligence. Addressing bias therefore brings two benefits: it upholds ethical standards, and it helps models perform consistently across all targeted groups and scenarios.

Developers of ethical, high-performance AI systems need to master and apply these techniques throughout development. Data augmentation improves model accuracy and performance across diverse scenarios. Bias reduction methods promote fairness by helping the system treat individuals consistently. Together, these approaches make strong, dependable computer vision systems possible.

This guide gives a full picture of how to build robust computer vision models, with a focus on data augmentation and bias reduction. We’ll cover the basics of computer vision, look at different data augmentation techniques, discuss the problem of imbalanced data, and explain how to find and mitigate bias in AI systems. We’ll use real-world examples and case studies to show how these ideas work in practice.

After reading this guide, you’ll have a good grasp of:

- Why computer vision models need to be robust

- Different data augmentation techniques and how they’re used

- How to deal with data imbalance in deep learning

- Ways to spot and mitigate bias in AI systems

- The best ways to put these ideas into practice

Introduction to Computer Vision Models

Computer vision models are at work in self-driving cars, medical diagnostics, face recognition, and factory quality checks, where they change how machines perceive and act on visual information. A robust model performs correctly across many circumstances and data scenarios, and building one requires two essential approaches: data augmentation and bias reduction.

These are more than technical tricks; they address central problems in machine learning and AI. Data augmentation is a practical way to expand the amount and diversity of training data so that models generalize to new input. It addresses the insufficient diversity in training material that often shows up in practical applications.

Bias reduction matters because computer vision models inform high-stakes decisions in healthcare and law enforcement, which demand both high accuracy and effective bias control. Unfair decisions caused by AI bias erode public trust in the technology. Dealing with bias also improves performance, since it makes systems more versatile across different populations and scenarios.

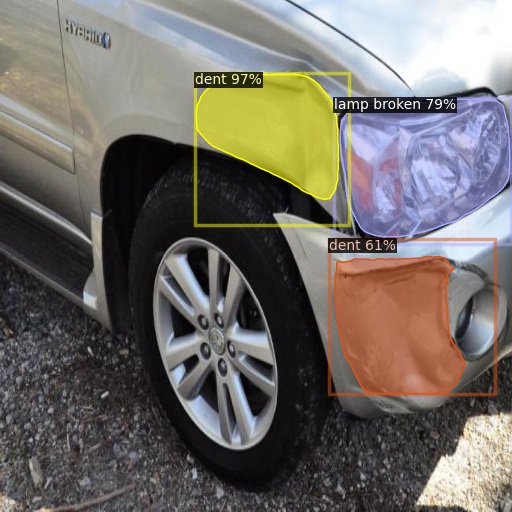

Car damage detection and segmentation are performed by a Computer Vision model.

Intrigued by the possibilities of AI? Let’s chat! We’d love to answer your questions and show you how AI can transform your industry. Contact Us

Understanding Data Augmentation

Data augmentation expands a training set by applying various transformations to existing data, producing a larger dataset with more variety. This helps models generalize to new input and addresses the insufficient diversity in training material that often surfaces in practical applications of machine learning.

Augmentation also supports bias reduction. Computer vision models inform high-stakes decisions in healthcare and law enforcement, where bias can produce unjustified outcomes and harm public trust in the technology. Tackling bias is both an ethical necessity and a requirement for consistent results across diverse situations and population groups. Used together, augmentation and bias reduction help developers build models that are both more accurate and fairer across varied scenarios.

Common Data Augmentation Techniques

Nine different augmentation techniques applied to a single image.

There are many ways to perform data augmentation, and many functions that implement them. They can be grouped as follows:

- Basic Techniques:

  - Flipping: Horizontal or vertical mirroring of images. This is particularly useful for objects that can appear in different orientations.

  - Rotating: Applying various degrees of rotation to images. This helps models recognize objects at different angles.

  - Cropping: Randomly cropping portions of the image. This helps the model focus on different parts of the object and become more robust to partial occlusions.

  - Color jittering: Adjusting brightness, contrast, saturation, or hue. This helps models become invariant to lighting conditions and color variations.

  - Scaling: Resizing images up or down. This teaches the model to recognize objects at different scales.

  - Translation: Shifting the image in various directions. This helps with positional invariance.

- Advanced Techniques:

  - Mixup: Combining two images by taking a weighted average of their pixels and labels. This helps the model learn smoother decision boundaries between classes.

  - CutMix: Replacing a rectangular region in one image with a patch from another image. This combines the benefits of mixup and random erasing.

  - Random erasing: Randomly selecting rectangular regions in the image and replacing them with random values. This simulates occlusions and helps the model become more robust.

  - Elastic distortions: Applying non-linear transformations to simulate the variability of handwritten text or biological structures.

  - GANs for augmentation: Using Generative Adversarial Networks to create entirely new, synthetic images that maintain the characteristics of the training set.

- Synthetic Data Generation:

  - 3D modeling: Creating 3D models of objects and rendering them from various angles, with different lighting conditions and backgrounds.

  - Simulations: Using game engines or specialized simulation software to generate realistic scenarios, particularly useful for autonomous driving or robotics applications.

  - Style transfer: Applying the style of one image to the content of another, useful for creating variations in texture and appearance.

  - Physics-based rendering: Using physics principles to generate realistic images, especially valuable for scientific and medical imaging applications.
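To make a few of these techniques concrete, here is a minimal NumPy sketch of flipping, random cropping, brightness jittering, mixup, CutMix, and random erasing. It assumes images are float arrays of shape height x width x channels with values in [0, 1] and that labels are one-hot vectors. The function names and the `box` tuple layout are illustrative choices, not the API of any particular library; in practice a library such as Albumentations would usually handle this.

```python
import numpy as np

def horizontal_flip(image):
    """Mirror an H x W x C image left to right."""
    return image[:, ::-1, :]

def random_crop(image, crop_h, crop_w, rng):
    """Cut a random crop_h x crop_w window out of the image."""
    h, w = image.shape[:2]
    top = rng.integers(0, h - crop_h + 1)
    left = rng.integers(0, w - crop_w + 1)
    return image[top:top + crop_h, left:left + crop_w, :]

def adjust_brightness(image, factor):
    """Scale pixel intensities and clip them back into [0, 1]."""
    return np.clip(image * factor, 0.0, 1.0)

def mixup(image_a, label_a, image_b, label_b, lam):
    """Blend two images and their one-hot labels with weight lam."""
    image = lam * image_a + (1.0 - lam) * image_b
    label = lam * label_a + (1.0 - lam) * label_b
    return image, label

def cutmix(image_a, label_a, image_b, label_b, box):
    """Paste a rectangle from image_b into image_a and mix labels by area."""
    top, left, box_h, box_w = box  # hypothetical (top, left, height, width) layout
    mixed = image_a.copy()
    mixed[top:top + box_h, left:left + box_w, :] = \
        image_b[top:top + box_h, left:left + box_w, :]
    h, w = image_a.shape[:2]
    lam = 1.0 - (box_h * box_w) / (h * w)  # share of pixels still from image_a
    return mixed, lam * label_a + (1.0 - lam) * label_b

def random_erase(image, box, rng):
    """Overwrite a rectangle with random values to simulate an occlusion."""
    top, left, box_h, box_w = box
    erased = image.copy()
    erased[top:top + box_h, left:left + box_w, :] = \
        rng.random((box_h, box_w, image.shape[2]))
    return erased

# Usage with two random stand-in "images" and two classes.
rng = np.random.default_rng(0)
img_a = rng.random((32, 32, 3))
img_b = rng.random((32, 32, 3))
cat = np.array([1.0, 0.0])
dog = np.array([0.0, 1.0])

mixed_img, mixed_label = cutmix(img_a, cat, img_b, dog, box=(8, 8, 16, 16))
print(mixed_label)  # [0.75 0.25]
```

The 16 x 16 patch covers a quarter of the 32 x 32 image, so the CutMix label keeps 75% of the first class. In a real pipeline the mixing weight and box would be sampled randomly per batch rather than fixed as they are here.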

Addressing Data Imbalance in Deep Learning

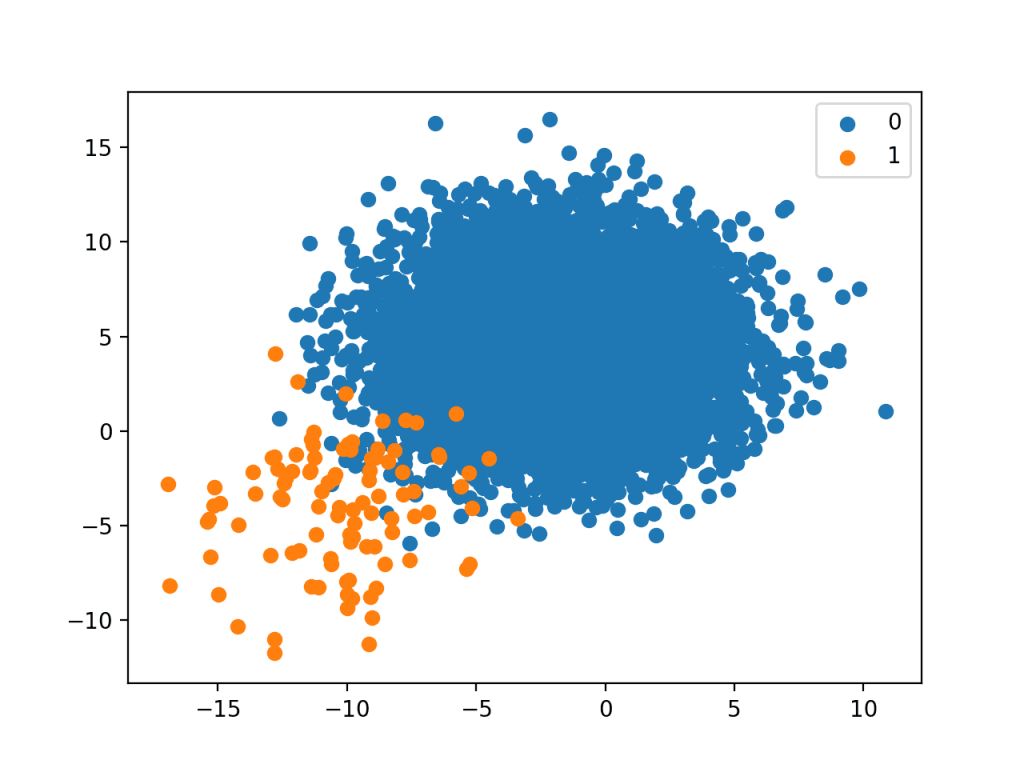

Class imbalance is a well-known problem in real-world machine learning tasks, including computer vision tasks such as medical image classification and anomaly detection. It arises when class examples are unevenly distributed, so that some classes have very few examples while others have many, which can lead to inaccurate computer vision models.

The effects of data imbalance on model performance can be substantial:

- Bias toward the majority class: The model can overfit the most frequent class, learning it well at the expense of performance on the remaining classes.

- Misleading evaluation metrics: Overall accuracy can give the wrong impression when classes are imbalanced, because a high accuracy can be obtained simply by always predicting the majority class.

- Poor generalization: The model may fail to learn the important features of underrepresented classes, so it performs poorly on those classes in new, unseen data.
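The point about misleading metrics is easy to see with numbers. The sketch below uses a made-up dataset of 95 "healthy" and 5 "disease" labels and a degenerate model that always predicts the majority class. The class names and counts are illustrative assumptions only.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

def recall(y_true, y_pred, positive):
    """Share of actual `positive` examples that the model found."""
    predictions_for_positive = [p for t, p in zip(y_true, y_pred) if t == positive]
    if not predictions_for_positive:
        return 0.0
    hits = sum(p == positive for p in predictions_for_positive)
    return hits / len(predictions_for_positive)

# Hypothetical imbalanced dataset: 95 majority examples, 5 minority examples.
y_true = ["healthy"] * 95 + ["disease"] * 5
# A model that ignores the input and always predicts the majority class.
y_pred = ["healthy"] * 100

print(accuracy(y_true, y_pred))           # 0.95
print(recall(y_true, y_pred, "disease"))  # 0.0
```

The model scores 95% accuracy while missing every minority example, which is why per-class metrics matter on imbalanced data.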

To address these issues, the following methods can be used:

- Oversampling:

  - Random Oversampling: Randomly replicate instances from the minority classes.

  - Synthetic Minority Over-sampling Technique (SMOTE): Create synthetic examples by interpolating between existing minority class samples.

  - Adaptive Synthetic (ADASYN): A variant of SMOTE that generates more synthetic examples for minority samples that are harder to learn.

- Undersampling:

  - Random Undersampling: Randomly remove examples from the majority class.

  - Tomek Links: Remove majority class examples that form Tomek links with minority class examples.

  - Cluster Centroids: Cluster the majority class and replace it with the cluster centroids, reducing it to the desired number of examples.

- Combination Methods:

  - SMOTEENN: Apply SMOTE for oversampling, then Edited Nearest Neighbors (ENN) for cleaning.

  - SMOTE Tomek: Apply SMOTE for oversampling and Tomek links for undersampling.

- Cost-sensitive Learning:

  - Adjust the loss function to assign higher costs to misclassifications of minority classes.

  - This can be implemented through class weighting in many deep learning frameworks.

- Ensemble Methods:

  - Balanced Random Forest: Train each tree of a random forest on a sample in which the majority class has been randomly undersampled.

  - Easy Ensemble: Train an ensemble of AdaBoost classifiers, each on a balanced subset obtained by randomly undersampling the majority class.

- Data Augmentation for Minority Classes:

  - Apply heavier augmentation to underrepresented classes so that they contribute more training examples.
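Two of the simpler options above, random oversampling and class weighting, can be sketched in plain Python. The weighting formula used here, n_samples / (n_classes * class_count), is one common "balanced" heuristic and is an assumption of this sketch, as are the file names and class labels. Libraries such as imbalanced-learn provide tested implementations of these and of SMOTE-style methods.

```python
import random
from collections import Counter

def random_oversample(samples, labels, seed=0):
    """Duplicate minority samples at random until every class matches the largest."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(group) for group in by_class.values())
    out_samples, out_labels = [], []
    for label, group in by_class.items():
        # Draw the missing examples with replacement from the same class.
        extra = [rng.choice(group) for _ in range(target - len(group))]
        out_samples.extend(group + extra)
        out_labels.extend([label] * target)
    return out_samples, out_labels

def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    return {label: n_samples / (len(counts) * count)
            for label, count in counts.items()}

# Hypothetical dataset: 90 "ok" images and 10 "defect" images.
labels = ["ok"] * 90 + ["defect"] * 10
samples = [f"img_{i}.png" for i in range(100)]

balanced_samples, balanced_labels = random_oversample(samples, labels)
print(Counter(balanced_labels))  # Counter({'ok': 90, 'defect': 90})

# "defect" gets weight 5.0 and "ok" gets roughly 0.56.
print(inverse_frequency_weights(labels))
```

Oversampling should be applied to the training split only. Duplicating examples before splitting would leak copies of the same image into the validation set.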

When addressing data imbalance, it is important to:

- Select evaluation metrics that give a clearer view of per-class performance, such as F1-score, precision-recall curves, or area under the ROC curve.

- Use stratified sampling in cross-validation to preserve the class proportions of the training set in the validation set.

- Match your choice of technique to your problem and dataset. Some approaches are useful only for specific datasets or imbalance ratios.

- Bear in mind that these methods can introduce bias or cause overfitting.
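Stratified sampling can be illustrated with a short sketch that splits each class at the same ratio. The function name, the 20% validation fraction, and the class labels are assumptions made for the example; scikit-learn's stratified splitters are the usual choice in practice.

```python
import random
from collections import Counter, defaultdict

def stratified_split(labels, val_fraction=0.2, seed=0):
    """Return (train_idx, val_idx) with each class split at the same ratio."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    train_idx, val_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        # Keep at least one validation example per class.
        n_val = max(1, round(len(indices) * val_fraction))
        val_idx.extend(indices[:n_val])
        train_idx.extend(indices[n_val:])
    return sorted(train_idx), sorted(val_idx)

# Hypothetical dataset with an 80/20 class ratio.
labels = ["benign"] * 80 + ["malignant"] * 20
train_idx, val_idx = stratified_split(labels)

print(Counter(labels[i] for i in val_idx))    # Counter({'benign': 16, 'malignant': 4})
print(Counter(labels[i] for i in train_idx))  # Counter({'benign': 64, 'malignant': 16})
```

Both splits keep the original 4:1 ratio, so validation metrics reflect the same class balance the model saw during training.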

Through effective data imbalance management, you can considerably improve the accuracy and precision of your computer vision models, especially where correct classification of minority classes is critical.

Data Imbalance visualization showing minority and majority classes of a dataset


Strategies for Mitigating Bias in AI

Bias in AI models, especially in computer vision systems, can lead to prejudiced or unfair outcomes. Mitigating bias is important for both the fairness and the performance of an AI model.

Let’s look at ways to find and mitigate bias in AI models, and then at methods for evaluating model fairness.

First, the types of bias that can arise:

- Training Data Bias:

  - Some groups are underrepresented or overrepresented in the data relative to what one would expect.

  - Historical biases reflected in the data.

  - Labeling biases introduced by human annotators.

- Algorithmic Bias:

  - Model architecture choices that systematically favor certain patterns.

  - Feature selection that inadvertently emphasizes features correlated with biased attributes.

- Deployment Bias:

  - A mismatch between the training and real-world environments.

  - Changing societal norms and demographics over time.

Now let’s look at techniques to mitigate bias:

- Diverse and Representative Data Collection:

  - Collect data that covers a wide range of scenarios and individuals.

  - Use techniques such as stratified sampling to maintain the required proportion of each group.

- Data Augmentation for Underrepresented Classes:

  - Apply targeted augmentation to increase the number of examples of underrepresented classes.

  - Use generative models to create synthetic data for underrepresented classes.

- Bias-aware Training:

  - Incorporate fairness constraints into the learning algorithm.

  - Use adversarial debiasing to remove sensitive information from learned representations.

- Regular Bias Audits:

  - Test the system thoroughly across demographic categories to uncover hidden biases.

  - Use intersectional analysis to identify subgroups within those categories that may be affected by bias.

- Interpretability:

  - Use tools such as SHAP or LIME to examine how the model reaches its decisions and to check that they are made fairly.

  - Attention mechanisms can be implemented efficiently and can improve interpretability.

- Diverse Development Teams:

  - Include individuals from various backgrounds in the development process.

  - Bring diverse perspectives into problem framing and solution design.
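A bias audit with intersectional analysis can start as a breakdown of accuracy by group. The sketch below is a minimal illustration on made-up records: the attribute names `skin_tone` and `lighting`, the `make_record` helper, and all the values are hypothetical. It shows how a gap that looks moderate per attribute can be concentrated in one subgroup.

```python
from collections import defaultdict

def accuracy_by_group(records, group_keys):
    """Accuracy for every observed combination of the given attribute keys."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for record in records:
        group = tuple(record[key] for key in group_keys)
        totals[group] += 1
        hits[group] += int(record["label"] == record["prediction"])
    return {group: hits[group] / totals[group] for group in totals}

def make_record(skin_tone, lighting, label, prediction):
    """Build one hypothetical audit record."""
    return {"skin_tone": skin_tone, "lighting": lighting,
            "label": label, "prediction": prediction}

# Hypothetical audit data: two examples per subgroup.
records = [
    make_record("light", "day", 1, 1), make_record("light", "day", 0, 0),
    make_record("light", "night", 1, 1), make_record("light", "night", 0, 0),
    make_record("dark", "day", 1, 1), make_record("dark", "day", 0, 0),
    make_record("dark", "night", 1, 0), make_record("dark", "night", 1, 0),
]

# Single-attribute view: {('light',): 1.0, ('dark',): 0.5}
print(accuracy_by_group(records, ["skin_tone"]))

# Intersectional view: the ('dark', 'night') subgroup has accuracy 0.0.
print(accuracy_by_group(records, ["skin_tone", "lighting"]))
```

With real data each subgroup needs enough examples for its accuracy to be meaningful, so small cells should be reported with their counts.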

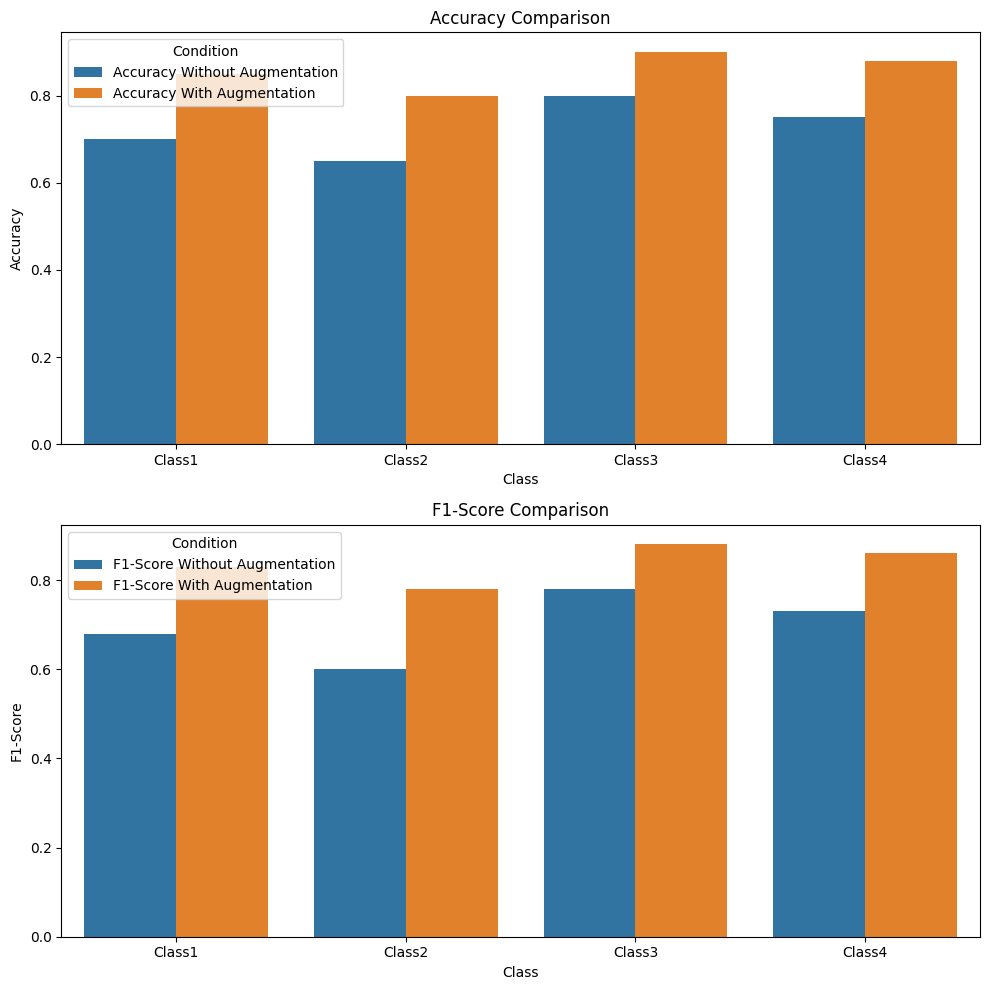

How bias is addressed with and without data augmentation.

Evaluating Model Fairness:

- Demographic parity: The model’s predictions are independent of protected attributes.

- Equal opportunity: The true positive rate is similar across all groups, rather than being better for some groups than for others.

- Equalized odds: Both the true positive rate and the false positive rate are equal across all groups.

- Individual fairness: Similarly situated individuals are treated similarly, which helps avoid unwanted discrimination by the model.

- Stratified sampling: Preserve demographic proportions in both the training and test sets of a model.

- Fairness-aware cross-validation: Run cross-validation with a focus on fairness across protected attributes.
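The group-level criteria above reduce to comparing a few rates between groups. The sketch below computes the selection rate, true positive rate, and false positive rate per group for binary predictions, then reports the largest gap for each. A selection-rate gap relates to demographic parity, a true-positive-rate gap to equal opportunity, and gaps in both true and false positive rates to equalized odds. The group names and labels are made up; toolkits such as Fairlearn and AI Fairness 360 offer maintained versions of these metrics.

```python
def rate(values):
    """Mean of a list of 0/1 values, or 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0

def group_rates(y_true, y_pred, groups):
    """Per group: selection rate, true positive rate, false positive rate."""
    report = {}
    for group in sorted(set(groups)):
        rows = [(t, p) for t, p, g in zip(y_true, y_pred, groups) if g == group]
        report[group] = {
            "selection_rate": rate([p for _, p in rows]),
            "tpr": rate([p for t, p in rows if t == 1]),
            "fpr": rate([p for t, p in rows if t == 0]),
        }
    return report

def gap(report, metric):
    """Largest difference in a metric between any two groups."""
    values = [stats[metric] for stats in report.values()]
    return max(values) - min(values)

# Hypothetical labels and predictions for two groups, "a" and "b".
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a"] * 4 + ["b"] * 4

report = group_rates(y_true, y_pred, groups)
print(gap(report, "selection_rate"))  # 0.5, demographic parity difference
print(gap(report, "tpr"))             # 0.5, equal opportunity difference
print(gap(report, "fpr"))             # 0.5, second component of equalized odds
```

A gap of zero on a metric means the groups are treated identically by that criterion. Which gap to prioritize depends on the application, and the criteria generally cannot all be satisfied at once.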

Implementing these strategies requires ongoing effort and vigilance. It’s important to:

- Keep developing and updating your bias mitigation methods as new data arrives and societal norms evolve.

- Involve diverse stakeholders, including ethicists and community representatives, in the development and deployment process.

- Be transparent about the limitations and potential biases of the model, and provide clear guidelines for its appropriate use.

- Stay up to date. AI fairness and bias mitigation are changing rapidly, so keep current with the newest regulations and standards of practice in the field.

Proactively addressing the AI behaviors that can cause bias lets developers build inclusive, trustworthy systems that benefit people and avoid creating conflicts and problems in society.

Real-World Applications And Case Studies

To illustrate the practical implementation and impact of robust computer vision models, let’s explore some real-world applications and case studies that highlight the use of data augmentation and bias mitigation techniques.

- Medical Imaging Diagnosis:

Application: A deep learning model for the early diagnosis of skin cancer from dermatological images.

Challenge: Limited datasets with an imbalanced distribution of skin conditions and few examples of rare cancer types.

Solution: Apply extensive data augmentation, including color jittering to simulate different lighting conditions and varying skin tones. Use SMOTE to synthesize examples of underrepresented cancer types. Use transfer learning from a model pre-trained on datasets of other skin diseases.

- Autonomous Vehicle Perception:

Application: A computer vision system that detects pedestrians and obstacles for self-driving cars.

Challenge: Ensuring consistent, reliable performance across varying weather conditions, times of day, and demographic groups.

Solution: Use synthetic data generation to create a variety of scenarios with different weather conditions. Apply targeted data augmentation to better represent pedestrian groups that are underrepresented. Run extensive bias audits and fairness evaluations across groups and conditions.

- Facial Recognition for Security Systems:

Application: A face recognition system for access control in a multinational corporation.

Challenge: Reducing the system’s bias across different racial groups while ensuring privacy compliance.

Solution: Build a dataset that reflects the diversity of the world population by including people from a wide range of ethnicities.

- Quality Control in Manufacturing:

Application: Automated defect detection in semiconductor manufacturing.

Challenge: The dataset is highly imbalanced, because defects occur rarely in high-quality manufacturing processes.

Solution: Combine oversampling techniques to generate more images of the defect class, which is the class to be identified. Handle the remaining imbalance either with an algorithm that penalizes misclassification of the minority class more heavily, or by training on a balanced sample generated artificially from the original data.
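For the manufacturing case, penalizing minority-class errors can be expressed as a weighted loss. The sketch below is a plain-Python weighted binary cross-entropy, where `pos_weight` scales the loss on defect examples. The 9:1 ratio and the predicted probabilities are assumed values for illustration, and deep learning frameworks expose similar class-weighting options.

```python
import math

def weighted_bce(y_true, y_prob, pos_weight, eps=1e-7):
    """Mean binary cross-entropy with positive-class errors scaled by pos_weight."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # keep log() away from 0
        total += -(pos_weight * t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)

# Hypothetical batch: nine good parts (0) and one defect (1).
y_true = [0] * 9 + [1]
# A model that gives every part a 10% defect probability.
y_prob = [0.1] * 10

plain = weighted_bce(y_true, y_prob, pos_weight=1.0)
weighted = weighted_bce(y_true, y_prob, pos_weight=9.0)
print(plain, weighted)
```

With `pos_weight=9.0` the single missed defect contributes as much to the loss as nine good parts would, so the optimizer can no longer reach a low loss by ignoring the rare class.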

These case studies demonstrate the real-world impact of robust computer vision models and the importance of addressing challenges like data imbalance and bias. They also highlight how different techniques can be combined and tailored to specific application needs.

Best Practices for Implementation

Combining data augmentation and bias mitigation in your computer vision pipeline requires careful planning and execution. Here are some best practices to consider:

- Start Early:

  - Incorporate data augmentation and bias considerations from the very beginning of your project rather than as an afterthought.

  - Develop a diverse and representative data collection process.

- Understand Your Data:

  - Perform a comprehensive exploratory data analysis to understand the features and shortcomings of your dataset.

  - Identify potential problems with diversity and imbalance before you train the model.

- Choose Appropriate Techniques:

  - Select data augmentation methods that are relevant to your specific problem and domain.

  - Consider combining multiple techniques to make your results more robust, while avoiding unrealistic data.

- Validate Augmented Data:

  - Regularly inspect augmented samples to make sure they remain realistic and relevant.

  - For sensitive applications, have a domain expert review the samples and take their input into account.

- Monitor and Iterate:

  - Save model checkpoints during training and evaluate them on the validation dataset to see where the system works and where it does not.

  - Be ready to revise your augmentation and bias mitigation approaches as you gather more data and insights.

- Use Appropriate Metrics:

  - Choose evaluation metrics that are sensitive to class imbalance and fairness considerations.

  - Look beyond aggregate metrics to understand performance across different groups and edge cases.

- Implement Interpretability:

  - Use model interpretability tools to see how your model makes decisions.

- Documentation and Transparency:

  - Maintain detailed documentation of your dataset, including its limitations and any augmentation techniques used.

- Stay Informed:

  - Keep up with the latest research and best practices in data augmentation and AI ethics.

Tools for Implementation:

- Data Augmentation Libraries: Albumentations, imgaug, Keras ImageDataGenerator

- Bias Detection and Mitigation: AI Fairness 360 (IBM), Fairlearn (Microsoft)

- Interpretability Tools: SHAP (SHapley Additive exPlanations), LIME (Local Interpretable Model-agnostic Explanations)

By following these best practices and utilizing appropriate tools, you can develop more robust, fair, and reliable computer vision models that perform well in real-world scenarios.

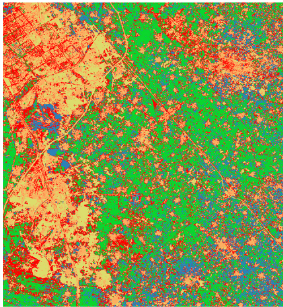

Land classification performed by using a Computer Vision model that helps in classifying areas of a satellite image into categories

Conclusion

Building robust AI systems is a difficult but essential task in today’s rapidly evolving world. This guide has shown how data augmentation and bias mitigation are crucial to improving model performance while also promoting fairness and building trust in AI systems.

Also Read:

The evolution of visual intelligence is best understood through The History of Computer Vision Models: From Pixels to Perception, which lays the foundation for modern vision systems. As models grow more sophisticated, Object Detection Beyond Classification: Exploring Advanced Deep Learning Techniques highlights how vision tasks extend far beyond simple labeling, while Mastering YOLO: Proven Steps to Train, Validate, Deploy, and Optimize offers practical insights into building high-performance, real-time detection systems. At a more granular level, Unlocking the Future of Semantic Segmentation: Breakthrough Trends and Techniques explores pixel-level understanding critical for accuracy and robustness. Complementing these advancements, The Power of Explainable AI (XAI) in Deep Learning: Demystifying Decision-Making Processes emphasizes transparency and trust in computer vision models.
