Introduction

The continuous development of artificial intelligence has produced Vision-Language Models (VLMs), which allow systems to process images and text together. These models are the foundation for applications such as image captioning and visual question answering, and they are changing how machines exchange information. This guide covers what Vision-Language Models are, how they are built and trained, where they are applied, the obstacles they face, and where the field is heading.

What are Vision-Language Models?

Vision-Language Models are a category of artificial intelligence systems that understand visual data and written text together. Their purpose is to unite computer vision (CV) and natural language processing (NLP) so that machines can derive meaning from images, videos, and text descriptions at the same time. Vision-Language Models support a broad range of tasks, including image captioning, visual question answering, and multimodal search.

Importance of Vision-Language Models in Real-World Applications

The real-world value of Vision-Language Models should not be underestimated. They drive applications across multiple fields, including healthcare (e.g., disease diagnosis from images and reports), retail (e.g., visual search for items), autonomous driving (e.g., reading road signs and directions), and entertainment (e.g., content generation based on user input). Because they understand both vision and language, VLMs enable more natural, human-like interactions with AI and improve user experience across sectors.

Challenges in Building Vision-Language Models

Building Vision-Language Models involves a number of challenges because it requires unifying two very different forms of data. Unlike classical models that handle text or images separately, Vision-Language Models must deal with the inherent differences between these modalities, such as their representations, semantics, and ambiguity. The main challenges are:

Multimodal Representation: Blending visual and textual representations appropriately is difficult because images and text encode different types of information.

Data Alignment: Aligning textual and visual data at the appropriate granularity is essential and typically requires large annotated datasets, which are costly and labor-intensive to create.

Scalability: Training Vision-Language Models at scale on large multimodal datasets requires substantial computational resources and efficient optimization methods.

Intrigued by the possibilities of AI? Let’s chat! We’d love to answer your questions and show you how AI can transform your industry. Contact Us

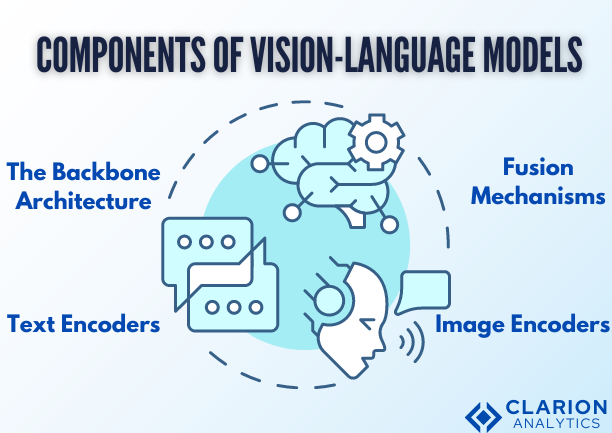

Understanding the Components of Vision-Language Models

VLMs consist of several critical components, each playing a key role in extracting and integrating visual and textual information.

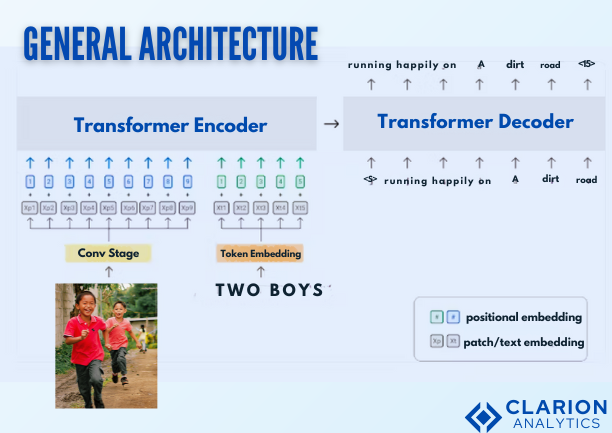

The Backbone Architecture

A Vision-Language Model relies on backbone networks to process each modality, typically CNNs for images and transformers for text. The backbone encodes the raw input into the meaningful representations that downstream components require.

CNNs extract image features by detecting objects, textures, and patterns in visual data. ResNet and EfficientNet are standard backbones that Vision-Language Models frequently employ for visual processing.
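To make the idea of a convolutional filter concrete, here is a minimal pure-Python sketch of one 2D convolution (no padding, stride 1) followed by a ReLU. The toy image and the hand-set vertical-edge filter are assumptions for illustration; real backbones such as ResNet learn many such filters from data and stack them with normalization, pooling, and many more layers.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` and return the valid-region feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Dot product between the kernel and the image patch under it.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


def relu(feature_map):
    """Zero out negative responses, as a CNN activation layer would."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 4x4 grayscale image: dark left half, bright right half (a vertical edge).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-set vertical-edge filter; a trained CNN would learn filters like this.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

print(relu(conv2d(image, vertical_edge)))
# [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The filter fires only in the column where intensity jumps from dark to bright, which is the kind of low-level pattern that early CNN layers pick up before deeper layers combine them into object-level features.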

Transformers such as BERT and GPT are widely used for text processing. These models capture the relationships between words in context and produce rich vector representations of text.

Image Encoders

The image encoder plays a central role in image processing: it transforms raw pixel data into feature vectors. In doing so, the model concentrates on the most informative parts of the image rather than analyzing every individual pixel. Visual embeddings are commonly created with pre-trained CNNs or vision transformers (ViTs).
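For the vision-transformer route, the first step is to cut the image into non-overlapping patches and project each flattened patch to a vector, giving a sequence of "visual tokens". The sketch below assumes a tiny grayscale image and a hypothetical fixed projection matrix; in an actual ViT the projection is learned and position embeddings are added afterwards.

```python
def extract_patches(image, patch_size):
    """Split an H x W grayscale image (list of lists) into flattened patches."""
    h, w = len(image), len(image[0])
    assert h % patch_size == 0 and w % patch_size == 0, "image must tile evenly"
    patches = []
    for top in range(0, h, patch_size):
        for left in range(0, w, patch_size):
            flat = [
                image[top + r][left + c]
                for r in range(patch_size)
                for c in range(patch_size)
            ]
            patches.append(flat)
    return patches


def linear(vector, weights):
    """Project `vector` with a weight matrix of shape (out_dim, in_dim)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]


def embed_image(image, patch_size, weights):
    """Return one embedding vector per patch (the image's token sequence)."""
    return [linear(p, weights) for p in extract_patches(image, patch_size)]


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
# Hypothetical 2 x 4 projection: output dim 2, input dim = 2 * 2 pixels per patch.
weights = [
    [0.25, 0.25, 0.25, 0.25],  # mean intensity of the patch
    [-1.0, 1.0, -1.0, 1.0],    # left-right contrast within the patch
]

tokens = embed_image(image, patch_size=2, weights=weights)
print(len(tokens), tokens[0])  # 4 [3.5, 2.0]
```

Sixteen pixels become four 2-dimensional tokens, so the model reasons over a handful of patch summaries instead of every pixel.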

Text Encoders

Text encoders are tasked with converting text into dense vector representations that hold the semantic meaning of words or phrases. Transformers, such as BERT and GPT, are popular because they can accommodate the sequential nature of text and capture contextual relationships.

Sentence Transformers: Variants of standard transformers that encode a whole sentence, rather than a single word, into one vector, which makes it easier to align text with visual data in multimodal tasks.
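One common way sentence-embedding models turn per-token vectors into a single sentence vector is mean pooling over the non-padding tokens, often followed by L2 normalization. In this sketch the token vectors are made-up numbers standing in for a transformer's contextual outputs; other pooling choices (such as using a special classification token) also exist.

```python
def mean_pool(token_vectors, attention_mask):
    """Average token vectors, ignoring padding positions (mask == 0)."""
    dim = len(token_vectors[0])
    total = [0.0] * dim
    count = 0
    for vec, keep in zip(token_vectors, attention_mask):
        if keep:
            for k in range(dim):
                total[k] += vec[k]
            count += 1
    if count == 0:
        raise ValueError("attention_mask selects no tokens")
    return [t / count for t in total]


def l2_normalize(vec):
    """Scale to unit length so dot products become cosine similarities."""
    norm = sum(v * v for v in vec) ** 0.5
    return [v / norm for v in vec]


# Hypothetical encoder outputs for a three-word sentence plus one padding token.
token_vectors = [[1.0, 0.0], [3.0, 4.0], [2.0, 2.0], [9.0, 9.0]]
attention_mask = [1, 1, 1, 0]  # last position is padding and is ignored

sentence = mean_pool(token_vectors, attention_mask)
print(sentence)  # [2.0, 2.0]
print(l2_normalize(sentence))
```

Normalizing the sentence vector puts it on the same footing as normalized image embeddings, so the two can be compared directly with a dot product.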

Fusion Mechanisms

The fusion process in Vision-Language Models handles the combination of image and text information in meaningful and understandable ways. A number of strategies can be employed, such as:

Concatenation: Straightforward method where image and text embeddings are concatenated and fed through fully connected layers.

Cross-Attention: More advanced methods involve cross-attention mechanisms, in which the model learns to attend to parts of interest in the image when it processes text, and vice versa.

Late Fusion: In other instances, the model handles visual and textual information independently and combines their outputs at a later point, making independent decisions for each modality.
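The three fusion strategies above can be contrasted in a few lines of plain Python. This is a simplified sketch: the 2-D embeddings are hypothetical, and the cross-attention omits the learned query/key/value projections and multiple heads that real models use, keeping only scaled dot-product attention with text tokens as queries and image regions as keys and values.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# 1) Concatenation: join the embeddings; a real model then applies FC layers.
def concat_fusion(image_vec, text_vec):
    return image_vec + text_vec  # Python list concatenation


# 2) Cross-attention: each text token (query) attends over image regions
#    (keys and values) with scaled dot-product attention.
def cross_attention(text_tokens, image_regions):
    d = len(text_tokens[0])
    outputs, all_weights = [], []
    for query in text_tokens:
        weights = softmax([dot(query, key) / math.sqrt(d) for key in image_regions])
        attended = [
            sum(w * region[i] for w, region in zip(weights, image_regions))
            for i in range(d)
        ]
        outputs.append(attended)
        all_weights.append(weights)
    return outputs, all_weights


# 3) Late fusion: each modality produces its own class scores independently,
#    and only the final decisions are combined (here a weighted average).
def late_fusion(image_scores, text_scores, alpha=0.5):
    return [alpha * a + (1 - alpha) * b for a, b in zip(image_scores, text_scores)]


# Hypothetical embeddings: region 0 looks like "dog", region 1 like "ball".
image_regions = [[10.0, 0.0], [0.0, 10.0]]
text_tokens = [[1.0, 0.0]]  # a "dog"-like query token

print(concat_fusion([0.1, 0.2], [0.3]))  # [0.1, 0.2, 0.3]
fused, attn = cross_attention(text_tokens, image_regions)
print(attn[0])  # nearly all attention weight lands on region 0
print(late_fusion([0.8, 0.2], [0.4, 0.6]))
```

Concatenation mixes modalities once, cross-attention lets every text token pick the image regions it needs, and late fusion never mixes the representations at all.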

Key Applications of Vision-Language Models

Vision-Language Models now shape our daily interactions with machine intelligence across a wide range of domains that involve both images and text.

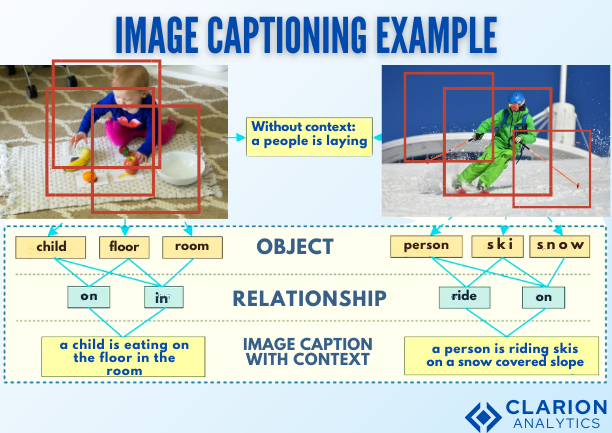

Image Captioning

Image captioning requires a system to produce a textual description of a visual input. The task is difficult because the model must not only identify objects but also understand how the elements of the scene relate to each other. Today’s best image captioning systems combine encoder-decoder networks with attention mechanisms that let the model focus on the key parts of the image.
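At inference time, an encoder-decoder captioner typically generates words one at a time, feeding each chosen word back in. The sketch below shows greedy decoding (always take the highest-scoring next word). `toy_decoder_step` is a hypothetical stand-in for a trained decoder with attention; it simply follows a template keyed on made-up image features so the decoding loop itself is easy to see.

```python
VOCAB = ["<end>", "a", "dog", "cat", "on", "grass", "sofa"]


def toy_decoder_step(image_features, prefix):
    """Hypothetical scorer standing in for a neural decoder: returns one
    score per vocabulary word given image features and the words so far."""
    animal = "dog" if image_features["dog"] > image_features["cat"] else "cat"
    place = "grass" if image_features["outdoor"] > 0.5 else "sofa"
    template = ["a", animal, "on", place, "<end>"]
    target = template[len(prefix)] if len(prefix) < len(template) else "<end>"
    return [1.0 if word == target else 0.0 for word in VOCAB]


def greedy_caption(image_features, step_fn, max_len=10):
    """Greedy decoding: repeatedly append the argmax word until <end>."""
    caption = []
    for _ in range(max_len):
        scores = step_fn(image_features, caption)
        best = VOCAB[max(range(len(VOCAB)), key=lambda i: scores[i])]
        if best == "<end>":
            break
        caption.append(best)
    return " ".join(caption)


# Made-up encoder output: confident "dog" detection in an outdoor scene.
features = {"dog": 0.9, "cat": 0.1, "outdoor": 0.8}
print(greedy_caption(features, step_fn=toy_decoder_step))  # a dog on grass
```

Greedy decoding is the simplest search strategy; beam search, which keeps several candidate captions at each step, is a common alternative when caption quality matters more than speed.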

Visual Question Answering

In visual question answering (VQA), a model must answer questions about an input image. To perform this task well, the model needs a thorough understanding of both the image and the text query. VQA models commonly use cross-attention to connect the relevant visual features with the words of the question.

Image and Video Search

Vision-Language Models improve the effectiveness of image and video search because they can match textual queries against visual content. Users can search for products either by uploading an image or by typing a description, and by combining visual and verbal cues, Vision-Language Models deliver better search results.
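Once a model maps images and text into a shared embedding space (as CLIP-style models do), search reduces to ranking stored image embeddings by cosine similarity to the query embedding. A minimal sketch, assuming hypothetical precomputed 3-D embeddings and file names:

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def search(query_vec, index, top_k=2):
    """Return the ids of the top_k items most similar to the query."""
    scored = [(cosine(query_vec, vec), item_id) for item_id, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [item_id for _, item_id in scored[:top_k]]


# Hypothetical image embeddings produced offline by an image encoder.
index = {
    "red_sneaker.jpg": [0.9, 0.1, 0.0],
    "blue_sneaker.jpg": [0.7, 0.0, 0.7],
    "leather_boot.jpg": [0.1, 0.9, 0.1],
}
# Hypothetical text-encoder output for a query such as "red running shoe".
text_query = [1.0, 0.0, 0.2]

print(search(text_query, index))  # ['red_sneaker.jpg', 'blue_sneaker.jpg']
```

Because both modalities share one space, the same `search` function handles query-by-image: pass an image embedding as `query_vec` instead of a text embedding. Large catalogs usually replace the linear scan with an approximate nearest-neighbor index.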

Generative Tasks (e.g., Image Generation from Text)

One of the most interesting capabilities of VLMs is generating visual output from text input. OpenAI’s DALL-E is one example: it produces realistic images from text prompts, revealing the creative possibilities of Vision-Language Models.

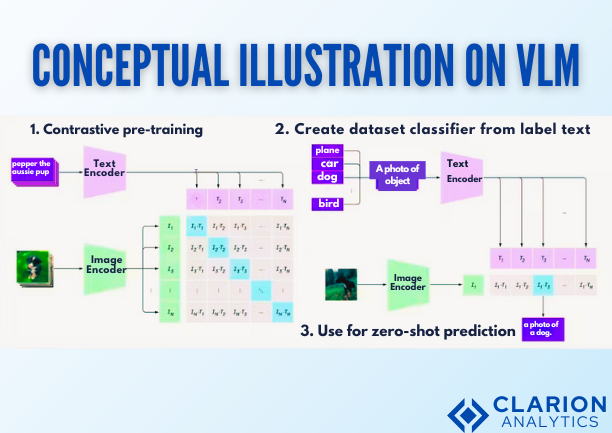

Training Vision-Language Models

Training Vision-Language Models is a multi-step process that ranges from preparing the data to optimization.

Data Preparation and Annotation

Quality data is essential for training effective Vision-Language Models. Datasets must be annotated carefully so that visual and textual content are precisely matched. For instance, the MS COCO dataset is commonly used for tasks such as image captioning because it pairs images with descriptive captions.
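COCO caption annotations are distributed as JSON with an `images` list (entries carry an `id` and `file_name`) and an `annotations` list (entries carry an `image_id` and a `caption`). The sketch below turns that layout into image-caption training pairs. It uses a tiny made-up sample rather than real dataset content, and the whitespace cleanup is an assumed preprocessing choice.

```python
import json
from collections import defaultdict


def load_caption_pairs(coco_json_text):
    """Return (file_name, caption) training pairs from COCO-style caption JSON."""
    data = json.loads(coco_json_text)
    file_by_id = {img["id"]: img["file_name"] for img in data["images"]}
    pairs = []
    for ann in data["annotations"]:
        caption = " ".join(ann["caption"].split())  # normalize whitespace
        if caption:  # skip empty annotations
            pairs.append((file_by_id[ann["image_id"]], caption))
    return pairs


def group_by_image(pairs):
    """COCO has several captions per image; group them (e.g. for evaluation)."""
    grouped = defaultdict(list)
    for file_name, caption in pairs:
        grouped[file_name].append(caption)
    return dict(grouped)


# Tiny made-up sample in the COCO captions layout (not real dataset content).
sample = json.dumps({
    "images": [
        {"id": 1, "file_name": "dog.jpg"},
        {"id": 2, "file_name": "cat.jpg"},
    ],
    "annotations": [
        {"id": 10, "image_id": 1, "caption": "A dog running  on the grass. "},
        {"id": 11, "image_id": 1, "caption": "A brown dog plays outside."},
        {"id": 12, "image_id": 2, "caption": "A cat asleep on a sofa."},
        {"id": 13, "image_id": 2, "caption": "   "},
    ],
})

pairs = load_caption_pairs(sample)
print(len(pairs))  # 3
print(group_by_image(pairs)["dog.jpg"])
```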

Loss Functions

VLMs tend to employ task-specific loss functions to improve their performance. Some of the most common loss functions are:

Cross-Entropy Loss: Applied to classification-style tasks such as VQA, where the aim is to predict the right answer label, and to image captioning, where the model predicts each word of the caption.

Contrastive Loss: Applied to learning similarities between images and text, e.g., in multimodal retrieval tasks.
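Both losses can be written compactly. The sketch below shows cross-entropy via a stable log-sum-exp, and one widely used form of contrastive loss, the symmetric InfoNCE objective popularized by CLIP, in which image i in a batch should match text i and no other. The temperature of 0.07 and the toy 2-D embeddings are assumptions for illustration.

```python
import math


def cross_entropy(logits, target):
    """-log softmax(logits)[target], computed stably via log-sum-exp."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_z - logits[target]


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE loss for a batch where image i matches text i.
    Assumes the embeddings are already L2-normalized."""
    n = len(image_embs)
    # sim[i][j] = temperature-scaled similarity between image i and text j
    sim = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in text_embs]
        for img in image_embs
    ]
    # Image-to-text: for image i, the correct "class" is text i (row i).
    loss_i2t = sum(cross_entropy(sim[i], i) for i in range(n)) / n
    # Text-to-image: for text j, the correct "class" is image j (column j).
    loss_t2i = sum(cross_entropy([sim[i][j] for i in range(n)], j) for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


# Cross-entropy for a VQA-style classifier over three candidate answers.
print(cross_entropy([2.0, 0.5, -1.0], target=0))

images = [[1.0, 0.0], [0.0, 1.0]]
matched_texts = [[1.0, 0.0], [0.0, 1.0]]
swapped_texts = [[0.0, 1.0], [1.0, 0.0]]
print(contrastive_loss(images, matched_texts))  # close to 0
print(contrastive_loss(images, swapped_texts))  # large
```

The contrastive loss is just cross-entropy applied across the batch: every other pair acts as a negative example, which is why larger batches tend to give the model more to learn from.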

Optimization Techniques

Training VLMs demands strong optimization methods, with the use of Adam and Stochastic Gradient Descent (SGD) being very common. Learning rate scheduling and gradient clipping are also utilized for stabilizing training.
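The pieces named above fit together in a short loop: clip the gradient by its global norm, look up the learning rate from a schedule, then apply an Adam update. This pure-Python sketch assumes a toy quadratic objective in place of a real training loss and an assumed linear-warmup-plus-cosine-decay schedule; deep-learning frameworks provide tuned versions of all three components.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale the whole gradient vector if its L2 norm exceeds max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm > max_norm:
        return [g * max_norm / norm for g in grads]
    return list(grads)


def lr_schedule(step, total_steps, base_lr, warmup_steps):
    """Linear warmup followed by cosine decay to zero."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))


class Adam:
    """Plain-Python Adam with bias-corrected moment estimates."""

    def __init__(self, n_params, beta1=0.9, beta2=0.999, eps=1e-8):
        self.beta1, self.beta2, self.eps = beta1, beta2, eps
        self.m = [0.0] * n_params  # running mean of gradients
        self.v = [0.0] * n_params  # running mean of squared gradients
        self.t = 0

    def step(self, params, grads, lr):
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            updated.append(p - lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated


# Toy objective standing in for a training loss: f(w) = (w0 - 3)^2 + (w1 + 1)^2.
def grad_f(w):
    return [2 * (w[0] - 3), 2 * (w[1] + 1)]


params = [0.0, 0.0]
optimizer = Adam(len(params))
total_steps = 500
for step in range(total_steps):
    grads = clip_by_global_norm(grad_f(params), max_norm=1.0)
    lr = lr_schedule(step, total_steps, base_lr=0.1, warmup_steps=20)
    params = optimizer.step(params, grads, lr)

print(params)  # should end near the minimum at [3, -1]
```

Clipping bounds the size of any single update early on, while warmup and decay keep the step size small at the start (when moment estimates are noisy) and at the end (when fine convergence matters).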

Transfer Learning and Fine-Tuning

Given the complexity and scale of VLMs, transfer learning is commonly used. Pre-trained models such as BERT for text and ResNet for images are fine-tuned on task-specific data, considerably reducing the time and resources spent on training.
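The cheapest variant of transfer learning keeps the pre-trained backbone frozen and trains only a small task head on its features; full fine-tuning would also update the backbone. In this sketch `frozen_encoder` is a hypothetical stand-in for a pre-trained model, chosen so that its feature makes an XOR-style task linearly separable, and the head is logistic regression trained with plain SGD.

```python
import math


def frozen_encoder(x):
    """Hypothetical stand-in for a pre-trained backbone. It is never updated:
    it maps a raw 2-D input to one feature (the sign agreement x0 * x1)."""
    return [x[0] * x[1]]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, epochs=200, lr=0.5):
    """Fit a logistic-regression head on frozen features with plain SGD."""
    n_features = len(frozen_encoder(data[0][0]))
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        for x, y in data:
            f = frozen_encoder(x)  # only the head's w and b are updated
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of binary cross-entropy w.r.t. the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(w, b, x):
    f = frozen_encoder(x)
    return int(sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) > 0.5)


# XOR-style task: label 1 when both inputs share a sign. A linear model on the
# raw inputs cannot solve it, but it is linearly separable in the frozen features.
data = [([1, 1], 1), ([-1, -1], 1), ([1, -1], 0), ([-1, 1], 0)]
w, b = train_head(data)
print([predict(w, b, x) for x, _ in data])  # [1, 1, 0, 0]
```

The head has only two parameters, which is the point: good pre-trained features let a tiny, cheaply trained model solve a task that would be hard from raw inputs.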

Evaluation Metrics for Vision-Language Models

Both quantitative and qualitative methods are used to evaluate VLMs.

Quantitative Metrics

Accuracy: Frequently applied in classification tasks such as VQA.

F1-Score: The harmonic mean of precision and recall, which balances the two and is particularly useful when classes are imbalanced.

BLEU: Applied in image captioning to evaluate generated captions against reference captions.

CIDEr: Consensus-based image captioning evaluation metric that considers the varying importance of words.

ROUGE: Estimates the n-gram overlap between generated and reference text, commonly applied to summarization tasks.
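To show what two of these metrics actually compute, here is a minimal sketch of sentence-level BLEU (clipped n-gram precisions combined by a geometric mean, times a brevity penalty, with uniform weights and no smoothing) and binary F1. It is illustrative only: reported scores normally come from established implementations, such as sacreBLEU or the COCO caption evaluation code, which also handle tokenization, corpus-level aggregation, and smoothing.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, references, max_n=4):
    """Unsmoothed sentence-level BLEU with uniform n-gram weights."""
    cand = candidate.split()
    refs = [r.split() for r in references]
    if not cand:
        return 0.0
    log_precision = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        max_ref_counts = Counter()
        for ref in refs:
            max_ref_counts |= ngram_counts(ref, n)  # elementwise max over refs
        # Clip each candidate n-gram count by its max count in any reference.
        clipped = sum(min(c, max_ref_counts[g]) for g, c in cand_counts.items())
        if clipped == 0:
            return 0.0  # any zero precision makes unsmoothed BLEU zero
        log_precision += math.log(clipped / sum(cand_counts.values())) / max_n
    c = len(cand)
    # Effective reference length: the reference length closest to the candidate's.
    r = min((abs(len(ref) - c), len(ref)) for ref in refs)[1]
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_precision)


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(sentence_bleu("a dog runs on the grass", ["a dog runs on the grass"]))  # 1.0
# Clipping caps "the" at its 2 occurrences in the reference; the short
# candidate is also penalized by the brevity penalty.
print(sentence_bleu("the the the the", ["the cat is on the mat"], max_n=1))
print(f1_score([1, 1, 0, 0], [1, 0, 1, 0]))  # 0.5
```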

Qualitative Evaluation

Quantitative measures cannot always reflect the quality of the output of a VLM, particularly in subjective or creative tasks. Human evaluation is important for evaluating the fluency, coherence, and pertinence of generated text and the realism and creativity of generated images.

Future Trends and Challenges

The field of VLMs is evolving rapidly, and several new trends are shaping its future:

VLMs are beginning to incorporate additional types of data, such as audio and sensor readings, to build more capable systems.

Researchers are developing VLM training techniques that reduce the amount of data these models require.

Demand for transparent and interpretable VLMs is growing as they are deployed in critical applications such as autonomous driving and healthcare.

Challenges and Limitations

Despite their technological capabilities, VLMs must overcome several obstacles to succeed.

VLM training data can contain discriminatory patterns that the model passes along, producing unfair results. Current research focuses on reducing bias in VLMs so that they operate fairly.

Interpretability is a problem because deep learning models often behave as black boxes. Researchers are developing methods that make these models more interpretable so that users can understand how their decisions are made.

Conclusion

Vision-Language Models are a big step forward in creating AI systems that can process and comprehend multimodal data. From retail to healthcare and everything in between, VLMs are transforming industries across a broad spectrum. Although obstacles such as interpretability and bias remain, the outlook for VLMs is highly promising, and trends such as explainable AI and multimodal learning lay the groundwork for even more sophisticated applications. As VLMs advance, they will open up new possibilities and redefine the way we use technology.

Also Read:

The evolution of multimodal intelligence is driven by strong foundations in language and vision, beginning with Large Language Models 101: NLP Fundamentals for Smarter AI Solutions, which explains how text understanding powers intelligent systems. This progress continues with Vision Transformers: Unlocking New Potential in OCR – A Game-Changer in Text Recognition and Generative Computer Vision: Powerful Foundation Models That Are Revolutionizing the Future, highlighting advances in visual perception. At the architectural level, Mastering Transformers: Unlock the Magic of LLM Coding 101 reveals the shared backbone enabling both vision and language models, while Object Detection Beyond Classification: Exploring Advanced Deep Learning Techniques expands visual understanding beyond simple recognition. Tying these domains together, The Power of Explainable AI (XAI) in Deep Learning: Demystifying Decision-Making Processes emphasizes transparency and trust in complex Vision-Language Models deployed across real-world applications.
