1. Introduction

Natural Language Processing (NLP) is a fundamental technology behind progress in artificial intelligence. It bridges human communication and machine understanding, powering chatbots, virtual assistants, and content creation tools. Large Language Models (LLMs) have advanced NLP through their ability to process and generate human language at scale. This guide explains the essential concepts of NLP and the architecture of Large Language Models.

2. What Is Natural Language Processing (NLP)?

NLP gives machines the ability to understand, interpret, analyze, and produce human language, which enables meaningful interaction between AI systems and people. It combines AI, computer science, and linguistics so that computers can understand human language and generate useful responses.

Key NLP Tasks:

- Text Classification: Groups text into predefined categories, as in spam detection and sentiment analysis.

- Sentiment Analysis: Identifies the emotional tone of a text from its wording, which is useful for brand monitoring and customer support.

- Machine Translation: Translates text from one language to another, as in tools such as Google Translate.

- Named Entity Recognition (NER): Extracts key entities such as names, dates, and organizations from text so that information can be organized properly.

Intrigued by the possibilities of AI? Let’s chat! We’d love to answer your questions and show you how AI can transform your industry. Contact Us

3. The Rise of Large Language Models (LLMs)

Large Language Models have driven much of the recent progress in Natural Language Processing. These models are trained on extensive datasets to learn language structure, context, and semantic nuance across many subjects.

A Brief History:

- GPT: OpenAI's GPT (Generative Pre-trained Transformer) series demonstrated that pre-training enables models to generate coherent, human-like text.

- BERT: Thanks to its bidirectional design, BERT interpreted language context more effectively than previous models.

- T5: Google introduced T5, which treats every NLP task as a single text-to-text problem and so streamlines how tasks are handled.

4. Core Principles Behind Large Language Models

Several basic principles allow Large Language Models to process language efficiently:

Tokenization

Tokenization divides text into small units called tokens. A token can be a whole word, a subword unit, or an individual character. Tokenization lets LLMs process text efficiently because it keeps inputs to a manageable size while preserving meaningful language patterns.
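
The three token granularities described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular LLM tokenizer works: the function names and `TOY_VOCAB` are hypothetical, and the greedy longest-match rule is an assumption standing in for a learned subword vocabulary.

```python
import re

def word_tokenize(text):
    """Split text into lowercase word and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def char_tokenize(text):
    """Split text into individual characters."""
    return list(text)

def subword_tokenize(word, vocab):
    """Greedy longest-match split of one word into subword units from `vocab`.

    Falls back to a single character when no longer piece matches.
    """
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate until it is in the vocabulary or is one character.
        while end > start + 1 and word[start:end] not in vocab:
            end -= 1
        pieces.append(word[start:end])
        start = end
    return pieces

# Hypothetical toy vocabulary, for illustration only.
TOY_VOCAB = {"token", "ization", "un", "break", "able"}

print(word_tokenize("Tokenization helps LLMs!"))
print(subword_tokenize("tokenization", TOY_VOCAB))
print(subword_tokenize("unbreakable", TOY_VOCAB))
```

Subword splitting is the middle ground: a word that is not in the vocabulary can still be represented by pieces that are, so the vocabulary stays small without falling all the way back to characters.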

Embeddings

Embeddings represent words and phrases as vectors in a high-dimensional space. Words with similar meanings sit close together in that space, which helps the model capture semantic relationships. Early embedding methods such as Word2Vec and GloVe led to the context-sensitive embeddings that now power models like BERT and GPT.
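
To make "close together" concrete, the sketch below compares vectors with cosine similarity, a common closeness measure for embeddings. The three-dimensional vectors in `TOY_EMBEDDINGS` are hand-picked for illustration and are an assumption; real embeddings are learned from data and have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical hand-picked vectors, not output from a trained model.
TOY_EMBEDDINGS = {
    "happy": [0.9, 0.8, 0.1],
    "joyful": [0.85, 0.75, 0.2],
    "car": [0.1, 0.2, 0.95],
}

def most_similar(word, embeddings):
    """Return the other word whose vector is closest to `word`."""
    query = embeddings[word]
    others = [w for w in embeddings if w != word]
    return max(others, key=lambda w: cosine_similarity(query, embeddings[w]))

print(most_similar("happy", TOY_EMBEDDINGS))  # joyful
```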

Attention Mechanism

The attention mechanism lets a model focus on particular parts of the input sequence when making predictions. By weighting specific tokens, the model can detect relationships between words even when they are not adjacent in the text.

5. Large Language Model Architecture: Transformers Explained

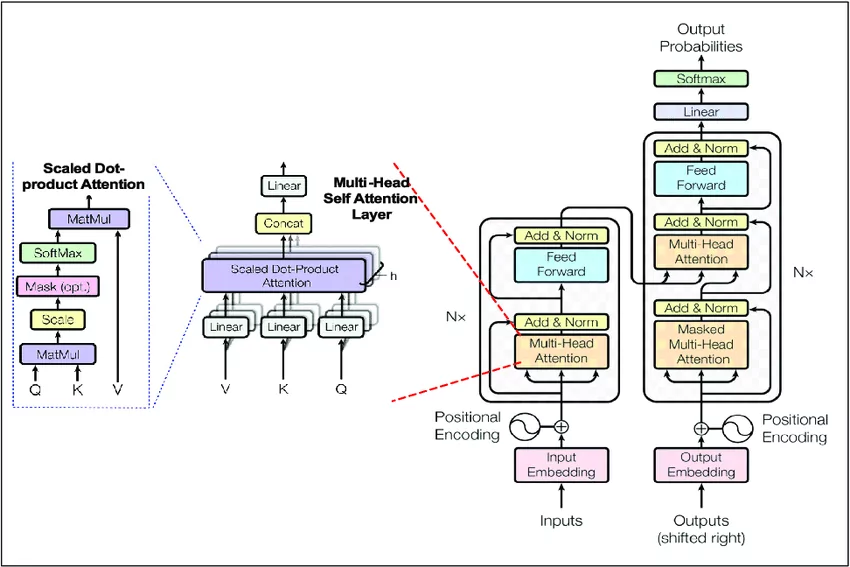

The Transformer Model

The Transformer is the foundation of present-day Large Language Models. Introduced in “Attention is All You Need” (Vaswani et al., 2017), it replaced the sequential processing of RNNs with parallel processing, which delivered both faster training and better model results.

Components of the Transformer:

- Input Embedding: The input text is first split into tokens, and each token is converted into an embedding.

- Positional Encoding: Because transformers process tokens in parallel, positional encoding is needed to preserve the order of tokens in the sequence.

- Multi-Head Self-Attention: Several attention heads attend to different parts of the input sequence, capturing diverse relationships among text elements.

- Feed-Forward Layer: A feed-forward layer applied to each token adds non-linearity and makes the model more expressive.

- Residual Connections and Layer Normalization: Residual connections let gradients flow unobstructed, and layer normalization normalizes outputs across layers. Together they keep training stable.

- Stacked Layers: A transformer stacks multiple identical layers, each of which further refines the model's understanding of the words.
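
Of the components above, positional encoding is the easiest to show in isolation. The sketch below assumes the fixed sinusoidal scheme from the Vaswani et al. paper; the article does not say which scheme it has in mind, and many models learn their position vectors instead. The function names are illustrative.

```python
import math

def positional_encoding(seq_len, d_model):
    """Return a seq_len x d_model table of sinusoidal position encodings."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Each pair of dimensions (2k, 2k+1) shares one frequency.
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            # Even dimensions use sine, odd dimensions use cosine.
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def add_positions(embeddings):
    """Add the position encoding to each token embedding, element by element."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(e_row, p_row)]
            for e_row, p_row in zip(embeddings, pe)]

# Two identical token embeddings become distinguishable once positions are added.
print(add_positions([[1.0, 1.0], [1.0, 1.0]]))
```

Because the encoding depends only on the position, the same token at two different positions ends up with two different input vectors, which is what lets a parallel model recover word order.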

Multi-Head Self-Attention Mechanism

Multi-head self-attention works in the following steps:

- Query, Key, and Value: For each token, the model produces three vectors: a Query (Q), a Key (K), and a Value (V).

- Attention Scores: Each token's Query vector is compared with the Key vectors of all tokens using a dot product, which yields the attention scores.

- Softmax: A softmax function turns the scores into probabilities that indicate which tokens should be prioritized.

- Weighted Sum: The Value vectors are summed, weighted by these attention probabilities, to produce the output for each token in the sequence.

With multiple heads, the model can capture different kinds of relationships in the text, which improves its grasp of context and nuance.
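
The steps above can be sketched in plain Python. Two things here are assumptions beyond the article's description: the scores are divided by the square root of the key dimension, as in the Vaswani et al. paper, and the heads are formed by slicing the input vectors directly. A real transformer gives each head its own learned projection matrices for Q, K, and V, plus an output projection, all omitted here.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention for one head; returns (outputs, weights)."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Dot product of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

def split_heads(vectors, num_heads):
    """Slice each vector into num_heads equal chunks, one list of vectors per head."""
    size = len(vectors[0]) // num_heads
    return [[v[h * size:(h + 1) * size] for v in vectors]
            for h in range(num_heads)]

def multi_head_attention(queries, keys, values, num_heads):
    """Run attention separately per head, then concatenate outputs per token."""
    heads = zip(split_heads(queries, num_heads),
                split_heads(keys, num_heads),
                split_heads(values, num_heads))
    per_head = [attention(q, k, v)[0] for q, k, v in heads]
    return [sum((head[t] for head in per_head), [])
            for t in range(len(queries))]

# Self-attention: the same toy token vectors serve as Q, K, and V.
x = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
_, weights = attention(x, x, x)
print([round(w, 3) for w in weights[0]])  # how much token 0 attends to each token
print(multi_head_attention(x, x, x, num_heads=2))
```

Each head sees a different slice of the representation, so the heads can assign different attention weights to the same pair of tokens.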

6. How Large Language Models Process Language

LLMs carry out NLP tasks through three stages: training, fine-tuning, and inference.

6.1 Training Phase

During training, the model processes large quantities of text and updates its parameters to improve its predictive accuracy. This phase requires extensive computing resources, because the training data can span millions of documents.
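
A toy version of this loop shows what "updating parameters to improve predictions" means. The sketch below is an assumption-heavy stand-in for real LLM training: the "model" is only a table of next-token logits (a bigram model), the objective is next-token cross-entropy, and `train_bigram` and its defaults are hypothetical names and values chosen for illustration.

```python
import math

def softmax(logits):
    """Turn raw logits into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_bigram(token_ids, vocab_size, epochs=50, lr=0.5):
    """Fit a table of next-token logits by gradient descent.

    Returns (logits, losses), where losses holds the mean loss per epoch.
    """
    logits = [[0.0] * vocab_size for _ in range(vocab_size)]
    pairs = list(zip(token_ids, token_ids[1:]))  # (previous token, next token)
    losses = []
    for _ in range(epochs):
        total = 0.0
        for prev, nxt in pairs:
            probs = softmax(logits[prev])
            total += -math.log(probs[nxt])  # cross-entropy for this pair
            # Gradient of cross-entropy w.r.t. the logits is (probs - one_hot).
            for j in range(vocab_size):
                grad = probs[j] - (1.0 if j == nxt else 0.0)
                logits[prev][j] -= lr * grad
        losses.append(total / len(pairs))
    return logits, losses

text = "the cat sat on the mat the cat ran".split()
vocab = sorted(set(text))
ids = [vocab.index(w) for w in text]
logits, losses = train_bigram(ids, len(vocab))
print(f"mean loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

The loss falls as the parameters move toward the statistics of the text. An LLM follows the same predict, measure, adjust cycle, with billions of parameters in place of this small table.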

6.2 Fine-Tuning Phase

Fine-tuning customizes a pre-trained model for a specific task. For example, to perform well on healthcare tasks, an LLM can be fine-tuned on a dataset of medical records that matches the requirements of that medical field.

6.3 Inference Phase

In the inference phase, the trained model makes predictions on data it did not see during training. Model optimization techniques such as quantization help increase processing speed and reduce resource usage.
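
Quantization can be illustrated with a short sketch. It assumes the simplest variant, symmetric per-tensor int8 quantization, in which one scale factor maps every weight to an integer in [-127, 127]. The function names are illustrative, and production toolkits use more elaborate schemes.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integers."""
    return [q * scale for q in quantized]

weights = [0.42, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)  # [42, -127, 0, 90]
print(f"largest rounding error: {max_error:.4f}")
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half the scale. That trade is why quantized models run faster and use less memory with only a small loss of precision.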

7. Applications of LLMs in NLP

LLMs have found proven, real-world applications across many industries:

- Chatbots and Virtual Assistants: LLMs let automated agents hold natural, context-aware conversations in customer support roles.

- Content Generation: Companies use LLMs to produce human-sounding text at scale, from marketing materials to news articles.

- Sentiment Analysis: Evaluating user opinions allows businesses to track brand sentiment.

- Translation: LLMs translate between many languages, which helps organizations make their content more accessible.

- Healthcare: These models support clinical diagnostics as well as patient and hospital operations.

- Education: Personalized learning platforms and automated tutors adapt educational content to individual students.

8. Challenges in NLP with LLMs

For all their strengths, LLMs also pose challenges:

- Bias: Models learn from historical data, so they can absorb the biases present in that data. Researchers are developing debiasing methods and ethical guidelines to reduce this tendency.

- Ethical Risks: LLMs can be misused to create harmful content or spread misinformation. Responsible AI practices and ethical guidelines are essential for addressing these risks.

- Resource Requirements: Training and deploying LLMs require substantial computational power and resources.

Image by – aporia

9. Future of LLMs in NLP

The future of LLMs is marked by continuous advancements:

- Smaller, More Efficient Models: Researchers are developing compact models that retain performance, making LLMs more accessible to a broader audience.

- Zero-shot and Few-shot Learning: New techniques allow LLMs to perform tasks without extensive additional training data, opening up applications in low-resource languages and specialized domains.

- Enhanced Multimodal Capabilities: Integrating text, images, and audio data allows for richer, more context-aware models.

- Domain-Specific Models: Industry-focused LLMs are being tailored for fields like law, finance, and healthcare, improving task accuracy in high-stakes environments.
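
Zero-shot and few-shot learning, mentioned in the list above, often come down to how the prompt is written. The sketch below only builds the prompt text and calls no model. `build_few_shot_prompt` and the "Text:" / "Label:" layout are hypothetical choices made for illustration, not a standard format.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a prompt from an instruction, labeled examples, and a new input.

    Passing an empty `examples` list yields a zero-shot prompt.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Text: {text}", f"Label: {label}", ""]
    # The final label is left blank for the model to complete.
    lines += [f"Text: {query}", "Label:"]
    return "\n".join(lines)

examples = [
    ("I love this phone", "positive"),
    ("The battery died in an hour", "negative"),
]
print(build_few_shot_prompt("Classify the sentiment.", examples, "Works as expected"))
```

The model receives the examples as part of its input and is expected to continue the pattern, so no parameters are updated and no additional training data is needed.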

10. Conclusion

Large Language Models have transformed the landscape of NLP, making it possible for machines to understand and generate human language at an unprecedented level. From chatbots to translation services and beyond, LLMs are reshaping how we interact with technology. Despite challenges, the future of LLMs is promising, with advancements focused on improving efficiency, accessibility, and ethical safeguards.

As LLM technology evolves, so does its potential to enhance and innovate across industries, offering exciting opportunities for those interested in exploring the depths of NLP.

Also Read:

To deepen your understanding of Large Language Models, explore Mastering Transformers: Unlock the Magic of LLM Coding 101, which breaks down the architecture powering today’s most advanced NLP systems. Complement this with Attention Mechanism 101: Mastering LLMs for Breakthrough AI Performance to grasp how models interpret context and meaning. For practical customization, Llama Fine-Tuning: Your Step-by-Step Guide walks through adapting models to specific business needs, while Mastering Mitigation of LLM Hallucination: Critical Risks and Proven Prevention Strategies addresses reliability challenges in real-world deployments. Developers can also experiment with The Ultimate 3 LLM Tools for Running Models Locally to gain hands-on experience, and broaden their perspective with Agentic AI vs Generative AI: Unleashing the Future of Intelligent Systems, which places Large Language Models within the evolving AI ecosystem.