How Word Embeddings Work

The Concept of a Vector Space:

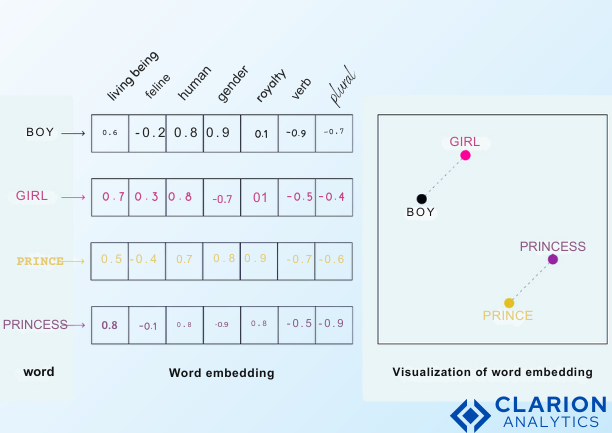

Words are represented as dense vectors in a high-dimensional space. Semantically related words lie close together in this space, while dissimilar words lie farther apart. Because the distance (or angle) between word vectors captures linguistic relationships, mathematical operations such as cosine similarity and vector arithmetic can reveal semantic connections.
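Both operations can be sketched in a few lines of plain Python. The 4-dimensional vectors below are hand-made toy values, not learned embeddings. The names `vectors`, `cosine` and `analogy` are illustrative only. Cosine similarity measures how close two words are. The classic "king - man + woman" arithmetic then looks for the word nearest to the resulting vector.

```python
import math

# Hand-made 4-d toy vectors (dimensions loosely: royalty, male, female, fruit).
# Real embeddings are learned from data and usually have hundreds of dimensions.
vectors = {
    "king":  [0.9, 0.8, 0.1, 0.0],
    "queen": [0.9, 0.1, 0.8, 0.0],
    "man":   [0.1, 0.8, 0.1, 0.0],
    "woman": [0.1, 0.1, 0.8, 0.0],
    "apple": [0.0, 0.1, 0.1, 0.9],
}

def cosine(u, v):
    """Cosine similarity: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(a, b, c):
    """Solve 'a is to b as c is to ?' by finding the word nearest to b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))

print(round(cosine(vectors["king"], vectors["queen"]), 3))  # 0.664
print(round(cosine(vectors["king"], vectors["apple"]), 3))  # 0.082
print(analogy("man", "king", "woman"))                        # queen
```

With learned embeddings the analogy result is usually a near neighbour rather than an exact match. Excluding the input words from the candidates, as done here, is a common convention.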

Training Word Embeddings:

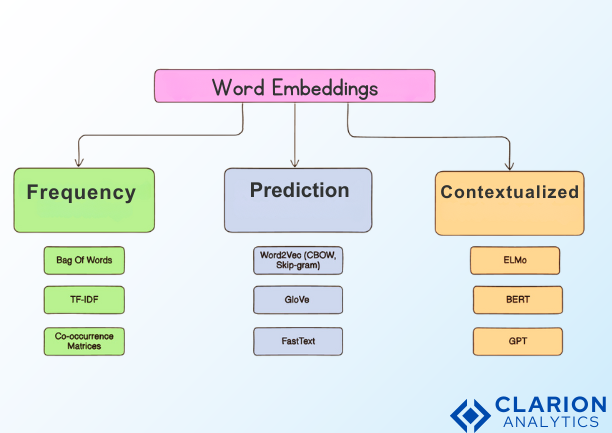

Methods of Contextual Prediction:

Skip-gram: Given a target word, predicts the surrounding context words

Continuous Bag of Words (CBOW): Uses the surrounding context words to predict the target word
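The difference between the two objectives shows up in the training examples each one extracts from text. The sketch below assumes a simple symmetric window. The function names are hypothetical helpers, not part of any Word2Vec library. It turns one tokenized sentence into skip-gram (target, context) pairs and CBOW (context words, target) examples.

```python
def skipgram_pairs(tokens, window=2):
    """Skip-gram: each (target, context) pair asks the model to predict context from target."""
    pairs = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs

def cbow_examples(tokens, window=2):
    """CBOW: each (context_words, target) example asks the model to predict target from context."""
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples

tokens = "the cat sat on the mat".split()
print(skipgram_pairs(tokens, window=1)[:4])
# [('the', 'cat'), ('cat', 'the'), ('cat', 'sat'), ('sat', 'cat')]
print(cbow_examples(tokens, window=1)[2])
# (['cat', 'on'], 'sat')
```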

Co-occurrence Matrix Techniques:

GloVe: Generates embeddings from global word co-occurrence statistics, capturing the statistical relationships between words across large text collections
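The co-occurrence statistics these techniques start from can be collected with a sliding window. This is a minimal sketch with a made-up two-sentence corpus. `cooccurrence_counts` is a hypothetical helper. The optional 1/distance weighting follows the decreasing weighting described in the GloVe paper, but the rest is a simplification.

```python
from collections import defaultdict

def cooccurrence_counts(sentences, window=2, distance_weighting=True):
    """Symmetric word-word co-occurrence counts within a sliding window.

    With distance_weighting=True, a pair d tokens apart adds 1/d;
    otherwise every pair inside the window adds 1.
    """
    counts = defaultdict(float)
    for tokens in sentences:
        for i, word in enumerate(tokens):
            # Look back up to `window` tokens and record both directions.
            for j in range(max(0, i - window), i):
                increment = 1.0 / (i - j) if distance_weighting else 1.0
                counts[(word, tokens[j])] += increment
                counts[(tokens[j], word)] += increment
    return dict(counts)

corpus = [["ice", "is", "cold"], ["steam", "is", "hot"]]
X = cooccurrence_counts(corpus, window=2)
print(X[("ice", "is")], X[("ice", "cold")])  # 1.0 0.5
```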

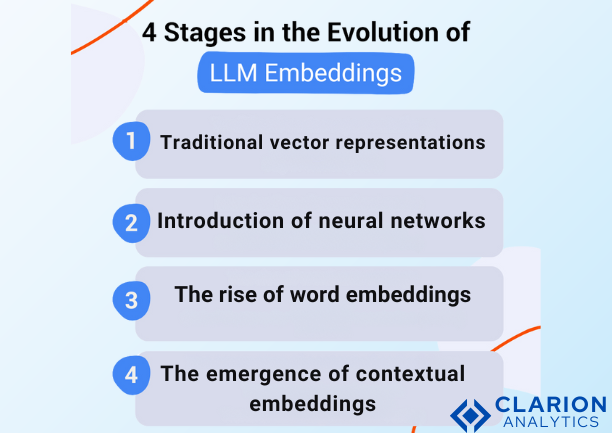

Dynamic versus Static Embeddings:

Static Embeddings:

Word2Vec and GloVe produce a single, fixed representation for each word.

Regardless of context, the same word always receives the same vector.

Dynamic (Contextual) Embeddings:

Produced by models such as BERT and GPT

Word representations vary according to the surrounding context.

Capture subtle differences in meaning across sentences (for example, "bank" in "river bank" versus "bank account").
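The contrast can be illustrated with a deliberately crude toy. A static lookup returns the same vector for "bank" in both sentences. The toy "contextual" function instead blends each word's vector with the average of its neighbours, so the two occurrences of "bank" diverge. This blending is an assumption made purely for illustration. Real models such as BERT compute contextual vectors with stacked Transformer self-attention layers, not this average. All vectors are hand-made.

```python
# Hand-made 2-d static vectors (dimensions loosely: "nature", "finance").
static = {
    "river": [1.0, 0.0],
    "water": [0.9, 0.1],
    "money": [0.0, 1.0],
    "loan":  [0.1, 0.9],
    "bank":  [0.5, 0.5],  # ambiguous word sits between the two senses
}

def static_embed(tokens):
    """Static lookup: a word's vector ignores its neighbours."""
    return [static[t] for t in tokens]

def toy_contextual_embed(tokens, mix=0.5):
    """Toy stand-in for a contextual model (NOT how BERT works): blend each
    word's static vector with the mean vector of the other words in the sentence."""
    out = []
    for i, t in enumerate(tokens):
        others = [static[u] for j, u in enumerate(tokens) if j != i]
        if not others:  # single-word input: nothing to blend with
            out.append(list(static[t]))
            continue
        context = [sum(dim) / len(others) for dim in zip(*others)]
        out.append([(1 - mix) * s + mix * c for s, c in zip(static[t], context)])
    return out

s1, s2 = ["river", "bank", "water"], ["money", "bank", "loan"]
print(static_embed(s1)[1] == static_embed(s2)[1])  # True
print(toy_contextual_embed(s1)[1], toy_contextual_embed(s2)[1])
```

In the first sentence the contextual "bank" leans toward the "nature" dimension. In the second it leans toward "finance".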

Are you intrigued by the possibilities of AI? Let’s chat! We’d love to answer your questions and show you how AI can transform your industry. Contact Us

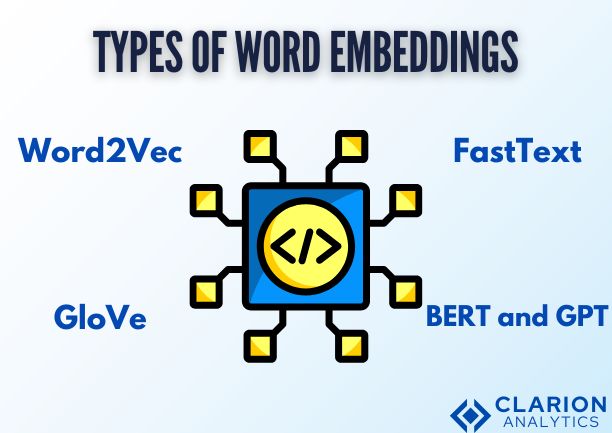

Types of Word Embeddings

Word2Vec: A neural-network-based method that learns word representations in one of two ways. CBOW predicts a target word from its surrounding context, and Skip-gram predicts the surrounding words from a target word. By training a shallow neural network on large text corpora, it produces vector representations that reflect word meanings and relationships.
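One widely used Word2Vec training objective is skip-gram with negative sampling (SGNS). This is a minimal NumPy sketch of it, with hypothetical function names and illustrative hyperparameters. For each (target, context) pair, the model learns to score the real context word high and a few random "noise" words low. Word2Vec's reference implementation also draws negatives from the unigram distribution raised to the 3/4 power, subsamples frequent words and decays the learning rate. This sketch omits all of that.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def skipgram_pairs(tokens, window=2):
    """(target, context) pairs within a symmetric window."""
    return [(tokens[i], tokens[j])
            for i in range(len(tokens))
            for j in range(max(0, i - window), min(len(tokens), i + window + 1))
            if j != i]

def train_sgns(sentences, dim=8, negatives=3, lr=0.05, epochs=50, seed=0):
    """Skip-gram with negative sampling (SGNS), trained with plain SGD."""
    rng = np.random.default_rng(seed)
    vocab = sorted({w for s in sentences for w in s})
    idx = {w: i for i, w in enumerate(vocab)}
    pairs = [(idx[t], idx[c]) for s in sentences for t, c in skipgram_pairs(s)]
    W_in = rng.normal(scale=0.1, size=(len(vocab), dim))  # target vectors (kept as embeddings)
    W_out = np.zeros((len(vocab), dim))                    # context vectors
    losses = []
    for _ in range(epochs):
        total = 0.0
        for t, c in pairs:
            # Simplification: uniform negatives, dropping any that equal the true context.
            negs = rng.integers(0, len(vocab), size=negatives)
            ids = np.concatenate(([c], negs[negs != c]))
            labels = np.zeros(len(ids))
            labels[0] = 1.0                          # 1 = observed context, 0 = noise word
            v = W_in[t].copy()
            scores = sigmoid(W_out[ids] @ v)
            total += -np.log(scores[0] + 1e-10) - np.sum(np.log(1.0 - scores[1:] + 1e-10))
            grad = scores - labels                   # d(loss)/d(logit) per scored word
            W_in[t] -= lr * (grad @ W_out[ids])
            np.add.at(W_out, ids, -lr * np.outer(grad, v))  # accumulates repeated ids
        losses.append(total / len(pairs))
    return vocab, W_in, losses

sentences = [s.split() for s in [
    "the king rules the kingdom", "the queen rules the kingdom",
    "the dog chases the cat", "the cat chases the mouse"]]
vocab, embeddings, losses = train_sgns(sentences)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

The rows of `embeddings` are the learned word vectors. On a corpus this small they only show that the objective is being optimized, not meaningful semantics.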

GloVe: Global Vectors for Word Representation (GloVe) first builds a matrix recording how frequently words co-occur across a corpus. It then learns dense vectors whose dot products, plus per-word bias terms, approximate the logarithms of those co-occurrence counts. The resulting vectors encode semantic meaning derived from global corpus statistics.
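The GloVe objective is a weighted least-squares fit. It minimizes J = Σ f(X_ij) (w_i · w̃_j + b_i + b̃_j − log X_ij)² over all word pairs with X_ij > 0. The weighting function is f(x) = (x / x_max)^α below x_max and 1 above it, with x_max = 100 and α = 3/4 as in the paper. The sketch below uses a made-up 4×4 count matrix and hypothetical function names. It trains with full-batch gradient descent instead of the paper's AdaGrad, purely for brevity.

```python
import numpy as np

def glove_weight(x, x_max=100.0, alpha=0.75):
    """GloVe weighting f(x): (x / x_max)^alpha below x_max, 1 above it."""
    return np.where(x < x_max, (x / x_max) ** alpha, 1.0)

def train_glove(X, dim=4, lr=0.05, epochs=300, seed=0):
    """Fit w_i . w~_j + b_i + b~_j to log X_ij by weighted least squares,
    using full-batch gradient descent (the paper uses AdaGrad)."""
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    W = rng.normal(scale=0.1, size=(n, dim))      # word vectors
    W_ctx = rng.normal(scale=0.1, size=(n, dim))  # context-word vectors
    b, b_ctx = np.zeros(n), np.zeros(n)           # per-word biases
    observed = X > 0                              # zero counts are excluded (log 0 undefined)
    log_X = np.log(np.where(observed, X, 1.0))
    f = np.where(observed, glove_weight(X), 0.0)
    losses = []
    for _ in range(epochs):
        diff = W @ W_ctx.T + b[:, None] + b_ctx[None, :] - log_X
        weighted = f * diff
        losses.append(float(np.sum(weighted * diff)))
        grad_W = 2 * weighted @ W_ctx
        grad_W_ctx = 2 * weighted.T @ W
        W -= lr * grad_W
        W_ctx -= lr * grad_W_ctx
        b -= lr * 2 * weighted.sum(axis=1)
        b_ctx -= lr * 2 * weighted.sum(axis=0)
    return W + W_ctx, losses   # the paper uses the sum of both vector sets

# Toy symmetric co-occurrence counts (made-up numbers) for four words.
words = ["ice", "steam", "solid", "gas"]
X = np.array([[0, 2, 8, 1],
              [2, 0, 1, 7],
              [8, 1, 0, 1],
              [1, 7, 1, 0]], dtype=float)
vectors, losses = train_glove(X)
print(f"weighted loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```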

FastText: FastText extends Word2Vec by splitting words into character n-grams (subword units) and building each word's vector from the vectors of its n-grams. Capturing internal word structure in this way improves representations of rare and compound words. It also makes it possible to produce vectors for words never seen during training, and it works well for morphologically rich languages.
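The subword idea can be sketched as follows. Each word is wrapped in the boundary markers `<` and `>`, and its character n-grams are extracted; fastText's defaults use lengths 3 to 6. The vectors of those n-grams are then combined, so even an unseen word gets a vector. fastText hashes n-grams into a fixed number of buckets. Here `zlib.crc32`, the 1,000-bucket table and the random (untrained) vectors are illustrative assumptions. The sketch averages the n-gram vectors, whereas the paper describes a sum, which differs only by scale. It also omits the extra whole-word vector fastText keeps for in-vocabulary words.

```python
import zlib
import numpy as np

def char_ngrams(word, n_min=3, n_max=6):
    """Character n-grams of the word wrapped in boundary markers < and >."""
    token = f"<{word}>"
    return [token[i:i + n]
            for n in range(n_min, n_max + 1)
            for i in range(len(token) - n + 1)]

# Illustrative stand-ins: a small hashed n-gram table with random vectors.
# In a trained model these vectors are learned; the bucket count here is arbitrary.
BUCKETS, DIM = 1000, 8
ngram_vectors = np.random.default_rng(0).normal(size=(BUCKETS, DIM))

def subword_vector(word):
    """Average the vectors of a word's n-grams; works for unseen words too."""
    ids = [zlib.crc32(g.encode("utf-8")) % BUCKETS for g in char_ngrams(word)]
    return ngram_vectors[ids].mean(axis=0)

print(char_ngrams("where", 3, 3))              # ['<wh', 'whe', 'her', 'ere', 're>']
print(subword_vector("unfriendliness").shape)  # (8,)
```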

Applications of Word Embeddings

Sentiment Analysis: By encoding semantic nuances, embeddings support sentiment classification and give models a richer understanding of emotional context than keyword matching alone. Words with similar sentiment often lie close together in vector space. As a result, a classifier can generalize to sentiment-bearing words that never appeared in its labeled training examples.
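A minimal sketch of that generalization uses a nearest-centroid classifier. Each text is represented by the average of its word vectors, and a new text takes the label of the closest class centroid. The 2-dimensional embeddings, training sentences and function names are all hypothetical. Production systems would use learned embeddings and a trained classifier, for example logistic regression or a neural network.

```python
import math

# Hypothetical 2-d embeddings (dimensions loosely: positive, negative).
emb = {
    "great": [0.9, 0.1], "love": [0.8, 0.2], "excellent": [0.95, 0.05],
    "awful": [0.1, 0.9], "hate": [0.2, 0.8], "terrible": [0.05, 0.95],
    "the": [0.5, 0.5], "movie": [0.5, 0.5], "was": [0.5, 0.5],
}

def sentence_vector(text):
    """Mean of the known word vectors in the text (unknown words are skipped)."""
    vecs = [emb[w] for w in text.lower().split() if w in emb]
    if not vecs:
        return [0.0, 0.0]
    return [sum(dim) / len(vecs) for dim in zip(*vecs)]

def fit_centroids(labelled):
    """One centroid (mean sentence vector) per label."""
    by_label = {}
    for text, label in labelled:
        by_label.setdefault(label, []).append(sentence_vector(text))
    return {label: [sum(d) / len(vs) for d in zip(*vs)] for label, vs in by_label.items()}

def predict(text, centroids):
    """Label of the centroid nearest (Euclidean distance) to the text's vector."""
    v = sentence_vector(text)
    return min(centroids, key=lambda label: math.dist(v, centroids[label]))

train = [("great movie", "pos"), ("love the movie", "pos"),
         ("awful movie", "neg"), ("hate the movie", "neg")]
centroids = fit_centroids(train)
print(predict("excellent movie", centroids))         # pos ("excellent" is not in the training data)
print(predict("the movie was terrible", centroids))  # neg
```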

Search Engines: Semantic embeddings improve search relevance by modeling word meanings and relationships, enabling:

Contextual matching that goes beyond exact keyword searches

Recognition of user intent

Better handling of synonyms and related concepts
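In its simplest form, semantic search embeds the query and each document, then ranks documents by cosine similarity. This minimal sketch assumes averaged word vectors and hand-made 3-dimensional embeddings; all names are hypothetical. The top result for "cheap car" shares no keyword with the query, which is the "beyond exact keyword" behaviour described above. Real systems typically use sentence-level encoders and approximate nearest-neighbour indexes.

```python
import math

# Hypothetical 3-d embeddings (dimensions loosely: vehicles, food, finance).
emb = {
    "car": [0.9, 0.0, 0.1], "automobile": [0.95, 0.0, 0.05],
    "repair": [0.6, 0.1, 0.3], "shop": [0.3, 0.3, 0.4], "cheap": [0.3, 0.3, 0.4],
    "pizza": [0.0, 0.95, 0.05], "recipe": [0.05, 0.9, 0.05],
    "bank": [0.05, 0.0, 0.95], "loan": [0.1, 0.0, 0.9],
}

def embed(text):
    """Average the vectors of known words; unknown words are ignored."""
    vecs = [emb[w] for w in text.lower().split() if w in emb]
    return [sum(d) / len(vecs) for d in zip(*vecs)] if vecs else [0.0, 0.0, 0.0]

def cosine(u, v):
    nu, nv = math.hypot(*u), math.hypot(*v)
    return sum(a * b for a, b in zip(u, v)) / (nu * nv) if nu and nv else 0.0

def search(query, docs):
    """Documents sorted by cosine similarity to the query, best first."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)

docs = ["automobile repair shop", "easy pizza recipe", "bank loan rates"]
print(search("cheap car", docs)[0])  # automobile repair shop
```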

Virtual Assistants and Chatbots:

Embeddings improve conversational AI by:

Enhancing natural language understanding

Enabling more contextually relevant responses

Improving intent recognition

Machine Translation: By mapping semantic relationships across languages, word embeddings help bridge linguistic gaps:

Capturing linguistic nuances

Using semantic understanding to increase translation accuracy
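One established way to relate two embedding spaces across languages is to learn a linear map from source-language vectors to target-language vectors using a small bilingual dictionary. Constraining the map to be orthogonal gives a closed-form solution (orthogonal Procrustes). The article does not specify a technique, so this is one illustrative option. The sketch uses synthetic data: the "target language" is a hidden rotation of the "source language" plus noise, and the function names are hypothetical.

```python
import numpy as np

def procrustes(X, Y):
    """Orthogonal W minimising ||X W - Y||_F: W = U V^T, where U S V^T = svd(X^T Y)."""
    U, _, Vt = np.linalg.svd(X.T @ Y)
    return U @ Vt

def translate(i, X, Y, W):
    """Index of the target-space vector with the highest cosine similarity to X[i] W."""
    mapped = X[i] @ W
    sims = (Y @ mapped) / (np.linalg.norm(Y, axis=1) * np.linalg.norm(mapped))
    return int(np.argmax(sims))

# Synthetic stand-in for a bilingual dictionary: row k of X and row k of Y are
# "translations" of each other. Y is a hidden rotation of X plus a little noise.
rng = np.random.default_rng(0)
dim, n_pairs = 5, 50
X = rng.normal(size=(n_pairs, dim))
R, _ = np.linalg.qr(rng.normal(size=(dim, dim)))
Y = X @ R + 0.01 * rng.normal(size=(n_pairs, dim))

W = procrustes(X, Y)
print(np.abs(W - R).max())    # small: the hidden rotation is approximately recovered
print(translate(3, X, Y, W))  # nearest target vector for source word 3
```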

Text Summarization: By determining the semantic significance of words, embeddings assist models in identifying important concepts.

Recognizing context
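A simple embedding-based approach to this is centroid-based extractive summarization. Each sentence gets the mean vector of its words. The sentences closest (by cosine) to the mean vector of the whole document are kept, in their original order. This is one illustrative technique among many, not a description of any particular system. The 2-dimensional embeddings and helper names are hypothetical.

```python
import math

# Hypothetical 2-d embeddings (dimensions loosely: weather, sport).
emb = {
    "rain": [0.9, 0.1], "wind": [0.85, 0.15], "storm": [0.95, 0.05],
    "forecast": [0.7, 0.3], "football": [0.1, 0.9], "match": [0.2, 0.8],
}

def words(sentence):
    return [w.strip(".,!?").lower() for w in sentence.split()]

def mean(vecs):
    return [sum(d) / len(vecs) for d in zip(*vecs)]

def cosine(u, v):
    return sum(a * b for a, b in zip(u, v)) / (math.hypot(*u) * math.hypot(*v))

def summarize(sentences, k=1):
    """Keep the k sentences whose mean word vector is most similar to the document centroid."""
    centroid = mean([emb[w] for s in sentences for w in words(s) if w in emb])

    def score(s):
        vecs = [emb[w] for w in words(s) if w in emb]
        return cosine(mean(vecs), centroid) if vecs else -1.0  # no known words: rank last

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return [sentences[i] for i in sorted(ranked[:k])]  # restore original order

sentences = ["Heavy rain and wind are expected.",
             "The storm forecast worsened.",
             "The football match was postponed."]
print(summarize(sentences, k=2))
```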

Benefits and Challenges of Word Embeddings

Benefits:

Rich semantic representation that captures complex word relationships

Scalable across a range of natural language processing tasks

Seamless integration with deep learning models

More sophisticated understanding of linguistic context

Challenges:

Biases in the training data are mirrored in the embeddings, with the risk of reinforcing existing linguistic and social biases

Difficulty representing rare or out-of-vocabulary words, especially for word-level methods such as Word2Vec and GloVe

Substantial computational resources required to generate embeddings

Large, high-quality datasets needed for effective training

Limited interpretability of complex embedding spaces

Building Word Embeddings: Tools and Frameworks

Popular Tools and Libraries:

Gensim: A Python library widely used for Word2Vec (and FastText) implementations

PyTorch and TensorFlow: Flexible frameworks for building custom embedding models

Hugging Face: A platform for pretrained models such as BERT and GPT

How to Make Word Embeddings:

Preparing Data:

Clean up and prepare the text corpus.

Normalize and tokenize textual data
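The data-preparation step can be sketched with the standard library alone. This assumes simple regex rules: Unicode normalization, removal of HTML tags and URLs, lowercasing, and word tokenization that keeps simple contractions. The function name and rules are illustrative. Real pipelines usually rely on a dedicated tokenizer suited to the language and the embedding method.

```python
import re
import unicodedata

def preprocess(text):
    """Clean, normalize and tokenize one document using simple regex rules."""
    text = unicodedata.normalize("NFKC", text)  # unify Unicode forms
    text = re.sub(r"<[^>]+>", " ", text)        # strip HTML tags
    text = re.sub(r"https?://\S+", " ", text)   # strip URLs
    text = text.lower()                         # case-fold
    return re.findall(r"\w+(?:'\w+)?", text)    # words, keeping simple contractions

print(preprocess("<p>The Cat's toy!</p> See https://example.com NOW."))
# ['the', "cat's", 'toy', 'see', 'now']
```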

Choosing an Embedding Method:

Select the appropriate technique (Word2Vec, GloVe, or FastText).

Take into account the specific use case and available computational resources.

Assessing Quality:

Use standard benchmarks, such as word-similarity and analogy datasets

Evaluate semantic accuracy

Verify performance on the target downstream tasks.
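A common intrinsic check on word-similarity benchmarks is Spearman's rank correlation. It compares the model's cosine similarities for word pairs with human similarity ratings for the same pairs. The ratings and model scores below are made-up numbers for illustration, and `rank`/`spearman` are hypothetical helpers. In practice `scipy.stats.spearmanr` computes the same statistic.

```python
import math

def rank(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman's rho: Pearson correlation of the rank vectors."""
    rx, ry = rank(x), rank(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)

# Made-up ratings for four word pairs, e.g. (car, automobile), (coast, shore),
# (food, fruit), (noon, string), and cosine scores from two hypothetical models.
human = [9.0, 7.5, 3.0, 1.0]
model_a = [0.82, 0.70, 0.35, 0.10]
model_b = [0.82, 0.30, 0.35, 0.10]
print(round(spearman(human, model_a), 3))  # 1.0
print(round(spearman(human, model_b), 3))  # 0.8
```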

Future Trends in Word Embeddings

Hybrid Models: Integrating symbolic, rule-based reasoning with neural embedding representations to build AI systems that are more transparent and interpretable.

Multimodal Embeddings:

Creating embedding methods that jointly represent:

Text

Audio signals and image data

Other multimedia content

The goal is deeper, more complete semantic understanding across modalities

Bias Mitigation:

Developing embedding methods to:

Reduce the biases present in training data

Produce more inclusive and neutral word representations

Create algorithmic methods to identify and reduce discriminatory patterns in semantic spaces.
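One well-known algorithmic method is the "neutralize" step of hard debiasing (Bolukbasi et al., 2016). It estimates a bias direction, for example he − she, and removes each neutral word's component along that direction. Below is a minimal sketch with hand-made 3-dimensional vectors and a hypothetical `neutralize` helper. The full method estimates the direction from several word pairs and also "equalizes" pairs such as he/she. Later studies found that projection alone removes only part of the bias.

```python
import numpy as np

def neutralize(vector, direction):
    """Remove the component of `vector` along `direction` (projection removal)."""
    unit = direction / np.linalg.norm(direction)
    return vector - (vector @ unit) * unit

# Hypothetical 3-d vectors in which the first axis encodes the he/she contrast.
he = np.array([0.9, 0.2, 0.3])
she = np.array([0.1, 0.2, 0.3])
engineer = np.array([0.4, 0.7, 0.5])

gender_direction = he - she
debiased = neutralize(engineer, gender_direction)
print(engineer @ gender_direction)  # non-zero before: "engineer" leans toward "he"
print(debiased @ gender_direction)  # approximately 0 after neutralizing
```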

Conclusion

Word embeddings are essential to contemporary natural language processing, and Large Language Models (LLMs) rely heavily on them. By converting words into numerical vectors that capture semantic relationships, embeddings allow machines to process human language with unprecedented depth and nuance.

Also Read:

To fully grasp the power of Word Embeddings, it’s essential to begin with Large Language Models 101: NLP Fundamentals for Smarter AI Solutions, which lays the groundwork for understanding how modern NLP systems represent language. Building on that foundation, Mastering Transformers: Unlock the Magic of LLM Coding 101 and Attention Mechanism 101: Mastering LLMs for Breakthrough AI Performance explain how contextual relationships enhance embeddings into meaningful representations. For practical application, Llama Fine-Tuning: Your Step-by-Step Guide demonstrates how embedding-based models can be customized for specific domains, while Revolutionizing Data: Harnessing LLMs for Knowledge Graph Construction to Unlock Powerful Insights showcases how semantic embeddings power advanced data structuring. Finally, Mastering Mitigation of LLM Hallucination: Critical Risks and Proven Prevention Strategies highlights the importance of reliability when deploying Word Embeddings in real-world AI systems.
