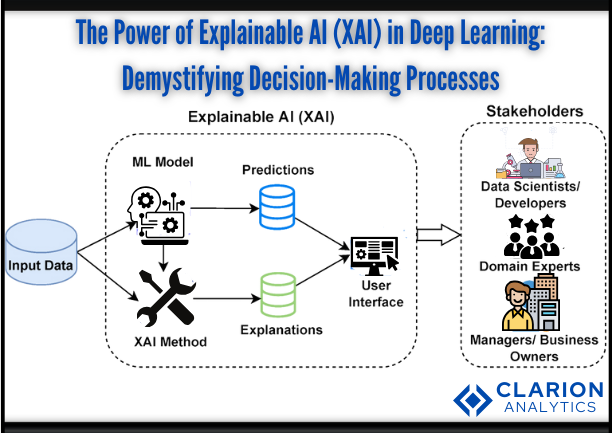

XAI, or Explainable AI, provides insight into the classification and decision-making processes of AI systems, helping humans understand how a machine reaches its conclusions. It makes sense that this branch of AI sits at the crossroads of sociotechnical systems: there is a growing need and desire to integrate AI into society. Understanding how these systems reach their decisions is critical for gaining acceptance, ensuring compliance with industry standards, and building trust in systems that are expected to render unbiased recommendations. So let us examine the power of Explainable AI (XAI) in deep learning.

In deep learning, models are often viewed as "black boxes": it is not clear how they receive, process, and produce data. This lack of transparency undermines trust in emerging technologies and slows their widespread adoption. For software practitioners and CXOs, explainability is not only a scientific concept; it is a business requirement. AI-enabled systems can only be trusted if the relevant stakeholders can access and verify their reasoning.

Importance of Deep Learning Interpretability

Interpretability in deep learning refers to the ability to comprehend and articulate the decision-making process of a model. This is crucial for several reasons:

- Model Adoption and Deployment: When stakeholders can understand how a model works, they are more likely to trust and adopt it. This is especially important in sectors like healthcare, finance, and autonomous driving, where decisions can have significant consequences.

- Debugging and Improvement: Interpretability allows developers to identify and rectify errors, biases, and inefficiencies in models, leading to better performance and reliability.

- Compliance and Ethical Standards: Many industries are subject to regulations that require transparency in decision-making processes. Explainable models help meet these legal requirements and address ethical concerns.

Intrigued by the possibilities of AI? Let’s chat! We’d love to answer your questions and show you how AI can transform your industry. Contact Us

Common Explainable AI Techniques in Computer Vision

In computer vision, Explainable AI techniques play a vital role in elucidating how deep learning models interpret visual data. Three popular techniques are LIME, SHAP, and Grad-CAM.

2.1. LIME (Local Interpretable Model-agnostic Explanations)

LIME explains individual predictions of any machine learning model by approximating that model locally. Around the prediction being explained, it fits a simple, interpretable surrogate, typically a weighted linear regression. In computer vision, LIME perturbs the input image (for example, by hiding groups of pixels called superpixels) and observes how the model's prediction changes, which reveals the image regions that most influence the decision. Applications include medical image diagnosis, object detection in self-driving vehicles, and anomaly detection in surveillance systems.
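To make the perturb-and-fit idea concrete, here is a minimal, dependency-free Python sketch of LIME's core loop. It is an illustration, not the `lime` library's API: the image is assumed to be pre-split into `n_segments` regions, and `predict` is a hypothetical function that takes a binary mask (1 = region kept, 0 = region hidden) and returns the model's probability for the class being explained. The proximity kernel, which is based on the fraction of hidden regions, is a simplified assumption.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def lime_explain(predict, n_segments, n_samples=500, kernel_width=0.25,
                 ridge=1e-3, seed=0):
    """Return one importance weight per image segment (LIME-style sketch)."""
    rng = random.Random(seed)
    # 1. Perturb: random binary masks; the first one is the unmodified image.
    masks = [[1] * n_segments]
    for _ in range(n_samples - 1):
        masks.append([rng.randint(0, 1) for _ in range(n_segments)])
    # 2. Query the black-box model on every perturbed input.
    ys = [predict(mask) for mask in masks]
    # 3. Weight samples by closeness to the original image (simplified kernel:
    #    distance = fraction of segments hidden).
    weights = [
        math.exp(-((1 - sum(mask) / n_segments) ** 2) / kernel_width ** 2)
        for mask in masks
    ]
    # 4. Fit a weighted ridge regression y ~ b0 + sum_k b_k * mask_k by solving
    #    (X^T W X + ridge * I) b = X^T W y; the intercept is not penalized.
    d = n_segments + 1
    xtwx = [[0.0] * d for _ in range(d)]
    xtwy = [0.0] * d
    for mask, y, w in zip(masks, ys, weights):
        x = [1.0] + [float(v) for v in mask]
        for i in range(d):
            xtwy[i] += w * x[i] * y
            for j in range(d):
                xtwx[i][j] += w * x[i] * x[j]
    for i in range(1, d):
        xtwx[i][i] += ridge
    coef = solve_linear_system(xtwx, xtwy)
    # The surrogate's coefficients are the explanation: one weight per segment.
    return coef[1:]


# Usage with a hypothetical model whose score depends on segments 0 and 2.
def toy_predict(mask):
    return 0.1 + 0.7 * mask[0] + 0.2 * mask[2]


importances = lime_explain(toy_predict, n_segments=4)
print([round(w, 3) for w in importances])
```

For this toy model, the sketch assigns region 0 the largest weight (close to 0.7), region 2 a weight close to 0.2, and regions 1 and 3 weights near zero. In a real pipeline, `predict` would blank out the masked superpixels and call the classifier; the small ridge term keeps the linear solve stable when some regions are rarely toggled.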

2.2. SHAP (SHapley Additive exPlanations)

SHAP is a game-theoretic approach to explaining the predictions of machine learning models. It assigns each feature an importance value for a particular prediction. Because SHAP is built on Shapley values from cooperative game theory, it distributes the prediction among the features fairly. Its main strength is that it produces consistent feature-importance measures across many types of models. In computer vision, SHAP is well suited to visual explanation because it helps researchers identify the image regions that drive a model's decisions.
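The Shapley-value idea behind SHAP can be shown with a brute-force sketch that enumerates every coalition of features. This is an illustration, not the `shap` library: it assumes a "missing" feature is replaced by a baseline value, and its cost grows exponentially with the number of features, which is why the library relies on faster estimators such as KernelSHAP or model-specific algorithms.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x, enumerating every feature coalition.

    Assumption: a feature outside the coalition takes its baseline value.
    """
    n = len(x)

    def value(coalition):
        # Model output when only the features in `coalition` are "present".
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0 .. n-1 (feature i excluded)
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Usage: a toy model with an interaction between features 1 and 2.
def toy_model(z):
    return 2 * z[0] + 3 * z[1] * z[2]


phi = shapley_values(toy_model, x=[1, 1, 1], baseline=[0, 0, 0])
print(phi)
```

Here the result is approximately `[2.0, 1.5, 1.5]`: the interaction term is split evenly between features 1 and 2, and the values add up to `f(x) - f(baseline) = 5`. This additivity is the "fair distribution" property the paragraph above refers to.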

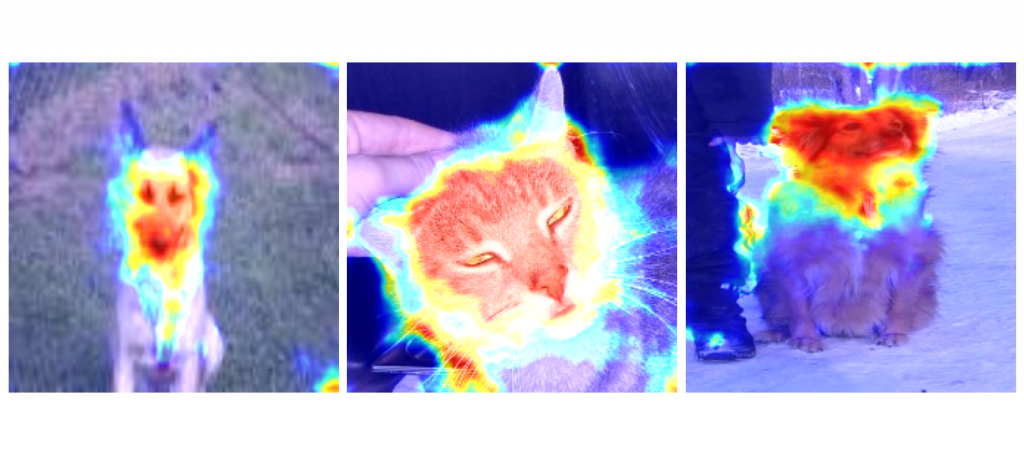

2.3. Grad-CAM (Gradient-weighted Class Activation Mapping)

Grad-CAM is a technique designed specifically for convolutional neural networks (CNNs) in computer vision. It produces visual explanations that highlight the regions of the input image that matter most for the model's prediction. To do this, Grad-CAM computes a coarse localization map from the gradients of the target class score flowing into the final convolutional layer. It has become a fundamental tool in computer vision, helping experts understand predicted outputs and validate AI systems by showing which image regions were important.
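The core Grad-CAM computation is short once the activations and gradients are available. The sketch below assumes they have already been extracted (in practice, through a deep learning framework's automatic differentiation) and are passed in as nested Python lists. Each feature map is weighted by the spatial average of its gradients, the weighted maps are summed, and a ReLU keeps only regions with a positive influence on the target class. Real implementations usually also upsample the coarse map to the input image size, which is omitted here.

```python
def grad_cam(activations, gradients):
    """Combine conv-layer activations and gradients into a Grad-CAM heatmap.

    activations[k][i][j]: value of feature map k at spatial position (i, j).
    gradients[k][i][j]:   d(target class score) / d(activations[k][i][j]).
    """
    num_maps = len(activations)
    height, width = len(activations[0]), len(activations[0][0])
    # alpha_k: global average of the gradients over the spatial grid.
    alphas = [
        sum(sum(row) for row in gradients[k]) / (height * width)
        for k in range(num_maps)
    ]
    # Weighted sum of the feature maps.
    cam = [[0.0] * width for _ in range(height)]
    for k in range(num_maps):
        for i in range(height):
            for j in range(width):
                cam[i][j] += alphas[k] * activations[k][i][j]
    # ReLU: keep only regions that increase the target class score.
    cam = [[max(v, 0.0) for v in row] for row in cam]
    # Scale to [0, 1] for display (a common convention, not part of the method).
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Usage: two 2x2 feature maps; map 0 supports the class, map 1 opposes it.
cam = grad_cam(
    activations=[[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]],
    gradients=[[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]],
)
print(cam)  # [[1.0, 0.0], [0.0, 0.0]]
```

Only the top-left cell lights up: it is where the positively weighted feature map fires, while the bottom-right cell, driven by a map with negative average gradient, is suppressed by the ReLU.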

Challenges in Achieving Explainable AI

The field of Explainable AI faces the following challenges:

Balance between accuracy and interpretability: Models that are easier to understand are often less accurate, and vice versa. Balancing this trade-off is a primary problem in Explainable AI.

Complexity and scalability: As models become more intricate, providing clear, concise explanations becomes increasingly difficult. Explainable AI techniques must also scale to large datasets and complex models.

Ethical considerations: Explainable AI is important for producing fair outcomes that do not reinforce bias or unjust discrimination. Ethical perspectives must be embedded in the design and application of Explainable AI techniques.

Real-world Applications of Explainable AI in Deep Learning

As deep learning spreads, Explainable AI is becoming relevant to many industries:

Healthcare: Explainable AI techniques are integrated into medical imaging diagnosis so that they can support doctors' decision-making.

Finance: In banking and insurance, Explainable AI helps people understand credit-scoring and fraud-detection models, making these processes fairer and more transparent.

Autonomous vehicles: XAI supports self-driving cars, whose decisions must be explainable to improve safety and reliability.

Best Practices for Implementing Explainable AI

To properly incorporate XAI when developing deep learning models, follow these recommendations:

Employ the Right Tools and Technology: Leverage established XAI frameworks such as LIME, SHAP, and Grad-CAM, and build them into the design of the target models from the earliest stages.

Engage All Stakeholders: Involve all stakeholders, including developers, domain experts, and end users, so that the explanations meet the expectations of the people who will receive them.

Ongoing Assessment and Feedback: Keep generating explanations with your chosen XAI tools, and re-evaluate their accuracy and relevance as your models change.

Future Trends in Explainable AI

Developing Technologies in Explainable Artificial Intelligence

The field of XAI is still maturing, but many innovative technologies are emerging, such as user-centric intelligent interfaces that provide simple yet effective real-time explanations.

Automated Explanations: Explanation frameworks are increasingly being built around automated machine learning (AutoML) processes, which could reduce the need for manual customization.

Increased Compliance Regulations: As emerging policies increase scrutiny of AI applications, applying XAI will become a necessity, demanding further refinement of its techniques.

Conclusion

Explainable AI is essential for building trust, improving the interpretability of deep learning models, and making sound decisions. By using XAI techniques, developers and CXOs can ensure transparency, satisfy regulatory demands, and promote the adoption of AI technologies. As AI continues to grow, the increasing importance of XAI will help drive better decision-making across industries.
