Training a deep learning model well can be a daunting task, especially with a complex algorithm like YOLOv8. In this blog, we’ll go through practical tips for training a YOLOv8 model for your object detection needs.

YOLOv8 is a state-of-the-art deep learning model designed for object detection. It belongs to the YOLO (You Only Look Once) family of models, known for their speed and efficiency. It combines fast processing with good accuracy, which makes it well suited to real-time object detection tasks.

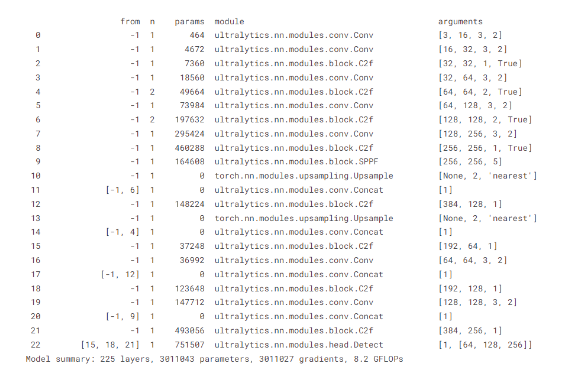

YOLOv8’s architecture processes images quickly while maintaining good detection performance.

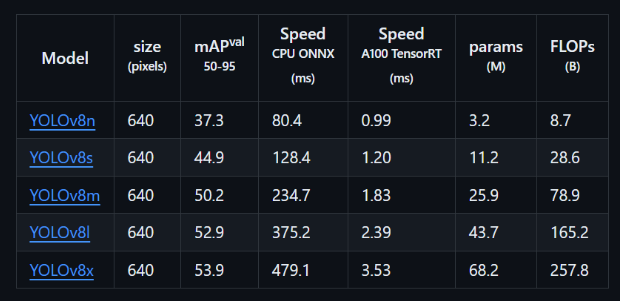

The YOLOv8 family offers a range of models (yolov8n, yolov8s, yolov8m, yolov8l, and yolov8x) that trade off speed against accuracy.

YOLOv8 models can be used for detection, segmentation, classification, pose estimation, and oriented object detection. Detection locates objects in images or videos. Segmentation divides an image into regions based on its content. Classification assigns an image to one of a set of categories. Pose estimation detects specific keypoints in an image or video, such as body points (head, toe, elbow, etc.). Oriented object detection goes a step further than regular object detection by adding an angle to each box so that objects are located more accurately.

By combining the power of image processing and the advanced capabilities of YOLOv8, you can unlock a wide range of possibilities in various domains, including autonomous vehicles, robotics, and security systems.

This guide offers key tips for navigating the YOLOv8 training process effectively.

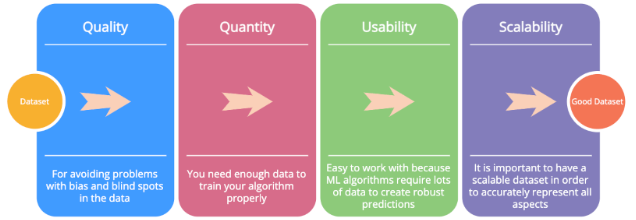

1. A Good Dataset

Ensure your training dataset reflects real-world variations in object sizes, positions, lighting, and backgrounds. This helps the model generalize better to unseen data.

Both the number of images in the dataset and their quality matter. If the objects to be detected are large, a relatively small number of good-quality images can give good results; if the objects are small, more images are needed.

For example, when I was working with a PPE safety dataset, there were two objects to detect: helmets and safety gloves. Helmets are larger than gloves, so a dataset of ~1000 to ~1500 helmet images gave good results, while even ~3500 images did not give accurate detections for safety gloves.

Intrigued by the possibilities of AI? Let’s chat! We’d love to answer your questions and show you how AI can transform your industry. Contact Us

2. High-Quality Labeling

Precise object boundary annotations are crucial. Invest in ensuring accurate labeling to avoid introducing errors into the model.

Every instance of each target object should be annotated in every image of the dataset. If an object is annotated in some images but left unannotated in others, the model receives conflicting signals and training suffers.

For example, when I was working on helmet detection, some images had their helmets annotated while others contained helmets that were not annotated. The model could not learn to recognize helmets reliably, and the results were very poor. Annotating the object consistently across all images avoids this.

Use the Roboflow platform to label the dataset, as it provides very handy tools to annotate the objects and is easy to use.

If you use Autodistill to generate the dataset, first upload the images to Roboflow, label the dataset with Autodistill, and then review the images and their labels to make sure the labeling quality is good.

Quality matters more than quantity, so focus on quality first.

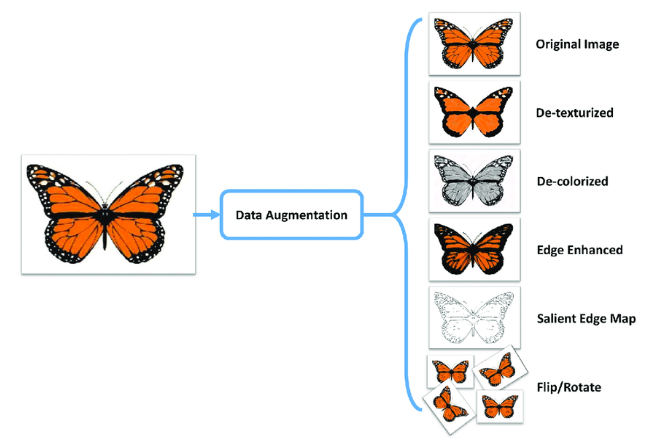

3. Data Augmentation

Apply data augmentation techniques (random cropping, flipping, and color jittering) to increase dataset size and improve model robustness to variations.

First make sure the images and labels in the dataset are of good quality, then augment the data to increase the dataset size as needed. Often we have only a small number of images to work with and the training results are poor; flipping, random cropping, and color jittering are ways to enlarge such a dataset.

Flipping images increases the size of the dataset, but each image should be flipped only once.

Random cropping should be used carefully, as it is not always helpful. For example, if an image contains one small object and the random crop does not include it, the cropped image is of no value.

Avoid color jittering unless it is really needed to enlarge the dataset, as it can degrade image quality and therefore the quality of the dataset as a whole.
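
When an image is flipped horizontally, its bounding-box labels have to be flipped as well. The following is a minimal sketch, assuming labels are stored in the YOLO text format (`class x_center y_center width height`, normalized to 0-1). The function name `flip_labels_horizontally` is hypothetical and only for illustration; labeling and training tools usually handle this step for you.

```python
def flip_labels_horizontally(label_lines):
    """Mirror YOLO-format boxes left-to-right.

    Each line is "class x_center y_center width height" with values
    normalized to [0, 1]. Only x_center changes under a horizontal flip.
    """
    flipped = []
    for line in label_lines:
        parts = line.split()
        if len(parts) != 5:
            continue  # skip blank or malformed lines
        cls, x, y, w, h = parts
        new_x = 1.0 - float(x)
        flipped.append(
            f"{cls} {new_x:.6f} {float(y):.6f} {float(w):.6f} {float(h):.6f}"
        )
    return flipped


labels = ["0 0.25 0.40 0.10 0.20"]
print(flip_labels_horizontally(labels))
```

Flipping the result a second time returns the original coordinates, which is why a second flip of the same image adds nothing new to the dataset.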

4. Model Variant Selection

YOLOv8 offers various sizes (yolov8n, yolov8s, yolov8m, yolov8l, yolov8x) with accuracy-speed trade-offs. Choose the variant that best suits your requirements and hardware.

yolov8n and yolov8s are the variants to use when you don’t have powerful hardware. They are also useful for checking initial performance and finding out what changes are needed before fine-tuning a larger model.

yolov8m needs good hardware (a GPU with at least 12 GB of memory) to train a custom model. It provides good detection accuracy; I used yolov8m for most of my models, and they worked very well.

yolov8l uses roughly twice the FLOPs of yolov8m and has almost 20 million more parameters, so I use yolov8l only when yolov8m does not give good results.

5. Batch Size Matters

Batch size significantly impacts training efficiency. Start with a reasonable size and adjust it based on your hardware limitations and observed performance.

The batch size is the number of images the model processes in one training iteration. Imagine we have a large dataset of images for training an object detector. The training process doesn’t feed the entire dataset at once. Instead, it iterates through the data in smaller batches, calculates the error (loss) for each batch, and updates the model’s weights based on that error.

A larger batch size trains faster and uses the hardware better but needs more memory. A smaller batch size needs less memory but trains more slowly.

The optimal batch size depends on several factors, such as the size of the dataset and the complexity of the model.
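
To make the relationship between dataset size, batch size, and weight updates concrete, here is a small sketch. The dataset size of 3500 images and the helper name `iterations_per_epoch` are illustrative assumptions.

```python
import math


def iterations_per_epoch(num_images, batch_size):
    """Number of batches (and weight updates) in one pass over the dataset."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    # The last batch may be smaller than batch_size, hence the ceiling.
    return math.ceil(num_images / batch_size)


for batch_size in (8, 16, 32):
    print(batch_size, iterations_per_epoch(3500, batch_size))
```

Doubling the batch size roughly halves the number of weight updates per epoch, while each update needs more GPU memory.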

6. Hyperparameter Fine-Tuning

While YOLOv8 provides default hyperparameters, fine-tuning them can further improve performance.

Try different optimizers such as Adam, SGD (Stochastic Gradient Descent), and RMSprop to see which one gives the best result.

With a higher learning rate, training can be faster, but accuracy may suffer. A learning rate that is too low slows training and may also fail to give good results. So a well-chosen learning rate is needed for good results.
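
One way to compare optimizers and learning rates is a small sweep of short training runs. The sketch below assumes the Ultralytics Python package, whose training call accepts `optimizer` and `lr0` (initial learning rate) arguments. The helper names `build_sweep` and `run_sweep`, the chosen learning rates, and the 30-epoch budget are assumptions for illustration.

```python
from itertools import product


def build_sweep(optimizers=("SGD", "Adam", "RMSProp"), learning_rates=(0.001, 0.01)):
    """Return one dict of training overrides per optimizer/learning-rate pair."""
    return [
        {"optimizer": opt, "lr0": lr}
        for opt, lr in product(optimizers, learning_rates)
    ]


def run_sweep(data_yaml, epochs=30):
    """Train one short run per configuration and return the run names.

    Requires `pip install ultralytics`; imported lazily so that
    build_sweep() can be used without the package installed.
    """
    from ultralytics import YOLO

    names = []
    for overrides in build_sweep():
        name = f"{overrides['optimizer']}_lr{overrides['lr0']}"
        model = YOLO("yolov8n.pt")  # fresh pretrained weights for every run
        model.train(data=data_yaml, epochs=epochs, name=name, **overrides)
        names.append(name)
    return names


for config in build_sweep():
    print(config)
```

Comparing the validation mAP of each run after the same number of epochs gives a fair picture of which combination suits the dataset.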

7. Training Progress Monitoring

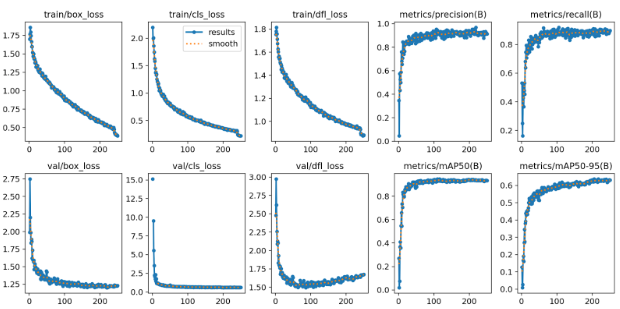

Regularly monitor training metrics like loss, mAP (mean Average Precision), and other relevant metrics to track progress and identify potential issues.

The losses should decrease over the epochs, and the metrics should increase.

After a certain number of epochs, when the losses and metrics appear to have saturated, further epochs will not improve the accuracy of the model, so we should use early stopping at that point.

In the above figure, the losses are decreasing over time and the performance metrics are increasing, which is a good sign.

But after 100-120 epochs, the losses and metrics appear to saturate, so training beyond that point will not improve the accuracy of the model.
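
Besides reading the plots, the per-epoch numbers can be checked directly. Ultralytics writes a results.csv file into the run folder; the sketch below finds the epoch with the best mAP. The column name `metrics/mAP50-95(B)` and the sample values are assumptions, so check the header of your own file. The function name `best_epoch` is hypothetical.

```python
import csv
import io


def best_epoch(csv_text, metric="metrics/mAP50-95(B)"):
    """Return (epoch, value) for the row with the highest `metric`."""
    reader = csv.DictReader(io.StringIO(csv_text))
    # Some versions pad the header and cells with spaces, so strip both.
    rows = [{k.strip(): v.strip() for k, v in row.items()} for row in reader]
    best = max(rows, key=lambda r: float(r[metric]))
    return int(float(best["epoch"])), float(best[metric])


# Made-up sample in the assumed results.csv layout.
sample = """epoch,train/box_loss,metrics/mAP50-95(B)
1,1.52,0.21
2,1.31,0.34
3,1.20,0.41
4,1.15,0.40
"""

print(best_epoch(sample))
```

For a real run, pass the file contents instead of the sample, for example with `pathlib.Path(...).read_text()` on the results.csv of your run folder.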

8. Saving the Model at Regular Intervals to Test Accuracy

Save the model at regular epoch intervals so that progress can be checked by making predictions with the saved checkpoints.

For example, saving the model every 10 epochs and testing it against our requirements can save time and resources. Often the dataset is not good enough, so the trained model will not give good results; checking every 10 epochs shows early on that the model is not training well, so we can prepare a better dataset first and then train again.

When training a custom YOLOv8 model, the ‘save_period’ argument sets how often the training weights are saved.
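
A minimal sketch of periodic checkpointing with the Ultralytics Python package is shown below. The `save_period` argument is part of its training settings; the helper names, the `yolov8m.pt` starting weights, and the values of 100 epochs and a period of 10 are assumptions for illustration.

```python
def build_train_args(data_yaml, epochs=100, save_period=10):
    """Collect training arguments, validating the checkpoint interval."""
    if save_period != -1 and save_period < 1:
        raise ValueError("save_period must be a positive number of epochs, or -1 to disable")
    return {"data": data_yaml, "epochs": epochs, "save_period": save_period}


def train_with_checkpoints(data_yaml):
    """Start training with periodic checkpoints (requires `pip install ultralytics`)."""
    from ultralytics import YOLO  # lazy import: only needed when training

    model = YOLO("yolov8m.pt")
    return model.train(**build_train_args(data_yaml))


print(build_train_args("data.yaml"))
```

The intermediate weights are written next to the usual best and last weights in the run folder, so each one can be loaded and tested on sample images while training continues.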

9. Early Stopping

Implement early stopping to prevent overfitting. Stop training if validation performance doesn’t improve for a certain number of epochs.

When the losses and performance metrics saturate, extra training does not improve model accuracy, so it is better to stop training. This is called early stopping.

In YOLOv8 custom training, the ‘patience’ argument sets the number of epochs to wait before early stopping. By default patience=100, which means training stops if there is no improvement for 100 epochs.
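
The idea behind patience can be shown with a few lines of plain Python. This is a simplified sketch of the logic, not the actual Ultralytics implementation; the function name `stop_epoch` and the metric values are made up for illustration.

```python
def stop_epoch(metric_history, patience):
    """Return the 1-based epoch at which training would stop, or None.

    Training stops once `patience` epochs have passed without the
    validation metric beating its previous best value.
    """
    best = float("-inf")
    best_epoch = 0
    for epoch, value in enumerate(metric_history, start=1):
        if value > best:
            best, best_epoch = value, epoch
        elif epoch - best_epoch >= patience:
            return epoch
    return None


history = [0.30, 0.45, 0.50, 0.49, 0.50, 0.48, 0.47]
print(stop_epoch(history, patience=3))
print(stop_epoch(history, patience=100))
```

In an actual training run, the same behavior is requested by passing `patience` to the training call, for example `model.train(data="data.yaml", epochs=300, patience=20)`, where the file name and numbers are placeholders.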

10. Transfer Learning

Fine-tune a pre-trained YOLOv8 model on your custom dataset for faster convergence and potentially better performance.

For example, the pretrained yolov8n weights can be used as the starting point for training a custom model.

The benefits of transfer learning with YOLOv8 are faster training, improved performance (pretrained models already have a good understanding of object features), and less data needed for good results on small datasets.
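
A minimal fine-tuning sketch with the Ultralytics Python package follows. Loading a `.pt` file starts from pretrained weights, while a `.yaml` file builds the same architecture from scratch. The helper names `starting_weights` and `finetune`, the dataset file passed in, and the values of 50 epochs and a 640-pixel image size are assumptions for illustration.

```python
def starting_weights(variant="n", pretrained=True):
    """Return the model file to start from.

    A ".pt" file loads pretrained weights (transfer learning); a ".yaml"
    file builds the same architecture with randomly initialized weights.
    """
    if variant not in ("n", "s", "m", "l", "x"):
        raise ValueError(f"unknown YOLOv8 variant: {variant!r}")
    suffix = "pt" if pretrained else "yaml"
    return f"yolov8{variant}.{suffix}"


def finetune(data_yaml, variant="n", epochs=50, imgsz=640):
    """Fine-tune a pretrained model on a custom dataset.

    Requires `pip install ultralytics`; the pretrained weights are
    downloaded automatically on first use.
    """
    from ultralytics import YOLO  # lazy import: only needed when training

    model = YOLO(starting_weights(variant))
    model.train(data=data_yaml, epochs=epochs, imgsz=imgsz)
    return model


print(starting_weights("n"))
```

Calling `finetune("data.yaml")` with your own dataset description file would start training from the yolov8n weights.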

11. Hardware Considerations

Ensure the hardware (GPU or TPU) has sufficient memory and processing power to handle the chosen model size and training requirements.

A GPU with at least 16 GB of memory is recommended for training custom YOLOv8 models. Training a custom model takes up to 8 GB of GPU memory with yolov8n and yolov8s, up to 10 GB with yolov8m, and up to 13 GB with yolov8l.

The more CUDA cores a GPU has, the faster training will be. The NVIDIA T4 16 GB has ~2500 CUDA cores and the NVIDIA P100 16 GB has ~3500 CUDA cores, so training the same custom YOLOv8 model takes less time on the P100 than on the T4, but the P100 is the more expensive GPU.

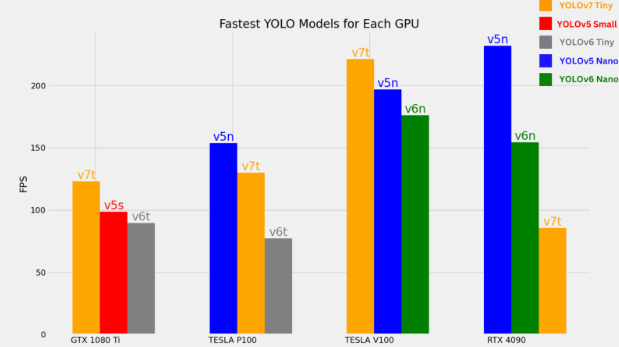

In the above figure, we can see the FPS of different YOLO models on different GPUs. The RTX 4090 is a very powerful GPU, so it achieves higher FPS than the other GPUs.

12. Class Imbalance Handling

If your dataset has imbalanced classes (some objects appear much less frequently), consider oversampling or undersampling techniques to address it.

When a model must detect both a positive and a negative class for an object, the dataset should contain a balanced number of labels for both.

For example, when working with the gloves and no-gloves classes, we had fewer labels for no gloves, so the model was not able to detect no gloves.

After balancing the two classes, the results were satisfactory.
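
Before rebalancing, it helps to count how many labeled instances each class has. The sketch below assumes YOLO-format label lines, where the first value on each line is the class ID. The helper names, the sample labels, and the mapping of class 0 to gloves and class 1 to no gloves are hypothetical.

```python
from collections import Counter


def count_instances(label_files):
    """Count labeled boxes per class ID.

    `label_files` maps a label file name to its list of YOLO-format lines.
    """
    counts = Counter()
    for lines in label_files.values():
        for line in lines:
            parts = line.split()
            if parts:
                counts[int(parts[0])] += 1
    return counts


def oversample_factors(counts):
    """How many times each class would need repeating to match the largest class."""
    largest = max(counts.values())
    return {cls: round(largest / n, 2) for cls, n in counts.items()}


# Made-up labels: class 0 = gloves, class 1 = no gloves.
labels = {
    "a.txt": ["0 0.5 0.5 0.1 0.1", "0 0.2 0.3 0.1 0.1"],
    "b.txt": ["0 0.4 0.4 0.1 0.1", "1 0.7 0.6 0.1 0.1"],
    "c.txt": ["0 0.6 0.5 0.1 0.1"],
}
counts = count_instances(labels)
print(dict(counts))
print(oversample_factors(counts))
```

A large factor for a class signals that more images of that class should be collected or that its existing images should be oversampled.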

13. Model Evaluation and Analysis

Evaluate the trained model on unseen data using relevant metrics like mAP, precision, and recall to assess its generalizability and performance in real-world scenarios.

A model often shows good accuracy during training because it is overfitting the training data, and then underperforms in the application. So we should check the accuracy of the model on separate test data. If the model performs well on test data that reflects real conditions, it is much more likely to perform well in real-world scenarios.
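
Precision and recall follow directly from the counts of correct and incorrect detections on the test set. The sketch below shows the arithmetic and, as an assumption about your setup, how the Ultralytics Python package can report mAP on a test split. The helper names, the counts, and the weights path are placeholders.

```python
def precision_recall(tp, fp, fn):
    """Compute precision and recall from detection counts.

    tp: correct detections, fp: false detections, fn: missed objects.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def evaluate_on_test_split(weights_path, data_yaml):
    """Return (mAP50, mAP50-95) on the test split (requires `pip install ultralytics`)."""
    from ultralytics import YOLO  # lazy import: only needed for evaluation

    model = YOLO(weights_path)
    metrics = model.val(data=data_yaml, split="test")
    return metrics.box.map50, metrics.box.map


precision, recall = precision_recall(tp=80, fp=20, fn=40)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

A model with high precision but low recall misses many objects, while the reverse means many false detections; looking at both alongside mAP gives a fuller picture than a single accuracy number.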
