Mastering YOLO and Its Importance

YOLO (You Only Look Once) is a seminal object detection algorithm in computer vision, introduced by Joseph Redmon et al. Its core innovation is performing object detection in a single neural network pass, unlike earlier methods that required multiple stages or passes. This allows YOLO to run in real time, making it valuable for applications such as autonomous driving, surveillance, and robotics.

Key Innovations:

- Single-Pass Detection: YOLO detects objects in a single forward pass of the network, resulting in fast processing times.

- Unified Architecture: Combines object classification and localization in one model, simplifying the pipeline and reducing computational overhead.

YOLO Versions and Their Evolution

YOLOv1 (2016)

Overview:

- Authors: Joseph Redmon, Santosh Divvala, Ross Girshick, and Ali Farhadi.

- Paper: You Only Look Once: Unified, Real-Time Object Detection.

- Architecture: Utilizes a single convolutional network to predict bounding boxes and class probabilities directly from the full image.

Advantages:

- Real-Time Speed: Achieves detection speeds up to 45 frames per second (FPS) on a GPU.

- Simplicity: The network architecture is straightforward and easy to implement.

Disadvantages:

- Localization Accuracy: Struggles with precise bounding box localization due to the coarse grid cells.

- Small-Object Detection: Less effective at detecting small objects due to the fixed grid size.
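To make the grid limitation concrete, here is a minimal sketch, assuming the settings reported in the YOLOv1 paper for Pascal VOC (a 7 × 7 grid, 2 boxes per cell, 20 classes). The function names are illustrative and not part of any YOLO codebase.

```python
def yolo_v1_output_shape(S=7, B=2, C=20):
    """Each cell predicts B boxes (x, y, w, h, confidence) plus C class scores."""
    return (S, S, B * 5 + C)


def responsible_cell(x_center, y_center, S=7):
    """Return (row, col) of the grid cell that contains a normalized box center."""
    col = min(int(x_center * S), S - 1)  # clamp so a center at 1.0 stays in range
    row = min(int(y_center * S), S - 1)
    return row, col


if __name__ == "__main__":
    print(yolo_v1_output_shape())        # output tensor shape for the defaults
    print(responsible_cell(0.50, 0.50))  # cell for the first object
    print(responsible_cell(0.52, 0.51))  # a nearby object lands in the same cell
```

Two nearby objects whose centers fall in the same cell compete for that cell's single set of class scores, which is one reason small, clustered objects are hard for YOLOv1.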

YOLOv2 (2016) – YOLO9000

Overview:

- Authors: Joseph Redmon and Ali Farhadi.

- Paper: YOLO9000: Better, Faster, Stronger.

- Architecture: Introduces anchor boxes, batch normalization, and a new backbone network (Darknet-19).

Advantages:

- Improved Detection: Better performance with anchor boxes for more accurate bounding box predictions.

- Large Number of Classes: Can detect over 9000 object categories by combining COCO and ImageNet datasets.

Disadvantages:

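- Complexity Increase: Anchor boxes, multi-scale training, and the joint detection and classification scheme make the model and its training procedure more involved than YOLOv1.

- Speed Trade-Off: Speed and accuracy depend on input resolution. The paper reports 67 FPS at 76.8 mAP and 40 FPS at 78.6 mAP on VOC 2007, so the highest-accuracy setting falls below YOLOv1's 45 FPS.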
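The anchor-box prediction can be sketched as follows, assuming the decoding equations given in the YOLO9000 paper: the network outputs offsets (tx, ty, tw, th) that are combined with the grid cell position and an anchor (prior) size. Variable names are illustrative, and all values are in grid-cell units.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def decode_box(tx, ty, tw, th, cell_x, cell_y, anchor_w, anchor_h):
    """Turn raw network outputs into a box center and size (grid units)."""
    bx = cell_x + sigmoid(tx)     # center stays inside the predicting cell
    by = cell_y + sigmoid(ty)
    bw = anchor_w * math.exp(tw)  # anchor size scaled by a learned factor
    bh = anchor_h * math.exp(th)
    return bx, by, bw, bh


if __name__ == "__main__":
    # Zero offsets give the cell center and the unmodified anchor size.
    print(decode_box(0.0, 0.0, 0.0, 0.0, cell_x=4, cell_y=5, anchor_w=2.0, anchor_h=3.0))
```

Constraining the center with a sigmoid keeps each prediction tied to its own cell, which the paper credits with making training more stable.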


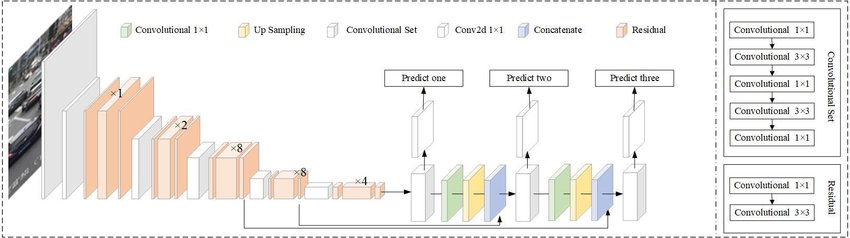

YOLOv3 (2018)

Overview:

- Authors: Joseph Redmon and Ali Farhadi.

- Paper: YOLOv3: An Incremental Improvement.

- Architecture: Uses Darknet-53 as the backbone, incorporating residual connections and multi-scale predictions.

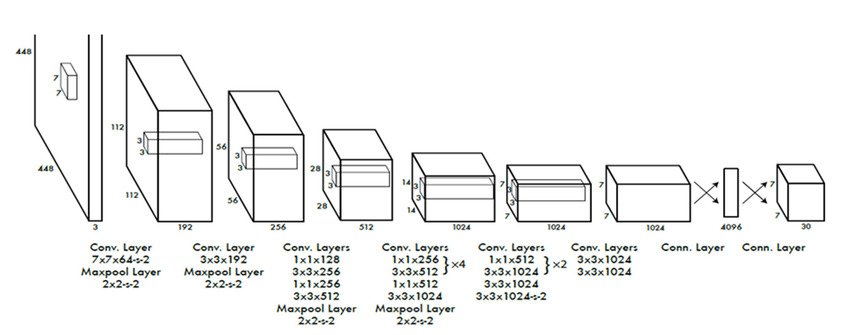

Image by ResearchGate

Advantages:

- Multi-Scale Detection: Improved detection of objects at various scales due to predictions from multiple layers.

- Enhanced Accuracy: Better performance in terms of object classification and localization.

Disadvantages:

- Model Size: Larger model size compared to YOLOv2, leading to increased memory usage.

- Inference Speed: While faster than many older methods, YOLOv3 is slower compared to YOLOv4 and YOLOv5.
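As a rough illustration of multi-scale prediction, the sketch below assumes the commonly used YOLOv3 setup: a 416 × 416 input, three output scales with strides 32, 16, and 8, and 3 anchors per scale. The function names are illustrative.

```python
def yolov3_grid_sizes(input_size=416, strides=(32, 16, 8)):
    """Grid width/height at each detection scale."""
    return [input_size // s for s in strides]


def total_predictions(input_size=416, anchors_per_scale=3):
    """Number of candidate boxes produced before confidence filtering."""
    return sum(g * g * anchors_per_scale for g in yolov3_grid_sizes(input_size))


if __name__ == "__main__":
    print(yolov3_grid_sizes())  # coarse to fine grids
    print(total_predictions())  # candidate boxes across all three scales
```

The finest grid has far more cells than the coarsest one, which is what gives small objects a better chance of being assigned their own prediction.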

YOLOv4 (2020)

Overview:

- Authors: Alexey Bochkovskiy, Chien-Yao Wang, and Hong-Yuan Mark Liao.

- Paper: YOLOv4: Optimal Speed and Accuracy of Object Detection.

- Architecture: Incorporates CSPDarknet53, PANet, and additional techniques such as Mish activation function and CIoU loss.

Advantages:

- State-of-the-Art Performance: Achieves high accuracy and speed, outperforming previous versions on benchmarks like COCO.

- Optimizations: Includes a variety of optimizations such as CSPNet and PANet, enhancing feature extraction and aggregation.

Disadvantages:

- Complexity: More complex architecture with increased training and inference requirements.

- Hardware Demands: Requires more computational power and memory, particularly for training.
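The CIoU loss mentioned above can be sketched in plain Python. This is a minimal version based on the published CIoU definition (IoU minus a center-distance penalty and an aspect-ratio penalty); it assumes boxes in (x1, y1, x2, y2) form with positive width and height, and it is not taken from the YOLOv4 codebase.

```python
import math


def ciou(box_a, box_b):
    """Complete IoU between two (x1, y1, x2, y2) boxes with positive area."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    wa, ha = ax2 - ax1, ay2 - ay1
    wb, hb = bx2 - bx1, by2 - by1

    # Plain IoU
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    iou = inter / (wa * ha + wb * hb - inter)

    # Squared distance between centers, and squared diagonal of the enclosing box
    rho2 = ((ax1 + ax2) / 2 - (bx1 + bx2) / 2) ** 2 + ((ay1 + ay2) / 2 - (by1 + by2) / 2) ** 2
    c2 = (max(ax2, bx2) - min(ax1, bx1)) ** 2 + (max(ay2, by2) - min(ay1, by1)) ** 2

    # Aspect-ratio consistency term and its weight
    v = (4 / math.pi ** 2) * (math.atan(wb / hb) - math.atan(wa / ha)) ** 2
    alpha = v / ((1 - iou) + v) if v > 0 else 0.0
    return iou - rho2 / c2 - alpha * v


def ciou_loss(box_a, box_b):
    return 1.0 - ciou(box_a, box_b)
```

Unlike plain IoU, the center-distance term still provides a useful signal when the predicted and ground-truth boxes do not overlap at all.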

YOLOv5 (2020) – by Ultralytics

Overview:

- Authors: Ultralytics (not affiliated with original YOLO authors).

- Paper: YOLOv5 GitHub Repository (not a formal paper, but detailed documentation available).

- Architecture: Offers multiple model sizes (nano, small, medium, large, and extra-large) for flexibility, implemented in PyTorch.

Advantages:

- User-Friendly: Provides easy integration and use with a well-documented PyTorch implementation.

- Versatility: Includes several model sizes for different application needs and hardware constraints.

Disadvantages:

- Community Support: Not developed by the original YOLO team, which may impact alignment with cutting-edge research.

- Performance Variability: While highly effective, it may not always match the latest advancements of official YOLO versions.

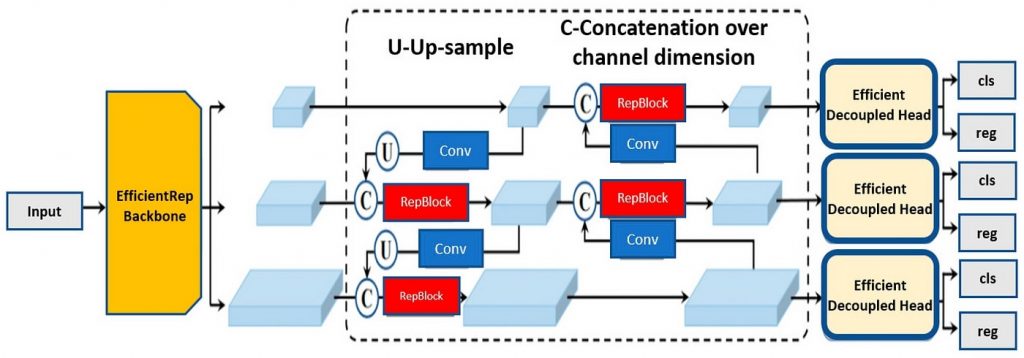

YOLOv6 (2022)

Overview:

- Authors: Meituan.

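- Paper: YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications.

- Architecture: Uses a re-parameterizable EfficientRep backbone, a Rep-PAN neck, and a decoupled detection head, designed for hardware-friendly inference.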

Advantages:

- Efficiency: Achieves high accuracy while maintaining efficient computational requirements.

- Optimized Design: Includes design optimizations for better performance in real-world applications.

Disadvantages:

- Newer Model: As a relatively new release, it may have less community support and fewer resources.

- Integration Efforts: May require additional effort to integrate into existing systems compared to more established versions.

YOLOv7 (2022)

Overview:

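- Authors: Chien-Yao Wang, Alexey Bochkovskiy, and Hong-Yuan Mark Liao (the YOLOv4 authors; not the original YOLO team).

- Paper: YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors.

- Architecture: Introduces the E-ELAN (extended efficient layer aggregation network) block, planned re-parameterized convolution, and compound model scaling. It is an independent design rather than a successor to YOLOv6.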

Advantages:

- State-of-the-Art Performance: Provides cutting-edge performance with enhancements in robustness and detection accuracy.

- Advanced Techniques: Utilizes the latest advancements in object detection technologies.

Disadvantages:

- Increased Complexity: More complex architecture and higher computational demands.

- Hardware Requirements: Requires substantial hardware resources for optimal performance.



Setting Up the Environment for YOLO

Prerequisites

- Hardware: High-performance GPU (e.g., NVIDIA RTX 3080 or better) for training; sufficient RAM (at least 16 GB) and storage (SSD preferred).

- Software: Operating System (Windows or Ubuntu), Python 3.x, CUDA (for GPU acceleration), and cuDNN (for optimized performance).

Installing Necessary Libraries

- OpenCV: Install via pip install opencv-python for image processing and computer vision tasks.

- Darknet: Clone the Darknet repository and compile it using CMake and a suitable compiler.

- Other Dependencies: Install additional Python libraries such as numpy, scipy, and matplotlib as needed.
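After installing, a quick check like the one below can confirm that the Python packages are importable. It is a minimal sketch using only the standard library; `cv2` is the import name of the opencv-python package, and `check_environment` is an illustrative helper, not part of any YOLO tooling.

```python
import importlib.util
import sys


def check_environment(modules=("cv2", "numpy", "scipy", "matplotlib")):
    """Return a dict mapping each requirement to True if it is available."""
    report = {"python3": sys.version_info >= (3,)}
    for name in modules:
        # find_spec returns None when a top-level module cannot be located
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for requirement, ok in check_environment().items():
        print(f"{requirement}: {'ok' if ok else 'MISSING'}")
```

This only verifies that the packages can be found; it does not check CUDA or cuDNN, which need to be validated separately.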

Training YOLO: Step-by-Step Guide

Data Collection and Annotation

- Gathering Datasets: Use datasets relevant to your application. Popular datasets include COCO, Pascal VOC, and custom datasets.

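- Annotation Tools: Use LabelImg or similar tools to annotate images. Annotations should be in YOLO format: one text file per image, with one line per object containing the class index followed by the box center x, center y, width, and height, each normalized to the range 0 to 1.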
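If your annotations are stored as pixel corner coordinates, they can be converted with a helper like the one below. This is a minimal sketch assuming the common Darknet label layout of one line per object, `class_id x_center y_center width height`, with all box values normalized by the image size; the function name is illustrative.

```python
def to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space corner box into one YOLO-format label line."""
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


if __name__ == "__main__":
    # A 200 x 200 pixel box in a 640 x 480 image, class index 0
    print(to_yolo_line(0, 100, 200, 300, 400, img_w=640, img_h=480))
```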

Configuring YOLO for Training

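- Configuration Files: Modify the .cfg file for your specific YOLO version to set parameters such as the number of classes in each [yolo] layer and the filters in the convolutional layer immediately before it. For YOLOv3, filters = (classes + 5) × 3.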

- Architecture Setup: Choose the appropriate YOLO version and adjust the network architecture to fit your training needs.
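The values to put in the .cfg file can be computed rather than guessed. The sketch below assumes a Darknet YOLOv3-style config with 3 anchors per detection scale. The `max_batches` and `steps` rule follows the guideline in the AlexeyAB Darknet fork's README (classes × 2000, but no less than 6000 or the number of training images); treat it as a starting point, not a requirement. Function names are illustrative.

```python
def yolo_layer_filters(num_classes, anchors_per_scale=3):
    """filters= for the convolutional layer just before each [yolo] layer.

    Each anchor predicts 4 box values, 1 objectness score, and one score per class.
    """
    return (num_classes + 5) * anchors_per_scale


def suggested_schedule(num_classes, num_train_images):
    """Guideline values for max_batches and steps (80% and 90% of max_batches)."""
    max_batches = max(num_classes * 2000, num_train_images, 6000)
    steps = (max_batches * 8 // 10, max_batches * 9 // 10)
    return max_batches, steps


if __name__ == "__main__":
    print(yolo_layer_filters(80))      # filters for the 80-class COCO config
    print(suggested_schedule(2, 500))  # small custom dataset with 2 classes
```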

Initiating the Training Process

- Start Training: Use training commands like ./darknet detector train cfg/coco.data cfg/yolov3.cfg darknet53.conv.74 for YOLOv3.

- Monitor Logs: Review training logs for metrics like loss and accuracy. Adjust parameters based on the training progress.
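One simple way to act on the training log is to smooth the loss values and check whether they have stopped improving. The sketch below assumes you have already extracted a list of per-iteration loss values from the log; the window size and improvement threshold are arbitrary illustrative choices.

```python
def moving_average(values, window=3):
    """Trailing moving average; early entries use however many values exist."""
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


def has_plateaued(losses, window=3, min_improvement=0.01):
    """True if the smoothed loss improved by less than min_improvement
    over the last `window` iterations."""
    if len(losses) < 2 * window:
        return False  # not enough history to judge
    smooth = moving_average(losses, window)
    return smooth[-1 - window] - smooth[-1] < min_improvement


if __name__ == "__main__":
    print(has_plateaued([5.0, 4.0, 3.0, 2.0, 1.0, 0.5]))  # still improving
    print(has_plateaued([1.0, 1.0, 1.0, 1.0, 1.0, 1.0]))  # flat
```

A plateau is a cue to consider lowering the learning rate or stopping training, not proof that the model has converged.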

Validating YOLO Models

Understanding Validation Metrics

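- mAP (mean Average Precision): For each class, average precision (AP) is the area under the precision-recall curve, where a detection counts as correct if its IoU with a ground-truth box meets a threshold (commonly 0.5). mAP is the mean of AP across all classes and is the key metric for evaluating object detection performance.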
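The metric can be sketched in a few lines. This minimal version assumes detections for one class are already sorted by descending confidence and flagged as true or false positives (for example, by matching against ground truth at an IoU threshold of 0.5). It uses all-point interpolation of the precision-recall curve; benchmark toolkits differ in the exact interpolation, so numbers may not match theirs exactly.

```python
def average_precision(is_true_positive, num_ground_truth):
    """Area under the interpolated precision-recall curve for one class."""
    tp = fp = 0
    precisions, recalls = [], []
    for flag in is_true_positive:
        if flag:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)

    # Interpolate: precision at each point becomes the best precision to its right
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap):
    """mAP is the unweighted mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)
```

For example, three detections flagged `[True, False, True]` against two ground-truth objects reach full recall only at the third detection, so the false positive in the middle lowers the AP below 1.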

Running Validation Tests

- Execute Tests: Validate your model on a separate test dataset to ensure it generalizes well to unseen data.

- Interpret Results: Analyze performance metrics and adjust training parameters if needed to improve accuracy.

Deploying YOLO in Production

Choosing the Right Deployment Platform

- Cloud Services: Utilize cloud platforms like AWS, Azure, or Google Cloud for scalable and flexible deployment options.

- Edge Devices: Deploy on edge devices such as NVIDIA Jetson for real-time applications where on-device processing is crucial.

Integrating YOLO with Applications

- API Integration: Integrate YOLO models into applications using APIs or directly embedding the model into your application stack.

- Scalability and Latency: Optimize deployment for scalability and low latency to meet application performance requirements.
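Before optimizing for latency, it helps to measure it on the target hardware. The helper below is a minimal sketch using only the standard library; `fn` stands in for whatever callable runs one inference in your application (a hypothetical placeholder here), and the run counts are arbitrary.

```python
import time


def measure_latency(fn, runs=50, warmup=5):
    """Time repeated calls to fn and report mean, median, and p95 in milliseconds."""
    for _ in range(warmup):
        fn()  # warm-up calls are not timed (caches, lazy initialization)

    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)

    samples.sort()
    p95_index = min(len(samples) - 1, int(0.95 * len(samples)))
    return {
        "mean_ms": sum(samples) / len(samples),
        "p50_ms": samples[len(samples) // 2],
        "p95_ms": samples[p95_index],
    }


if __name__ == "__main__":
    # Stand-in workload; replace with a call that runs your model on one image.
    print(measure_latency(lambda: sum(range(100_000))))
```

Tail latency (p95) is often more relevant than the mean for real-time applications, since occasional slow frames are what users notice.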


Optimizing YOLO for Better Performance

Hyperparameter Tuning

- Adjust Parameters: Experiment with hyperparameters such as learning rate, batch size, and momentum to enhance model performance.
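A simple way to run such experiments is random search. The sketch below assumes you supply an `evaluate` function (hypothetical here) that trains or fine-tunes with the given parameters and returns a validation score such as mAP; the search ranges are illustrative, not recommendations.

```python
import random


def random_search(evaluate, n_trials=20, seed=0):
    """Sample hyperparameters at random and keep the best-scoring set."""
    rng = random.Random(seed)  # fixed seed makes the search reproducible
    best_score, best_params = float("-inf"), None
    for _ in range(n_trials):
        params = {
            "learning_rate": 10 ** rng.uniform(-4, -2),  # log-uniform sampling
            "batch_size": rng.choice([16, 32, 64]),
            "momentum": rng.uniform(0.8, 0.99),
        }
        score = evaluate(params)
        if score > best_score:
            best_score, best_params = score, params
    return best_params, best_score


if __name__ == "__main__":
    # Toy objective standing in for a real training run.
    toy = lambda p: -abs(p["learning_rate"] - 0.001)
    print(random_search(toy))
```

Sampling the learning rate on a log scale spreads trials evenly across orders of magnitude, which usually matters more than fine steps within one.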

Model Pruning and Quantization

- Pruning: Reduce model size by removing less important weights and neurons.

- Quantization: Convert model weights to lower precision (e.g., INT8) to speed up inference and reduce memory usage.
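Both ideas can be illustrated on a flat list of weights. This is a minimal sketch of magnitude-based pruning and symmetric INT8 quantization, assuming a single scale for the whole list; production toolchains apply these per layer or per channel and usually calibrate on real data.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    w = [0.5, -0.1, 0.05, -0.9]
    print(prune_by_magnitude(w, sparsity=0.5))
    q, s = quantize_int8(w)
    print(q, dequantize(q, s))
```

The round trip through `dequantize` shows the precision lost to quantization: each value is off by at most half the scale.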

Common Challenges and Solutions

- Overfitting: Mitigate overfitting by using data augmentation techniques and regularization methods like dropout.

- Performance Variability: Ensure a diverse and high-quality dataset to address variability in detection performance.

- Deployment Issues: Test thoroughly on target hardware and optimize for resource constraints to address deployment challenges.
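As one example of the data augmentation mentioned above, a horizontal flip must be applied to the labels as well as the image. The sketch below assumes labels in normalized YOLO form `(class_id, x_center, y_center, width, height)` and covers only the label side; flipping the image itself would be done with your image library.

```python
def hflip_yolo_labels(labels):
    """Mirror normalized YOLO boxes left to right.

    Only x_center changes; y_center, width, and height are unaffected.
    """
    return [(c, 1.0 - x, y, w, h) for (c, x, y, w, h) in labels]


if __name__ == "__main__":
    print(hflip_yolo_labels([(0, 0.25, 0.5, 0.2, 0.4)]))
```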

Future of YOLO in Computer Vision

- Advancements: Keep an eye on ongoing research and updates to YOLO algorithms for the latest improvements and techniques.

- Emerging Trends: Explore new application areas, including autonomous driving, augmented reality, and advanced surveillance systems.

Conclusion

YOLO’s impact on object detection has been transformative, combining speed and accuracy in a unified framework. By understanding the various YOLO versions and their respective advantages and limitations, developers can leverage YOLO’s capabilities to build advanced computer vision applications.
