![Importance of securing APIs](https://clarion.ai/wp-content/uploads/2024/08/11.png)

A deep learning API is a specialized interface that allows an application to interact with machine learning models and algorithms. These APIs abstract away the underlying models, which makes AI capabilities easier to use for developers who may not have deep knowledge of AI or data science. Through such APIs, developers can add AI functionality to their apps, e.g., image recognition, natural language processing, and predictive analytics. Because so much depends on these interfaces, it is important to understand how to secure a deep learning API.

Deep Learning APIs form the backbone of many applications in today’s data-driven world. For example, they power recommendations in e-commerce, personalize content delivery on media streaming platforms, and enable new user experiences through voice and image recognition in mobile apps. As companies rely more heavily on AI to compete, demand for user-friendly, scalable, and efficient Deep Learning APIs will only increase.

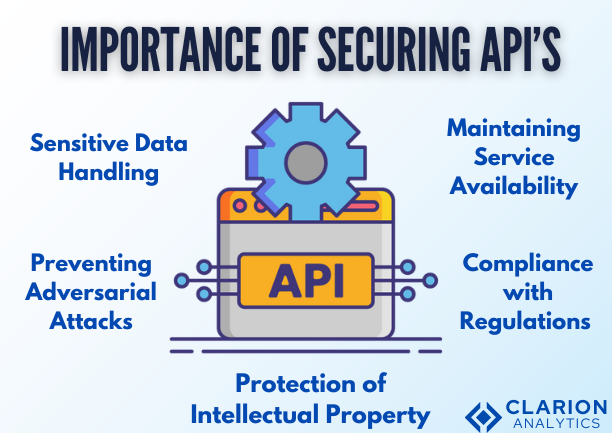

Importance of Securing Deep Learning APIs

Securing Deep Learning APIs is essential because they process sensitive data and expose valuable, costly models to the public. Here’s why security is paramount:

- Sensitive Data Handling: Many Deep Learning APIs process sensitive data, which broadly falls into four categories: personal, financial, health, and business. A breach of such data can lead to legal proceedings or even criminal penalties.

- Protection of Intellectual Property: The models that deep learning APIs give access to are valuable intellectual property (IP). In most cases, these models result from large investments in research, data collection, and training.

- Preventing Adversarial Attacks: Deep learning APIs are particularly susceptible to adversarial attacks, in which malicious actors deliberately modify input data so that a machine learning model predicts the wrong outcome.

- Maintaining Service Availability: APIs are usually exposed on the Internet, which leaves them open to Distributed Denial of Service (DDoS) attacks, in which cybercriminals bombard the API with a huge amount of traffic in order to overload it and force it to crash.

Understanding Deep Learning API Security

Deep Learning APIs are tools that expose AI capabilities to applications. They remove the need for users to understand the underlying deep learning models, enabling developers to use AI technology without a thorough grounding in machine learning or data science.

Deep Learning APIs typically offer functionality such as:

- Model Inference: The API processes the input data (for instance images, text, or audio) and returns predictions or classification results from a pre-trained deep learning model.

- Data Processing: APIs may include functions for pre-processing input data, such as normalization, resizing, or encoding, so that the input is in the right format for the model.

- Interpretation of Results: Certain deep learning APIs supply artifacts such as heat maps or the features most important to the model, which help investigate and explain the model’s predictions.

- Scalability and Deployment: Deep Learning APIs routinely come with features for deploying models at scale, so that the system can process a large number of requests with low latency, supported by load balancing, caching, and parallel processing.

Security Risks Associated with Deep Learning APIs

Deep Learning APIs, despite the ample computing power at their disposal, face a range of security threats, from hacking to spying on the data they process. These threats are serious and can have severe consequences if they are not handled properly:

- Data Breaches: One of the most common threats is unauthorized access to the data that the AI is processing. It can include people’s personal information, business-related data, or other confidential information.

- Model Tampering: The deep learning models themselves can be targets for sabotage. Attackers can alter either the model’s parameters or the training data so that the model starts to give incorrect predictions. This can be extremely risky in critical applications such as medical diagnosis or self-driving cars.

- Adversarial Attacks: These are sophisticated attacks in which hostile parties subtly manipulate the model’s input with the intention of deceiving it into making wrong predictions.

Best Practices for Securing Deep Learning APIs

1)Authentication and Authorization

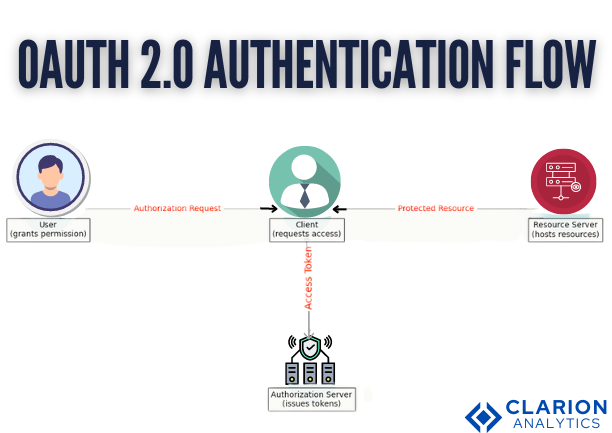

Implementing OAuth 2.0 and deep learning API keys

- Let’s discuss both methods, as both are important. Authentication is the first line of defense for securing Deep Learning APIs. OAuth 2.0 is one of the most widely trusted authorization frameworks for protecting APIs. It lets a client access resources on a user’s behalf without exposing the user’s credentials. It works through access tokens, which are issued only after the user authenticates and grants access.

- API keys are simpler, but they too should be created carefully and used wisely. They identify which project, user, or app is making the call. Nonetheless, API keys alone are a comparatively weak form of authentication. They should be reviewed and rotated periodically, disabled when no longer needed, and combined with stronger mechanisms such as OAuth 2.0.
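As a rough illustration of careful API key handling, the sketch below issues a random key, stores only its hash, compares keys in constant time, and supports revocation. The in-memory `store` dictionary and the function names are hypothetical stand-ins for illustration; a real service would use a database or secrets manager.

```python
import hashlib
import hmac
import secrets


def hash_key(api_key: str) -> str:
    """Hash the key so the raw value never needs to be stored server-side."""
    return hashlib.sha256(api_key.encode("utf-8")).hexdigest()


def issue_key(store: dict, client_id: str) -> str:
    """Create a random key for a client and remember only its hash."""
    api_key = secrets.token_urlsafe(32)
    store[client_id] = hash_key(api_key)
    return api_key


def verify_key(store: dict, client_id: str, presented_key: str) -> bool:
    """Check a presented key using a constant-time comparison."""
    expected = store.get(client_id)
    if expected is None:
        return False
    return hmac.compare_digest(expected, hash_key(presented_key))


def revoke_key(store: dict, client_id: str) -> None:
    """Disable a client's key, for example during rotation."""
    store.pop(client_id, None)
```

Storing only the hash means a leaked key table does not directly reveal usable keys, and `hmac.compare_digest` avoids leaking information through comparison timing.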

Role-based access control (RBAC)

- RBAC manages access to API resources based on the roles assigned to users. This ensures that each user gets the access they need and no more. For example, a user with the “viewer” role may only be able to see results, not alter or delete data, while an “admin” can perform all operations.
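The viewer/admin split described above can be sketched as a simple permission lookup. The role names come from the text; the action names (`read_results`, `update_data`, `delete_data`) are assumptions made for this example.

```python
# Hypothetical mapping from roles to the actions they may perform.
ROLE_PERMISSIONS = {
    "viewer": {"read_results"},
    "admin": {"read_results", "update_data", "delete_data"},
}


def is_allowed(role: str, action: str) -> bool:
    """Return True only if the role explicitly grants the action."""
    return action in ROLE_PERMISSIONS.get(role, set())
```

Unknown roles fall through to an empty permission set, so the check denies by default.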

2)Data Encryption

Importance of data encryption in transit and at rest

- Encryption is essential for keeping sensitive data out of the hands of unauthorized persons. It ensures that even if attackers intercept the data or gain access to where it is stored, they cannot read it unless they also possess the proper decryption key.

Implementing SSL/TLS

- SSL stands for Secure Sockets Layer. Transport Layer Security (TLS) is its successor and the protocol used today for encrypted communication between clients, such as web browsers, and servers, such as web servers and APIs. Implementing SSL/TLS on your Deep Learning API means that all data exchanged between the client and server is encrypted. It also helps verify the identity of the server.
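On the client side, a minimal sketch using Python’s standard `ssl` module might look like the following. The choice of TLS 1.2 as the minimum version is an assumption for this example, not a requirement stated in the article.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a TLS context that verifies the server certificate and hostname."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Assumption for this sketch: refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

A context like this can be passed to standard-library clients, for example via the `context` argument of `urllib.request.urlopen`.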

3)Input Validation and Sanitization

Techniques for preventing injection attacks

- Input validation is very important for protecting the API from injection attacks, which occur when an attacker sends the API malicious input to trick it into executing unintended commands. Common injection attacks include SQL injection, command injection, and XML injection.
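One common defense against SQL injection is the parameterized query, sketched here with Python’s built-in `sqlite3` module. The `predictions` table and its columns are hypothetical and exist only for this example.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical predictions table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE predictions (user_id TEXT, label TEXT)")
    conn.executemany(
        "INSERT INTO predictions VALUES (?, ?)",
        [("alice", "cat"), ("bob", "dog")],
    )
    return conn


def find_predictions(conn: sqlite3.Connection, user_id: str) -> list:
    """Look up labels with a placeholder so input is never parsed as SQL."""
    cursor = conn.execute(
        "SELECT label FROM predictions WHERE user_id = ?", (user_id,)
    )
    return [row[0] for row in cursor.fetchall()]
```

Because the user-supplied value is bound as data, an input such as `alice' OR '1'='1` is treated as a literal user ID and matches nothing.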

Validating inputs to avoid adversarial attacks

- In adversarial attacks on deep learning, adversarial inputs are inputs that have been manipulated so that the model produces an output different from the expected one.

- Inputs can be hardened in several ways, such as normalizing and transforming them, removing noise, and adversarial training, in which the model is confronted with adversarial examples during training so that it learns to make correct predictions despite them.
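A first layer of input checking for a model endpoint could look like the sketch below. It assumes a hypothetical model that takes a 28x28 grayscale image as nested lists with pixel values from 0 to 255. Checks like these reject malformed input but do not by themselves stop adversarial perturbations that stay within the valid range.

```python
def validate_image(pixels, height: int = 28, width: int = 28):
    """Reject malformed images, then scale pixel values to the range [0, 1]."""
    if not isinstance(pixels, list) or len(pixels) != height:
        raise ValueError("unexpected number of rows")
    for row in pixels:
        if not isinstance(row, list) or len(row) != width:
            raise ValueError("unexpected row length")
        for value in row:
            if isinstance(value, bool) or not isinstance(value, (int, float)):
                raise ValueError("non-numeric pixel")
            # This comparison also fails for NaN and infinite values.
            if not 0 <= value <= 255:
                raise ValueError("pixel out of range")
    return [[value / 255.0 for value in row] for row in pixels]
```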

4)Rate Limiting and Throttling

How rate limiting protects against abuse

- Rate limiting regulates the number of requests a client can send to an API within a specific time frame. By capping the number of requests, the rate limiter shields the API from abuse such as Denial of Service (DoS) attacks, in which a flood of requests is sent to the server to make it inoperable.
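A minimal sliding-window rate limiter, one of several possible designs, might look like this. The class name and the injectable `clock` parameter are choices made for this sketch so that the behavior is easy to test.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float,
                 clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock
        self._hits = defaultdict(deque)  # client_id -> request timestamps

    def allow(self, client_id: str) -> bool:
        now = self.clock()
        hits = self._hits[client_id]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: reject this request
        hits.append(now)
        return True
```

A caller would typically respond with HTTP status 429 when `allow` returns `False`.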

Setting up throttling mechanisms

- Throttling and rate limiting are two methods developers use to prevent the server from being overloaded. Rate limiting caps how many requests a client can make and rejects the excess. Where outright rejection is not suitable for a specific situation, throttling slows down or queues requests instead.
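One way to throttle rather than reject is a token bucket that tells the caller how long to wait. The sketch below is an illustrative design under that assumption; the class and method names are hypothetical.

```python
import time


class TokenBucketThrottle:
    """Return how long each request should be delayed instead of rejecting it."""

    def __init__(self, rate_per_second: float, capacity: int,
                 clock=time.monotonic):
        self.rate = rate_per_second
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def delay_for_next_request(self) -> float:
        now = self.clock()
        # Refill tokens for the time elapsed, up to the bucket capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        # Spend one token; a negative balance represents queued requests.
        self.tokens -= 1.0
        if self.tokens >= 0:
            return 0.0
        return -self.tokens / self.rate
```

The server can sleep for the returned delay before handling the request, which smooths bursts down to the configured rate.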

5)Regular Security Audits and Vulnerability Assessments

Conducting periodic security reviews

- Regular security reviews are among the most effective ways to identify and remove vulnerabilities in Deep Learning APIs, so it is vital to conduct them. These reviews should examine the API from every angle, including code, infrastructure, and processes, to confirm that security controls follow best practices.

Tools and techniques for vulnerability scanning

- OWASP ZAP, Burp Suite, and Nessus are widely used automated tools in this area. They can scan APIs for vulnerabilities and check for familiar security issues such as weak authentication, insecure data transmission, and injection vulnerabilities.

6)Implementing Secure Code Practices

Guidelines for writing secure code

- Your developers should follow secure coding practices to reduce the risk of security bugs in your API. Secure development means committing to guidelines such as the following:

- Secrets management: Instead of embedding passwords directly in the app source code, store them in environment variables or secrets vaults. Where an insecure API is used, replace it with a safer substitute.

- Proper error handling: Make sure that error messages do not expose the internal workings of the server or user data to an attacker.

- Logging and monitoring: Log both critical actions and security events, and then review the logs for suspicious activity.
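The secrets and logging guidelines above can be sketched with the standard library alone. The logger name `dl_api.security` and the helper names are hypothetical, and a production system might read from a vault rather than environment variables.

```python
import logging
import os

logger = logging.getLogger("dl_api.security")


def load_secret(name: str) -> str:
    """Read a secret from the environment instead of hard-coding it."""
    value = os.environ.get(name)
    if not value:
        # Fail fast, and never include the secret value in the message.
        raise RuntimeError(f"required secret {name} is not set")
    return value


def log_auth_failure(client_id: str, reason: str) -> None:
    """Record a security event so it can be reviewed or alerted on later."""
    logger.warning("auth_failure client=%s reason=%s", client_id, reason)
```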

Common coding pitfalls and how to avoid them

- Several common coding pitfalls can lead to security failures, such as:

- Buffer Overflows: Check the length of input before processing starts, so that it is handled robustly and no data is written beyond the memory allocated for it.

- Improper Exception Handling: Avoid exposing sensitive data in exception messages. Also handle all error conditions gracefully, presenting a clear error message where needed, because the user probably does not know what caused the exception.
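Both pitfalls can be illustrated in one small request handler. The 10,000-character limit, the payload shape, and the `predict` callable are assumptions made for this sketch.

```python
import logging
import uuid

logger = logging.getLogger("dl_api.errors")

MAX_TEXT_LENGTH = 10_000  # hypothetical limit for this example


def handle_request(payload: dict, predict):
    """Validate input length and return generic errors to the client."""
    text = payload.get("text")
    if not isinstance(text, str) or len(text) > MAX_TEXT_LENGTH:
        return 400, {"error": "invalid input"}
    try:
        return 200, {"prediction": predict(text)}
    except Exception:
        # Keep details in the server log; give the client only a reference.
        incident_id = uuid.uuid4().hex
        logger.exception("prediction failed incident=%s", incident_id)
        return 500, {"error": "internal error", "incident_id": incident_id}
```

The incident ID lets support staff find the full stack trace in the logs without revealing it to the caller.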

Case Studies and Real-world Examples

a)Successful Security Implementations

- Google Cloud’s AI Platform

Overview:

Google Cloud’s AI Platform is a popular and powerful service that lets businesses use machine learning, artificial intelligence (AI), and deep learning. With a strong emphasis on security, Google safeguards its APIs and data through a comprehensive program.

Security Measures:

- Authentication and Authorization: Google Cloud uses OAuth 2.0 for secure API authentication, coupled with Identity and Access Management (IAM) to grant fine-grained access control.

- Data Protection: Data at rest is encrypted with AES-256, while data in transit is secured by TLS/SSL.

- Input Validation: Google applies stringent input validation to guard against common vulnerabilities such as SQL injection, cross-site scripting (XSS), and many others.

- Rate Limiting: To prevent and control abuse, API calls are regulated and monitored to ensure fair use.

Lessons Learned:

- Importance of Layered Security: Google’s experience shows the value of combining at least two layers of protection: administrative controls (role-based access) and technical controls (encryption, rate limiting).

- Ongoing Monitoring and Adaptation: The data security landscape is complex and changes rapidly. Because new security threats emerge continually, continuous monitoring and prompt adaptation are fundamental to keeping an organization and its infrastructure protected.

- Microsoft Azure Cognitive Services

Overview:

Microsoft Azure Cognitive Services is a set of artificial intelligence (AI) services that includes APIs for vision, speech recognition, language translation, and decision-making. Security is a major concern, since both customer data and the AI models themselves are targets.

Security Measures:

- Compliance with Regulations: Azure Cognitive Services aligns with several global regulations and standards, including GDPR, HIPAA, and ISO/IEC, to ensure data privacy and security.

- Data Residency Options: Microsoft offers data residency options, allowing customers to choose where their data is stored.

- Secure Model Deployment: Models are deployed in a protected, private environment where end-to-end encryption and access controls are used to block unauthorized access.

Lessons Learned:

- Compliance as a Competitive Advantage: Compliance with regulations has helped Microsoft address its customers’ concerns and earn their trust.

- Customization and Flexibility: Customizable security settings, such as privacy settings and data residency choices, are one of the ways in which businesses can adapt their security measures to fit their individual needs.

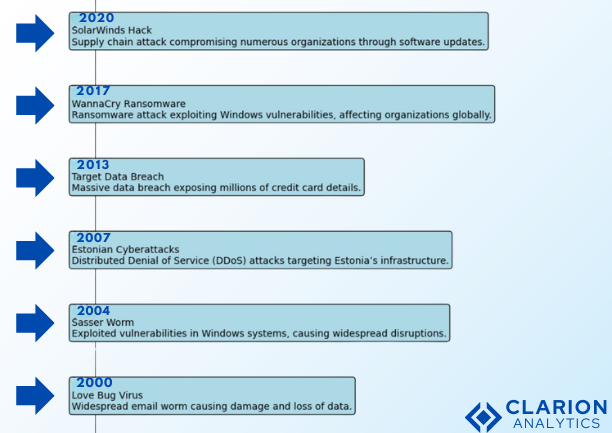

b)Security Breaches and Their Impact

- Case: Facebook API Breach (2018)

In 2018, Facebook suffered a massive data breach that affected about 50 million accounts. Attackers exploited vulnerabilities in Facebook’s APIs to obtain access tokens for user accounts, which allowed them to take those accounts over.

Impact:

- Loss of User Trust: The breach led to a significant loss of trust among users, accompanied by negative media coverage and a reduction in user engagement.

- Regulatory Scrutiny: The company came under investigation in the European Union and faced the possibility of fines.

How It Could Have Been Avoided:

- Regular Security Audits: Regular, in-depth security reviews could have allowed such weaknesses to be noticed early and patched.

- Stronger Access Controls: Stricter access controls that limit the scope of API permissions could have reduced the effects of the breach.

- Case: Tesla’s API Vulnerability (2020)

In 2020, security researchers found a weakness in Tesla’s API that attackers could exploit to operate the car without the owner’s knowledge.

Impact:

- Potential Safety Risks: The weakness could have put car owners in physical danger, which shows how software flaws can become safety risks. It makes the case for demanding secure APIs in IoT and connected devices.

- Reputation Damage: Although the vulnerability was disclosed and fixed before any exploit, the incident prompted discussion about Tesla’s security measures.

How It Could Have Been Avoided:

- Input Validation and Sanitization: Strict validation at the input stage, with proper checks on incoming data, could have prevented exploitation of the API vulnerability.

- Bug Bounty Programs: A broader bug bounty program, with incentives for security researchers, might have surfaced the vulnerability earlier.

Future Trends in API Security for Deep Learning

a)Emerging tools and technologies for API security:

- AI-Powered Threat Detection:

AI and machine learning are increasingly used to strengthen the security of deep learning APIs. Specifically, such tools can detect and respond to threats very quickly.

- Homomorphic Encryption:

Homomorphic encryption allows computation on data while it remains encrypted, without decrypting it first. It is widely expected to become important for deep learning APIs, where it could protect data during processing.

- Zero Trust Architecture:

The Zero Trust approach, which assumes that no part of a network is inherently secure and that identity and access must be verified continuously, is becoming standard in deep learning API security. In practice it is often paired with security testing in the CI/CD workflow and rapid iteration.

- API Security Testing Tools:

New tools for testing deep learning API security keep appearing, designed to find vulnerabilities at the earliest stages of development. They are useful for automatically scanning for known flaws, e.g. broken authentication or insecure data transmission, that result from poor API coding practices.
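To make the homomorphic encryption idea above concrete, here is a toy version of the Paillier scheme, which supports addition on encrypted values. This is only an illustration: the tiny primes 293 and 433 are chosen for readability and offer no security, and the code assumes Python 3.8 or later for modular inverses via `pow`.

```python
import math
import secrets


def generate_keys(p: int = 293, q: int = 433):
    """Return (public modulus n, private key) from two toy primes."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid because this sketch uses generator g = n + 1
    return n, (lam, mu)


def encrypt(n: int, message: int) -> int:
    """Encrypt an integer 0 <= message < n under the public modulus n."""
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random value in 1..n-1
        if math.gcd(r, n) == 1:
            break
    # With g = n + 1, g**message mod n**2 equals 1 + message * n.
    return (1 + message * n) * pow(r, n, n_sq) % n_sq


def decrypt(n: int, private_key, ciphertext: int) -> int:
    """Recover the plaintext using the private key."""
    lam, mu = private_key
    x = pow(ciphertext, lam, n * n)
    return (x - 1) // n * mu % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiply ciphertexts to add the underlying plaintexts."""
    return c1 * c2 % (n * n)
```

A server holding only `n` can call `add_encrypted` on two ciphertexts without ever seeing the numbers involved. Only the holder of the private key can decrypt the sum.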

b)Predictions for the future of Deep learning API security:

- Increased Automation in Security Management:

Security automation will become an essential part of security as deep learning models and their related deep learning APIs become more complex. Automated security tools will help companies monitor and enforce security policies, detect and respond to threats, and even patch vulnerabilities without human intervention.

- Making AI Privacy More Important than Ever:

As data privacy rules grow stricter, privacy-preserving AI techniques such as federated learning and differential privacy will be in high demand. These mechanisms let companies deploy deep learning models while keeping user privacy intact, which in turn strengthens deep learning API security.

- The symbiosis between AI and Security Communities:

Closer ties between the AI and cybersecurity communities will produce contributions in both directions. Researchers and practitioners will join forces to craft new methods and tools for protecting deep learning APIs, making security measures more sophisticated and effective.

c)Best Practices for Staying Ahead

Recommendations for keeping up with security trends:

- Continuous Learning and Training:

If you work with deep learning APIs, make sure you stay aware of the latest security threats and best practices. Training should be tailored to specific roles yet comprehensive, covering secure coding, threat modeling, and the newest security technologies.

- Engaging with the Security Community:

Attending cybersecurity webinars, forums, and security roundtables helps developers, security staff, and others learn about new trends and challenges in deep learning API security. Engaging with the wider community brings in different perspectives and unites people around the common goal of a safer internet. It is also a great opportunity to learn from others, share knowledge, and collaborate on solutions with cybersecurity professionals worldwide.

- Leveraging Threat Intelligence:

Subscribing to threat intelligence services keeps you informed about emerging threats and helps you avoid them. Current intelligence on new forms of cybercrime and newly discovered vulnerabilities supports more comprehensive risk assessment and monitoring, which gives you the best chance of prevention.

d)Continuous improvement strategies:

- Regular Security Audits and Penetration Testing:

Secure coding practices and an effective threat model are two of the most important foundations. On top of them, regular security audits and penetration testing are essential for understanding a deep learning API’s vulnerabilities in depth. For a realistic assessment of the API’s security posture, audits should be carried out both by the in-house team and by external experts.

- A DevSecOps approach:

Integrating security into DevOps (DevSecOps) ensures that developers treat security as a critical part of the deep learning API development lifecycle from the start of the project. Building security tasks into the team’s agile workflow, and involving developers in the security program, ultimately produces better and more resilient deep learning APIs.

- A continuous monitoring system:

A continuous monitoring system should track user behavior, API traffic, and system logs around the clock in order to detect and report security incidents. Organizations should use automated detection tools that alert security teams and help them take countermeasures against emerging threats.
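As a very small example of automated detection over API logs, the sketch below flags clients with many failed authentication attempts. The log entry shape (`client_id`, `status`) and the threshold of five failures are assumptions for illustration.

```python
from collections import Counter


def flag_suspicious_clients(log_entries, max_failures: int = 5):
    """Return client IDs with more than max_failures 401/403 responses."""
    failures = Counter(
        entry["client_id"]
        for entry in log_entries
        if entry["status"] in (401, 403)
    )
    return sorted(
        client for client, count in failures.items() if count > max_failures
    )
```

In practice the flagged list would feed an alerting system instead of being returned to a caller.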

Conclusion

Securing a deep learning API is a multifaceted challenge that requires careful consideration of both technical and strategic aspects. We have examined why deep learning API security matters, looked at the threats that are specific to deep learning models, and laid out a guide to best practices for securing these APIs.
