1. Introduction

Large Language Models (LLMs) have transformed artificial intelligence by greatly improving natural language understanding and generation. Much of this progress comes from the transformer architecture, which replaced RNNs and CNNs as the standard choice for most NLP tasks.

This article explains how transformers work and then shows how to build a basic transformer model from scratch.

This guide is written for beginners in software development and AI who want to learn transformers through hands-on practice.


2. What Are Transformers?

The transformer architecture, introduced by Vaswani et al. in the 2017 paper “Attention Is All You Need”, changed how NLP systems are built. Before transformers, RNNs and LSTMs processed sequences one step at a time, with each step depending on the previous one, which made them slow to train and hard to parallelize.

Self-attention is the core idea behind this innovation: when processing any word, the model can weigh every other word in the sequence, no matter how far apart they are. Because these computations do not depend on a previous step, they can run in parallel across the whole sequence, which makes training much faster.
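To make the contrast concrete, here is a minimal PyTorch sketch with toy sizes and random weights chosen only for illustration. The RNN-style loop has to walk through positions one by one, while self-attention scores every pair of positions in a single matrix product. For simplicity it uses the raw embeddings `x` directly as queries, keys, and values; a real transformer first applies learned projections.

```python
import torch

torch.manual_seed(0)
seq_len, d = 6, 8
x = torch.randn(seq_len, d)  # toy embeddings for a 6-token sequence

# RNN-style recurrence: step t needs the hidden state from step t-1,
# so the loop over positions cannot run in parallel.
W_x = torch.randn(d, d) * 0.1
W_h = torch.randn(d, d) * 0.1
h = torch.zeros(d)
rnn_states = []
for t in range(seq_len):
    h = torch.tanh(x[t] @ W_x + h @ W_h)
    rnn_states.append(h)
rnn_out = torch.stack(rnn_states)  # (seq_len, d)

# Self-attention: all pairwise scores come from one matrix product,
# so every position is handled at the same time.
# Simplification: x serves as queries, keys and values (no learned projections).
scores = x @ x.T / d ** 0.5              # (seq_len, seq_len)
weights = torch.softmax(scores, dim=-1)  # each row sums to 1
attn_out = weights @ x                   # (seq_len, d)

print(rnn_out.shape, attn_out.shape)
```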

Transformers are the foundation of most current LLMs, including GPT and BERT. Built around self-attention, these models achieve state-of-the-art results in translation, summarization, question answering, and many other tasks.


3. Understanding the Transformer Architecture

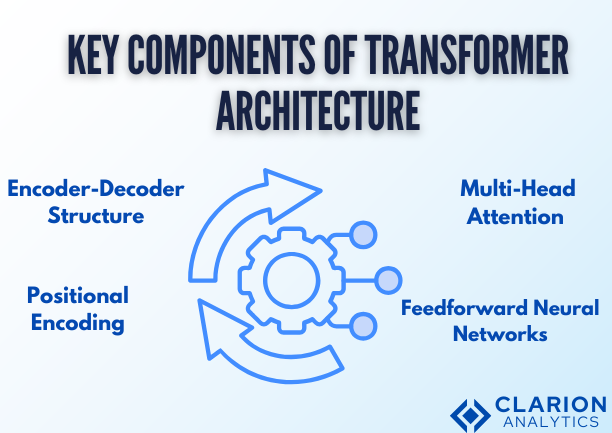

Transformers are built from a few key components that together let them process and interpret sequence data.

Key Components:

The encoder transforms the input sequence into encoded representations, and the decoder uses those representations to generate the output sequence. This design suits tasks such as translation, where the input and output sequences can differ in length.
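As a rough illustration of the encoder-decoder split, the sketch below uses PyTorch's built-in `nn.Transformer` (not the from-scratch model built later in this article) with deliberately small, arbitrary sizes. The source and target sequences have different lengths, and the output contains one vector per target position.

```python
import torch
import torch.nn as nn

# PyTorch's built-in encoder-decoder transformer; small sizes keep the example light.
model = nn.Transformer(
    d_model=64, nhead=4,
    num_encoder_layers=2, num_decoder_layers=2,
    dim_feedforward=128, batch_first=True,
)

src = torch.rand(2, 12, 64)  # (batch, source length, d_model), e.g. an embedded source sentence
tgt = torch.rand(2, 7, 64)   # (batch, target length, d_model), a shorter target sentence

# Causal mask so each target position only attends to itself and earlier positions.
tgt_mask = model.generate_square_subsequent_mask(tgt.size(1))

out = model(src, tgt, tgt_mask=tgt_mask)
print(out.shape)  # torch.Size([2, 7, 64]): one vector per target position
```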

Multi-head attention lets the model attend to several parts of the sequence at once. Each head computes its own attention weights, so different heads can pick up different kinds of relationships between words, which gives the model a finer-grained understanding of context.
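The sketch below shows one way to write multi-head self-attention from scratch. The class name `MultiHeadSelfAttention` and its fused `qkv` projection are illustrative choices, not a standard API. The model dimension is split into `num_heads` smaller heads, each head computes its own attention weights, and the head outputs are concatenated and mixed by a final linear layer.

```python
import math
import torch
import torch.nn as nn

class MultiHeadSelfAttention(nn.Module):
    """Hypothetical from-scratch multi-head self-attention (batch-first inputs)."""

    def __init__(self, d_model, num_heads):
        super().__init__()
        assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
        self.num_heads = num_heads
        self.d_head = d_model // num_heads
        self.qkv = nn.Linear(d_model, 3 * d_model)  # joint Q, K, V projection
        self.out = nn.Linear(d_model, d_model)      # mixes the concatenated heads

    def forward(self, x):
        batch, seq_len, d_model = x.shape
        q, k, v = self.qkv(x).chunk(3, dim=-1)

        def split(t):
            # (batch, seq, d_model) -> (batch, heads, seq, d_head)
            return t.reshape(batch, seq_len, self.num_heads, self.d_head).transpose(1, 2)

        q, k, v = split(q), split(k), split(v)
        # Each head computes its own attention pattern over the sequence.
        scores = q @ k.transpose(-2, -1) / math.sqrt(self.d_head)
        weights = torch.softmax(scores, dim=-1)  # (batch, heads, seq, seq)
        heads = weights @ v                      # (batch, heads, seq, d_head)
        # Concatenate the heads back into (batch, seq, d_model).
        merged = heads.transpose(1, 2).reshape(batch, seq_len, d_model)
        return self.out(merged), weights

mha = MultiHeadSelfAttention(d_model=32, num_heads=4)
x = torch.rand(2, 5, 32)
y, attn = mha(x)
print(y.shape, attn.shape)  # torch.Size([2, 5, 32]) torch.Size([2, 4, 5, 5])
```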

Feedforward neural networks then transform the output of the attention step at each position, introducing non-linearity so the model can detect complex patterns.
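A small sketch of the position-wise feedforward network follows. It uses the same Linear, ReLU, Linear shape and 4x hidden expansion as the encoder layer code in Section 4; the sizes here are arbitrary. Applying it to the whole sequence matches (up to floating-point rounding) applying it to each position separately, which is what "position-wise" means.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
d_model, d_ff = 16, 64  # d_ff = 4 * d_model, mirroring the encoder layer in this article

ffn = nn.Sequential(
    nn.Linear(d_model, d_ff),
    nn.ReLU(),               # the non-linearity
    nn.Linear(d_ff, d_model),
)

x = torch.rand(1, 5, d_model)  # (batch, seq_len, d_model)
full = ffn(x)                  # applied to all positions at once

# The same weights applied to each position on its own give the same result.
per_position = torch.stack([ffn(x[:, t]) for t in range(x.size(1))], dim=1)
print(torch.allclose(full, per_position, atol=1e-6))
```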

Positional encoding is added to the input embeddings to supply information about each token's position, because transformers do not process tokens sequentially and self-attention by itself ignores word order. This keeps the order of the original sequence available to the model throughout processing.
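For reference, the sinusoidal encoding proposed by Vaswani et al. assigns each position pos and dimension pair (2i, 2i+1) the values below, where d_model is the embedding size. The `positional_encoding` function in Section 4 computes the same thing; its loop variable `i` steps over even indices and so plays the role of 2i.

```latex
PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right),
\qquad
PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right)
```

At pos = 0 every sine term is 0 and every cosine term is 1, so the first position's encoding alternates 0, 1, 0, 1, and so on; the different frequencies across dimensions give each position its own pattern.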


- Mathematical Insights (Optional):

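Attention scores determine where the model focuses: each token's query is compared with every token's key, the scores are normalized with a softmax, and more relevant tokens receive higher weights in the resulting weighted sum of values.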
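Written out, this is the scaled dot-product attention formula from “Attention Is All You Need”, where Q, K, and V are the query, key, and value matrices and d_k is the key dimension. Dividing by √d_k keeps the dot products from growing with the dimension, which would otherwise push the softmax into regions with very small gradients. The second line is the multi-head version from the same paper: each head has its own learned projections W_i^Q, W_i^K, W_i^V, and W^O mixes the concatenated heads. The `scaled_dot_product_attention` function in Section 4 follows the first line, with `query.size(-1)` as d_k.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V

\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^{O},
\quad \mathrm{head}_i = \mathrm{Attention}(Q W_i^{Q}, K W_i^{K}, V W_i^{V})
```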


4. Coding a Transformer from Scratch

- Setup:

To get started, you’ll need the following:

- Required Libraries: Python 3 with PyTorch. NumPy is optional; the code below uses only PyTorch and Python's built-in math module.

- Environment Setup: Install the necessary libraries with pip:

```bash
pip install torch numpy
```

- Step-by-Step Implementation:

- Create positional encodings.

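```python
import torch
import math

def positional_encoding(max_len, d_model):
    """Sinusoidal positional encodings of shape (1, max_len, d_model); d_model must be even."""
    pe = torch.zeros(max_len, d_model)
    for pos in range(max_len):
        for i in range(0, d_model, 2):
            # Even dimensions use sine, odd dimensions use cosine, sharing one frequency.
            pe[pos, i] = math.sin(pos / (10000 ** (i / d_model)))
            pe[pos, i + 1] = math.cos(pos / (10000 ** (i / d_model)))
    # Leading batch dimension so it broadcasts over (batch, seq_len, d_model).
    return pe.unsqueeze(0)
```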
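A loop over every position and dimension is easy to read but slow for long sequences. A vectorized variant can compute the whole table with broadcasting; the name `positional_encoding_vectorized` is hypothetical, and like the loop version it assumes an even `d_model`. It rewrites 1 / 10000^(i/d_model) as exp(-ln(10000) · i / d_model), which is mathematically equal.

```python
import math
import torch

def positional_encoding_vectorized(max_len, d_model):
    """Hypothetical vectorized equivalent of positional_encoding (d_model assumed even)."""
    pos = torch.arange(max_len, dtype=torch.float32).unsqueeze(1)  # (max_len, 1)
    i = torch.arange(0, d_model, 2, dtype=torch.float32)           # even indices 0, 2, 4, ...
    div = torch.exp(-math.log(10000.0) * i / d_model)               # 1 / 10000^(i / d_model)
    pe = torch.zeros(max_len, d_model)
    pe[:, 0::2] = torch.sin(pos * div)  # broadcast to (max_len, d_model / 2)
    pe[:, 1::2] = torch.cos(pos * div)
    return pe.unsqueeze(0)              # (1, max_len, d_model)

pe = positional_encoding_vectorized(max_len=100, d_model=512)
print(pe.shape)  # torch.Size([1, 100, 512])
```

Its output should match the loop version up to floating-point rounding.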

- Define the attention mechanism.

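```python
def scaled_dot_product_attention(query, key, value):
    # Similarity of every query with every key, scaled by sqrt(d_k).
    scores = torch.matmul(query, key.transpose(-2, -1)) / math.sqrt(query.size(-1))
    # Normalize the scores into weights that sum to 1 over the keys.
    weights = torch.nn.functional.softmax(scores, dim=-1)
    # Weighted sum of the value vectors.
    return torch.matmul(weights, value)
```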
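Decoders (and padded batches) need to stop some positions from attending to others. A common approach, sketched here as a hypothetical variant named `scaled_dot_product_attention_masked`, sets blocked scores to negative infinity before the softmax so their weights become zero. This version also returns the weights so they can be inspected. The boolean convention used here (True means "may attend") is an assumption of this sketch; PyTorch's `nn.MultiheadAttention` uses the opposite convention for boolean masks.

```python
import math
import torch

def scaled_dot_product_attention_masked(query, key, value, mask=None):
    """Hypothetical variant with an optional boolean mask (True = may attend)."""
    scores = torch.matmul(query, key.transpose(-2, -1)) / math.sqrt(query.size(-1))
    if mask is not None:
        # Blocked positions get -inf, so softmax assigns them zero weight.
        scores = scores.masked_fill(~mask, float("-inf"))
    weights = torch.softmax(scores, dim=-1)
    return torch.matmul(weights, value), weights

seq_len, d_k = 4, 8
q = k = v = torch.rand(1, seq_len, d_k)
# Causal (lower-triangular) mask: position t may attend to positions 0..t only.
causal = torch.tril(torch.ones(seq_len, seq_len, dtype=torch.bool))
out, w = scaled_dot_product_attention_masked(q, k, v, mask=causal)
print(w[0])  # upper triangle is all zeros: no position attends to a later one
```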

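- Build the encoder block. It uses PyTorch's built-in nn.MultiheadAttention, which adds learned query, key, and value projections and runs scaled dot-product attention across several heads. (A decoder block follows the same pattern but adds causally masked self-attention and cross-attention over the encoder output; the model in this article uses only encoder layers.)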

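```python
import torch.nn as nn

class TransformerEncoderLayer(nn.Module):
    def __init__(self, d_model, num_heads):
        super().__init__()
        # batch_first=True: inputs are (batch, seq_len, d_model), matching the
        # positional-encoding slice in the Transformer class.
        self.attention = nn.MultiheadAttention(d_model, num_heads, batch_first=True)
        # Position-wise feedforward network with a 4x hidden expansion.
        self.feedforward = nn.Sequential(
            nn.Linear(d_model, d_model * 4),
            nn.ReLU(),
            nn.Linear(d_model * 4, d_model),
        )
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)

    def forward(self, src):
        # Self-attention: queries, keys and values all come from src.
        attn_output, _ = self.attention(src, src, src)
        src = self.norm1(src + attn_output)  # residual connection + layer norm
        ff_output = self.feedforward(src)
        return self.norm2(src + ff_output)   # residual connection + layer norm
```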
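The article's model stops at the encoder. For completeness, here is a minimal sketch of a matching decoder layer; the class name `TransformerDecoderLayer` and its layout are assumptions modeled on the encoder layer above, not code from the original. It adds causally masked self-attention, so each target position only sees earlier positions, and cross-attention, where the decoder's queries attend to the encoder output (`memory`).

```python
import torch
import torch.nn as nn

class TransformerDecoderLayer(nn.Module):
    """Hypothetical post-norm decoder layer mirroring the encoder layer above."""

    def __init__(self, d_model, num_heads):
        super().__init__()
        self.self_attention = nn.MultiheadAttention(d_model, num_heads, batch_first=True)
        self.cross_attention = nn.MultiheadAttention(d_model, num_heads, batch_first=True)
        self.feedforward = nn.Sequential(
            nn.Linear(d_model, d_model * 4),
            nn.ReLU(),
            nn.Linear(d_model * 4, d_model),
        )
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.norm3 = nn.LayerNorm(d_model)

    def forward(self, tgt, memory):
        seq_len = tgt.size(1)
        # Boolean causal mask for nn.MultiheadAttention: True marks future positions
        # that a query is NOT allowed to attend to.
        causal = torch.triu(
            torch.ones(seq_len, seq_len, dtype=torch.bool, device=tgt.device), diagonal=1
        )
        attn_output, _ = self.self_attention(tgt, tgt, tgt, attn_mask=causal)
        tgt = self.norm1(tgt + attn_output)
        # Cross-attention: queries from the decoder, keys and values from the encoder output.
        cross_output, _ = self.cross_attention(tgt, memory, memory)
        tgt = self.norm2(tgt + cross_output)
        ff_output = self.feedforward(tgt)
        return self.norm3(tgt + ff_output)

decoder_layer = TransformerDecoderLayer(d_model=64, num_heads=4)
memory = torch.rand(2, 12, 64)  # encoder output: (batch, source length, d_model)
tgt = torch.rand(2, 7, 64)      # embedded target: (batch, target length, d_model)
out = decoder_layer(tgt, memory)
print(out.shape)  # torch.Size([2, 7, 64])
```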

- Combine components to form the transformer model.

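```python
class Transformer(nn.Module):
    def __init__(self, num_layers, d_model, num_heads, max_len):
        super().__init__()
        # Encoder-only stack of identical encoder layers.
        self.encoder_layers = nn.ModuleList([
            TransformerEncoderLayer(d_model, num_heads) for _ in range(num_layers)
        ])
        # Registered as a buffer so it moves with the model (e.g. model.to("cuda"))
        # without being treated as a trainable parameter.
        self.register_buffer("positional_encoding", positional_encoding(max_len, d_model))

    def forward(self, src):
        # src: (batch, seq_len, d_model) embeddings; seq_len must not exceed max_len.
        src = src + self.positional_encoding[:, :src.size(1), :]
        for layer in self.encoder_layers:
            src = layer(src)
        return src
```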

5. Testing the Model

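- To test the transformer, pass a batch of embeddings through it. This model is an encoder-only stack that expects already-embedded input of shape (batch, seq_len, d_model), so for real text, such as a toy sequence-to-sequence translation dataset, you would first tokenize the data and map the token IDs to vectors with an embedding layer. Here random tensors stand in for those embeddings: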

```python
model = Transformer(num_layers=6, d_model=512, num_heads=8, max_len=100)
input_sequence = torch.rand(10, 100, 512)  # stand-in embeddings: (batch=10, seq_len=100, d_model=512)
output = model(input_sequence)
print(output.shape)  # torch.Size([10, 100, 512]), same as the input
```

- The output has the same shape as the input: one d_model-dimensional vector per position. Matching shapes only confirm that the layers are wired correctly. The weights are untrained, so the outputs carry no meaning until the model is trained, and even then this basic implementation lacks the refinements of pretrained models.
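For real text, the step this test skips is turning words into vectors. The sketch below uses a toy whitespace tokenizer and vocabulary (both hypothetical), pads the batch, embeds the token IDs with `nn.Embedding`, and runs PyTorch's built-in `nn.TransformerEncoder` as a stand-in for the article's `Transformer` class because it accepts a padding mask. Positional encodings are omitted to keep it short.

```python
import torch
import torch.nn as nn

# Toy corpus and a hypothetical whitespace "tokenizer"; real projects use a trained tokenizer.
sentences = ["the cat sat", "the dog sat on the mat"]
vocab = {"<pad>": 0}
for s in sentences:
    for word in s.split():
        vocab.setdefault(word, len(vocab))

max_len = max(len(s.split()) for s in sentences)
ids = torch.tensor([
    [vocab[w] for w in s.split()] + [vocab["<pad>"]] * (max_len - len(s.split()))
    for s in sentences
])                                     # (batch, seq_len) token IDs
padding_mask = ids == vocab["<pad>"]   # True where the token is padding

d_model = 32
embed = nn.Embedding(len(vocab), d_model, padding_idx=vocab["<pad>"])
# Stand-in encoder: PyTorch's built-in layers, batch-first.
encoder = nn.TransformerEncoder(
    nn.TransformerEncoderLayer(d_model, nhead=4, batch_first=True), num_layers=2
)

x = embed(ids) * d_model ** 0.5        # embedding scaling used in the original paper
out = encoder(x, src_key_padding_mask=padding_mask)
print(ids.shape, out.shape)            # torch.Size([2, 6]) torch.Size([2, 6, 32])
```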

6. Next Steps and Applications

- With a task-specific head on top (and, for machine translation, a decoder), this simple transformer can be adapted to text classification, question answering, or machine translation. Training it on larger datasets generally improves performance.

- For production use, pretrained transformer models such as BERT, GPT, or T5 deliver state-of-the-art performance on NLP tasks and usually far outperform a small model trained from scratch.

- For more details, refer to Hugging Face’s Transformers library or the PyTorch documentation for advanced implementations.
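As a rough sketch of the text-classification case, an encoder can be topped with mean pooling and a linear layer, then trained with cross-entropy. `SequenceClassifier`, the sizes, and the random toy batch are all hypothetical, and positional encodings and padding masks are left out for brevity. Only a single optimization step is shown; training on a real dataset repeats it over many labelled batches.

```python
import torch
import torch.nn as nn

class SequenceClassifier(nn.Module):
    """Hypothetical classifier: embedding + encoder + mean pooling + linear head."""

    def __init__(self, vocab_size, d_model, num_heads, num_layers, num_classes):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, d_model)
        layer = nn.TransformerEncoderLayer(d_model, num_heads, batch_first=True)
        self.encoder = nn.TransformerEncoder(layer, num_layers)
        self.head = nn.Linear(d_model, num_classes)

    def forward(self, ids):
        hidden = self.encoder(self.embed(ids))  # (batch, seq_len, d_model)
        pooled = hidden.mean(dim=1)             # average over positions
        return self.head(pooled)                # (batch, num_classes) logits

torch.manual_seed(0)
model = SequenceClassifier(vocab_size=100, d_model=32, num_heads=4, num_layers=2, num_classes=3)
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3)
loss_fn = nn.CrossEntropyLoss()

ids = torch.randint(0, 100, (8, 10))  # random toy batch of token IDs
labels = torch.randint(0, 3, (8,))    # random toy labels

# One training step.
model.train()
logits = model(ids)
loss = loss_fn(logits, labels)
optimizer.zero_grad()
loss.backward()
optimizer.step()
print(logits.shape, loss.item())
```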

7. Conclusion

Transformers have become the backbone of modern NLP, powering its most influential models and applications. This blog served as a primer on how their architecture works and walked through building a basic one step by step.

