1. Introduction

Large language models (LLMs) have reshaped what artificial intelligence can do in natural language processing (NLP). They are applied across most sectors, from content creation and customer support to research and data analysis, and they power everything from automatic responses to customer queries to report summarization and creative writing. While many people know the popular cloud-based models, such as the GPT models from OpenAI, a growing number of users and organizations are running LLMs on local machines, which brings several distinct advantages.

Running LLMs on local infrastructure rather than through a remote cloud service can improve the privacy of sensitive information, because the data never has to be placed on third-party servers. Latency drops because network delays are eliminated. Local deployment also increases flexibility through customization and lowers ongoing costs, since there are no pay-per-use fees. These factors make it attractive for many professionals and organizations, from healthcare providers processing patient information to businesses that want fast and secure customer interaction systems.

This blog elaborates on these advantages and then introduces the top three tools for running LLMs locally: Hugging Face Transformers, GPT4All, and LLaMA (Large Language Model Meta AI). For each tool, we discuss key features, ideal use cases, pros, and cons. We then compare the tools head-to-head and close with tips for selecting the one that fits your task.

Intrigued by the possibilities of AI? Let’s chat! We’d love to answer your questions and show you how AI can transform your industry. Contact Us

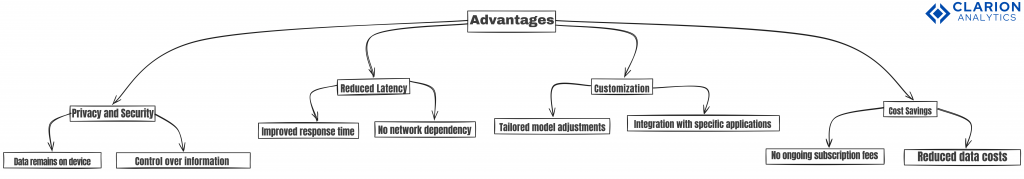

2. Why Run LLMs Locally?

Deploying LLMs in a local environment brings several benefits, especially where data privacy and real-time responsiveness matter. Here is an in-depth look at the main reasons to consider it:

Privacy: Data privacy is increasingly a hard requirement, especially where a breach costs not only money but also reputation. With a cloud-based model, sensitive data, whether customer details, medical records, or proprietary business information, is routed through third-party servers, where it is exposed to security breaches. Even with encryption, data can be vulnerable to sophisticated attacks or mishandling. Local deployment keeps sensitive data within the organization’s infrastructure, which reduces those risks. For instance, a law firm that handles confidential client records can run an LLM locally so that case documents stay private and are never exposed to external networks.

Lower Latency: Low latency is essential for applications where responsiveness is critical, such as live customer support or interactive virtual assistants. With cloud-based models, every interaction incurs a delay that depends on the internet connection and on sending data to and from remote servers. Even when small, this latency can degrade service quality in time-sensitive cases. Running an LLM on a local machine removes the network round trip, because processing and response happen directly on the device; what remains is the model’s own inference time, which depends on the hardware.
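
Whether latency is actually a problem for a given application is easy to measure. The following is a minimal sketch using only the Python standard library; `fake_local_model` is a hypothetical stand-in, not a real inference call, and should be swapped for whatever local or cloud call you want to time.

```python
import statistics
import time


def measure_latency(call, prompt, runs=5):
    """Return the median wall-clock time in seconds of call(prompt) over several runs."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        call(prompt)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def fake_local_model(prompt):
    # Hypothetical stand-in: replace with your real inference call.
    time.sleep(0.01)
    return prompt.upper()


if __name__ == "__main__":
    median_s = measure_latency(fake_local_model, "hello")
    print(f"median latency: {median_s * 1000:.1f} ms")
```

Timing the same prompt against a cloud endpoint and a local model with this helper gives a like-for-like comparison; the median is used so that a single slow run does not dominate the result.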

Customization: Running an LLM directly on your own hardware lets you adapt it, either by fine-tuning it so that responses match a brand voice or by training it on specialized datasets. Customization is often more limited with cloud-based models, because providers lock in presets and the software environment is outside the user’s control. For example, a financial services company can fine-tune a local LLM to recognize finance-specific terminology, so that its customer service line responds with industry-specific knowledge. Local deployment thus offers better control of the model and a way to try custom features without exposing sensitive data to another party.

Cost Savings: Local deployment can yield substantial savings when applications call the model frequently or serve heavy user demand. Cloud LLMs are usually billed per use or by subscription, whereas local models require a large up-front investment in hardware and setup but incur lower long-term expenses. Companies with high usage see cloud operating costs grow rapidly as their needs scale. Deploying an LLM in-house replaces recurring usage fees with a one-time hardware cost that is easier to budget. A publishing company that uses an LLM for content editing and generation, for example, may find local infrastructure cheaper because it avoids monthly cloud fees.
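
The trade-off between an up-front hardware cost and recurring cloud fees can be made concrete with a break-even calculation. This is a rough sketch; all figures below are hypothetical and chosen only to illustrate the arithmetic, so substitute your own quotes and bills.

```python
import math


def break_even_months(hardware_cost, monthly_local_cost, monthly_cloud_cost):
    """Number of whole months after which local deployment costs no more than cloud.

    Returns None when the cloud option is not more expensive per month,
    in which case the hardware never pays for itself.
    """
    monthly_saving = monthly_cloud_cost - monthly_local_cost
    if monthly_saving <= 0:
        return None
    return math.ceil(hardware_cost / monthly_saving)


# Hypothetical figures for illustration only.
months = break_even_months(
    hardware_cost=6000.0,      # one-time purchase of a workstation with a GPU
    monthly_local_cost=100.0,  # electricity and maintenance
    monthly_cloud_cost=600.0,  # pay-per-use API bill
)
print(months)  # 12
```

With these example numbers the hardware pays for itself after 12 months; at low usage the function returns `None`, which signals that staying in the cloud is cheaper.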

3. Selection Criteria for Local LLM Tools

Choosing the right tool requires a careful assessment of technical requirements and practical constraints. These are the main criteria to guide your selection:

Ease of Setup: Setup can be simple or complex. Some tools provide step-by-step guides that make installation easier; others demand specialized knowledge of machine learning or programming. Small teams with minimal technical support will benefit from documentation-rich tools such as Hugging Face. Large teams with extensive LLM experience may instead prioritize features, customization, and performance over ease of setup.

Performance: Performance depends on the model architecture, the optimizations applied, and hardware compatibility. Some tools are optimized for consumer-grade hardware, while others need specialized GPUs or high-performance setups to work well. To run smoothly, users should match their available hardware to the demands of the tool. For example, users without access to high-end GPUs might prefer a tool such as GPT4All, which is optimized for consumer hardware.
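
A quick way to match hardware to a model is to estimate how much memory the weights alone occupy: parameter count times bytes per parameter. The sketch below does that arithmetic. The 7-billion-parameter size and the `headroom` factor of 1.2 are illustrative assumptions, not measurements, and real usage also depends on context length and runtime overhead.

```python
def estimate_weight_memory_gib(num_parameters, bits_per_parameter=16):
    """Approximate memory needed to hold the model weights alone, in GiB."""
    total_bytes = num_parameters * bits_per_parameter / 8
    return total_bytes / (1024 ** 3)


def fits_in_memory(num_parameters, available_gib, bits_per_parameter=16, headroom=1.2):
    """Check whether the weights fit, with headroom for activations and caches.

    The default headroom factor is a rough assumption, not a measured value.
    """
    needed = estimate_weight_memory_gib(num_parameters, bits_per_parameter) * headroom
    return needed <= available_gib


seven_billion = 7_000_000_000
print(round(estimate_weight_memory_gib(seven_billion, 16), 1))  # 13.0
print(round(estimate_weight_memory_gib(seven_billion, 4), 1))   # 3.3
print(fits_in_memory(seven_billion, available_gib=8, bits_per_parameter=4))  # True
```

The example shows why quantized models matter on consumer hardware: the same model that needs about 13 GiB at 16 bits per parameter needs roughly a quarter of that at 4 bits.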

Compatibility: Alongside hardware support, operating system compatibility is essential. Most users work on one particular setup and may need GPU acceleration. Some tools run across Windows, Linux, and macOS, while others work best in specific environments. Checking compatibility before installation helps you avoid unexpected behavior afterward.
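
Before installing anything, it helps to record what the machine actually is. The following sketch gathers a few facts with the Python standard library. Looking for `nvidia-smi` on the `PATH` is only a heuristic for the presence of an NVIDIA driver, and the function name `describe_environment` is made up for this example.

```python
import platform
import shutil


def describe_environment():
    """Collect basic facts about the machine that affect tool compatibility."""
    return {
        "os": platform.system(),             # e.g. "Linux", "Windows", "Darwin" (macOS)
        "architecture": platform.machine(),  # e.g. "x86_64", "arm64"
        "python": platform.python_version(),
        # Heuristic only: nvidia-smi on PATH suggests an NVIDIA driver is installed.
        "nvidia_smi_found": shutil.which("nvidia-smi") is not None,
    }


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

Comparing this output with a tool's documented requirements is usually enough to spot an unsupported operating system or a missing GPU driver early.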

Customization Potential: The degree of customization varies widely, from coarse configuration options to fine-grained fine-tuning. If the use case requires fine-tuned responses or training on highly specialized data, a tool like Hugging Face is a natural choice. When the application is straightforward, a simpler, user-friendly tool like GPT4All could suffice.

Community Support: A vibrant community can be of immense help, through forums, user guides, and shared resources. Open-source tools tend to have large, devoted user bases that put time into maintaining, upgrading, and refining the platform. Hugging Face is one example: its active community offers hundreds of tutorials and forum discussions, along with third-party plugins that add functionality to the platform.

By balancing these factors, users can match their needs to the right tool for local LLM deployment.

4. Top 3 LLM Tools for Running Models Locally

Tool #1: Hugging Face Transformers

Overview: Hugging Face Transformers is one of the most widely used open-source libraries for natural language processing, backed by a repository of models spanning multiple domains. It supports NLP tasks such as translation, summarization, and question answering through pre-trained models, with the option of fine-tuning.

Key Features:

- More than 10,000 models in a library for various NLP tasks.

- Works well with the PyTorch and TensorFlow frameworks.

- Extensive documentation with many tutorials.

- Supports architectures like BERT, GPT, and T5.

Pros:

- Multi-model library, high customization capability.

- Large community of users and developers, with strong support.

- Frequent updates and integration with popular ML frameworks.

Cons:

- Hardware requirements are relatively high, particularly for larger models.

- Using the library effectively requires prior experience with NLP.

Recommended for developers looking for a complete NLP toolkit with fine-tuning capabilities. It best suits applications that require heavy customization, such as projects involving complex, domain-specific language.
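
To give a feel for the library, here is a minimal sketch of local text generation with the Transformers `pipeline` API. It assumes `transformers` and a backend such as PyTorch are installed; the small `distilgpt2` model and the wrapper name `generate_locally` are choices made for this example, not recommendations from the article.

```python
def generate_locally(prompt, model_name="distilgpt2", max_new_tokens=40):
    """Generate a continuation of `prompt` with a model running on this machine."""
    # Imported here so the function can be defined without the library installed.
    from transformers import pipeline

    # Assumption: model_name is a small model picked only for a quick demo.
    generator = pipeline("text-generation", model=model_name)
    outputs = generator(prompt, max_new_tokens=max_new_tokens)
    return outputs[0]["generated_text"]
```

Calling `generate_locally("Running language models locally")` downloads the model weights on first use and stores them in a local cache; inference itself then runs on your own machine.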

Tool #2: GPT4All

Overview: GPT4All is a project focused on accessibility that offers a simple path to local LLM deployment. Built around privacy and simplicity, it is optimized for local hardware, with efficient models and minimal setup complexity.

Key Features:

- Lightweight models that reduce hardware requirements.

- Local-first design that protects user privacy.

- Easy installation and setup.

Pros:

- Low hardware requirements.

- Privacy-focused: all processing happens locally.

- User-friendly and accessible for new LLM users.

Cons:

- Has a relatively limited model selection and functionality.

- It lacks some customization options that are available on larger platforms.

GPT4All is a good fit for users who want privacy and ease of use. It suits applications that need moderate NLP capabilities with minimal configuration, such as simple customer service automation and basic data processing.
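
GPT4All also ships Python bindings, so a local chat call can be only a few lines. The sketch below assumes the `gpt4all` package is installed. The model file name is an assumption taken as an example and may not match the models currently offered, and the wrapper name `ask_gpt4all` is hypothetical.

```python
def ask_gpt4all(prompt, model_file="orca-mini-3b-gguf2-q4_0.gguf", max_tokens=200):
    """Send one prompt to a GPT4All model running on the local machine."""
    # Imported here so the function can be defined without the package installed.
    from gpt4all import GPT4All

    # Assumption: model_file is an example name; pick one from the GPT4All model list.
    model = GPT4All(model_file)
    with model.chat_session():
        return model.generate(prompt, max_tokens=max_tokens)
```

By default the bindings download the model file on first use if it is not already present; after that, prompts and responses stay on the device.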

Tool #3: LLaMA (Large Language Model Meta AI)

Overview: LLaMA is a family of openly available language models from Meta AI, aimed at advanced NLP research and high-performance applications. Although it requires strong hardware, its robust performance has made it popular with academic and research-oriented users.

Key Features:

- Research-oriented, with deep experimentation capabilities.

- Strong customization support for specific scenarios.

- Advanced NLP features for large-scale processing.

Pros:

- State-of-the-art performance for complex tasks

- Deep customization and fine-tuning

- Active research communities that improve and extend the model.

Cons:

- High hardware demands, often requiring multiple GPUs.

- Installation is complicated and may deter new users.

Best for research institutions, universities, and large companies with substantial computing power, especially those that need to run fine-tuned, high-performance applications or large-scale computations on complex NLP tasks.
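
One common way to run a LLaMA model locally is through the Hugging Face Transformers library, sketched below. This is an illustration under several assumptions: `transformers`, PyTorch, and `accelerate` (needed for `device_map="auto"`) are installed, you have been granted access to the gated model repository, and the model ID shown is only an example. The function name `generate_with_llama` is hypothetical.

```python
def generate_with_llama(prompt, model_id="meta-llama/Llama-2-7b-hf", max_new_tokens=64):
    """Generate text with a LLaMA-family checkpoint loaded through Transformers."""
    # Imported here so the function can be defined without the libraries installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Assumption: model_id is an example; access to it must be requested first.
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # device_map="auto" spreads the weights across the available GPUs and CPU.
    model = AutoModelForCausalLM.from_pretrained(model_id, device_map="auto")

    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Letting the library place the weights automatically is convenient on multi-GPU machines, which is the kind of hardware this tool is described as needing.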

5. Comparison Table of the Top 3 Tools

| Feature | Hugging Face Transformers | GPT4All | LLaMA |
| --- | --- | --- | --- |
| Setup Ease | Moderate | Easy | Moderate to Complex |
| Performance | High | Moderate | High |
| Compatibility | Wide Range | Wide Range | Requires high-performance hardware |
| Customization | Extensive | Limited | Extensive |
| Community Support | Strong | Moderate | Growing |
| Best Use Cases | Diverse NLP Tasks | Privacy-focused projects | Research and large-scale NLP |
| Pros | Large model library | Lightweight and private | High performance and flexibility |
| Cons | Hardware demands | Limited model options | High hardware requirements |

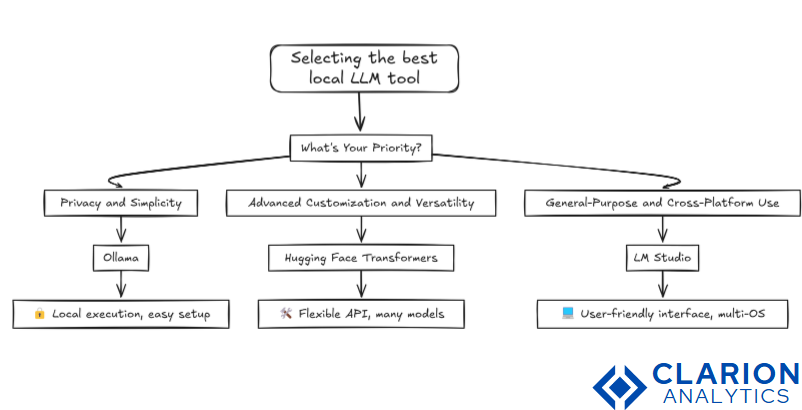

6. Choosing the Right Tool for Your Needs

Here is how to choose the right local LLM tool based on your privacy needs, performance requirements, and hardware capabilities. The guide below matches each tool to common user scenarios:

- Privacy: GPT4All suits those who place a high priority on privacy. It was designed to be local-first, keeping sensitive data under the user’s control, which matters especially in the healthcare and finance sectors.

- Customization: Hugging Face Transformers offers extensive customization, making it apt for complex NLP applications and tasks that require fine-tuning of models. Because it supports a wide range of models and frameworks, a deployment can be tailored closely to specific requirements.

- High performance: LLaMA performs very well when you have the processing power available. It is best applied to large dataset analysis and complex processing, and it suits research or academic users who need scalability and flexibility.

Carefully weigh your application’s use case, budget, and technical resources, and you can be confident of choosing the right LLM tool for local deployment.
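
The guidance above can be condensed into a small rule of thumb. The sketch below is only one way to encode it: the function name `recommend_tool`, the three priority labels, and the fallback used when strong hardware is missing are all assumptions made for illustration.

```python
def recommend_tool(priority, has_high_end_gpus=False):
    """Suggest a tool from a main priority: 'privacy', 'customization', or 'performance'."""
    if priority == "privacy":
        return "GPT4All"
    if priority == "customization":
        return "Hugging Face Transformers"
    if priority == "performance":
        # LLaMA is described as needing strong hardware; the fallback is an
        # assumption of this sketch, not a rule from the article.
        return "LLaMA" if has_high_end_gpus else "Hugging Face Transformers"
    raise ValueError(f"unknown priority: {priority!r}")


print(recommend_tool("privacy"))                              # GPT4All
print(recommend_tool("performance", has_high_end_gpus=True))  # LLaMA
```

A real decision will weigh several of these factors at once, along with budget, so treat the output as a starting point rather than a verdict.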

7. Conclusion

Running LLMs locally offers a number of benefits, particularly for users concerned with data privacy, response times, and customization. Hugging Face Transformers is ideal for extensive NLP capabilities and model adaptability; GPT4All is a strong choice for lightweight, privacy-focused applications; and LLaMA performs well for large-scale research. With the right tool, users can tap the full power of local LLMs and build a wide range of innovative and secure AI solutions.

Also Read:

To get the most out of any LLM setup, it’s important to build a strong foundation with Large Language Models 101: NLP Fundamentals for Smarter AI Solutions, which explains how modern language models work. From there, Mastering Transformers: Unlock the Magic of LLM Coding 101 and Attention Mechanism 101: Mastering LLMs for Breakthrough AI Performance dive into the core architecture and mechanisms that power intelligent text generation. For practical customization, Llama Fine-Tuning: Your Step-by-Step Guide shows how to adapt an LLM for domain-specific tasks, while Mastering Mitigation of LLM Hallucination: Critical Risks and Proven Prevention Strategies addresses reliability and trust in real-world deployments. Finally, Agentic AI vs Generative AI: Unleashing the Future of Intelligent Systems provides a broader perspective on how LLM-driven systems fit into the evolving AI landscape.
