Introduction

The rapid growth of AI has brought conversational agents, in the form of chatbots, into almost every area, from customer service to lead generation. However, the next generation of chatbots is no longer about merely answering questions; it is about giving specific, contextual, knowledge-based responses that add real value for users.

This tutorial shows how to develop a knowledge-based chatbot using LangChain, an open-source framework that simplifies building applications powered by large language models (LLMs). LangChain lets developers combine advanced language models with a custom knowledge base to deliver consistent, reliable results. Decision-makers, in turn, can use such bots to improve the user experience and automate routine operational tasks.

Whether you are a developer or a CXO, this guide offers practical insight into how LangChain can be used to build and optimize a knowledge-based chatbot. By the end, you'll be able to structure a chatbot that draws on external knowledge sources and provides responses tailored to your industry's needs.

What is LangChain?

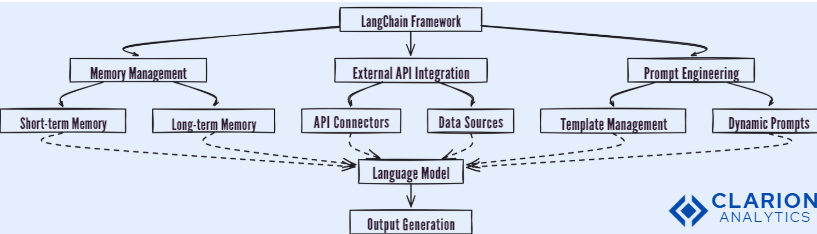

LangChain is a framework for building applications on top of large language models that go beyond simple text generation. On their own, most LLMs generate text or perform other tasks based only on the user's input. LangChain goes further by connecting the model to external knowledge sources and adding features such as conversational memory, prompt templates, and more.

At its core, LangChain lets developers build applications that connect deeply with data. A LangChain-powered chatbot can, for instance, query a proprietary knowledge base or call external APIs to fetch real-time data, which makes it far more useful than a traditional scripted chatbot. In effect, you give your chatbot the ability to fetch the right information, reason over it, and recall earlier parts of the conversation.

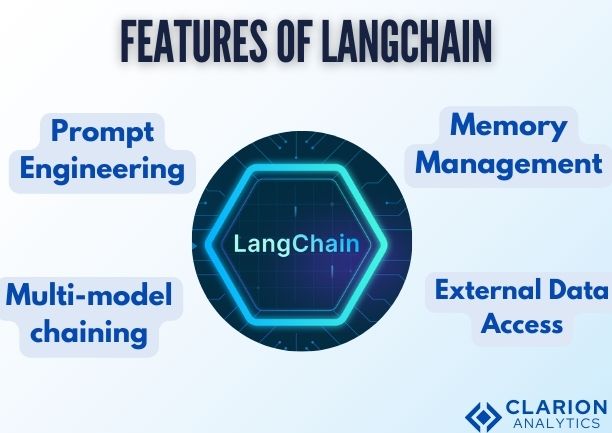

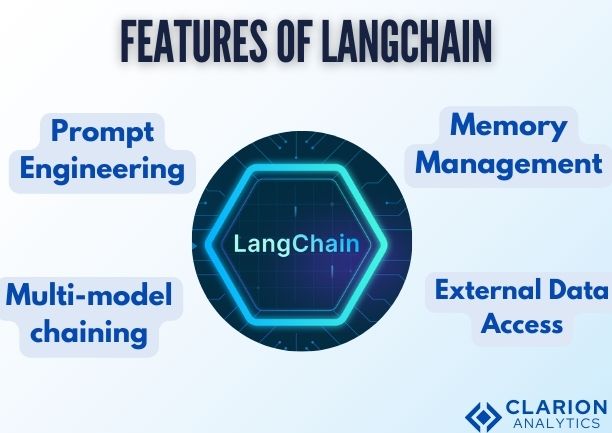

Some of LangChain's most useful features are:

- Prompt Engineering: By controlling how questions are phrased to the model and how retrieved data is presented, you get more contextually accurate answers. This is critical for any domain-specific chatbot where answers need to be precise.

- Memory Management: LangChain supports memory mechanisms, so your chatbot can remember earlier questions or the whole conversation thread, which makes dialogue more fluent and coherent.

- Multi-model Chaining: LangChain lets you chain multiple models or tools together, routing user inputs through different LLMs, or through external APIs, depending on the complexity of the request.

- External Data Access: You can connect your chatbot to external APIs or proprietary databases. This is useful for use cases where real-time data must be provided or when information in specific datasets needs to be pulled.

- Document Processing: LangChain can process large volumes of unstructured knowledge sources, from parsing documents and extracting text to summarization, making varied forms of data easier to digest and apply when building the chatbot's knowledge base.

These features make LangChain not just a chatbot toolkit but a complete framework for building applications that rely on language models and large knowledge sources.
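To make the chaining idea concrete, here is a minimal plain-Python sketch that passes a question through three steps: retrieval, prompt construction, and a model call. It deliberately avoids LangChain's own classes, whose APIs shift between releases; the knowledge-base entries and `fake_llm` are hypothetical stand-ins for what LangChain would provide as a retriever, a prompt template, and a chat model.

```python
from typing import Callable, Dict, List

# A "step" takes the chain's state (a dict) and returns the updated state.
Step = Callable[[Dict], Dict]

# Hypothetical knowledge base, for illustration only.
KNOWLEDGE_BASE = {
    "warranty": "All products include a two-year limited warranty.",
    "returns": "Items can be returned within 30 days of delivery.",
}

def retrieve(state: Dict) -> Dict:
    """Naive retrieval: keep entries whose key appears in the question."""
    question = state["question"].lower()
    state["context"] = [text for key, text in KNOWLEDGE_BASE.items() if key in question]
    return state

def build_prompt(state: Dict) -> Dict:
    """Prompt-engineering step: ground the model in the retrieved context."""
    context = "\n".join(state["context"]) or "No relevant context found."
    state["prompt"] = (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {state['question']}"
    )
    return state

def fake_llm(state: Dict) -> Dict:
    """Stand-in for a real model call: returns the first context entry, if any."""
    state["answer"] = state["context"][0] if state["context"] else "I don't know."
    return state

def run_chain(steps: List[Step], state: Dict) -> Dict:
    """Pass the state through each step in order, like links in a chain."""
    for step in steps:
        state = step(state)
    return state

result = run_chain([retrieve, build_prompt, fake_llm], {"question": "How long is the warranty?"})
print(result["answer"])
```

Swapping `fake_llm` for a real model call, or inserting an extra step such as an API lookup, only changes the list passed to `run_chain`; that is the modularity the features above are meant to provide.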

Are you intrigued by the possibilities of AI? Let’s chat! We’d love to answer your questions and show you how AI can transform your industry. Contact Us

Why Use LangChain for Knowledge-Based Chatbots?

A knowledge-based chatbot is quite different from a simple chatbot deployment: it must understand domain-specific information and respond based on it. This is where LangChain really shines.

Benefits for Developers

The bar has moved beyond simple question-answering chatbots to bots that comprehend deeper questions. Knowledge-based chatbots interpret questions, fetch relevant information, and do so quickly. Here is why LangChain makes this easier:

- Pre-built Modules: LangChain is modular by design, so developers can pick and choose the components they need, such as prompt templates, memory, and data ingestion, and leave out the rest. This saves a great deal of time and lets them build a working chatbot quickly.

- Seamless API Integrations: LangChain makes it easy to integrate external APIs, so developers can extend their chatbot's knowledge beyond static documents, whether that means connecting to a financial data source for real-time stock prices or pulling records from an internal CRM.

- Easy Scaling: LangChain lets you start small with a simple bot and grow the architecture as the chatbot's complexity increases. You can add features incrementally, which makes it well suited to iterative development cycles.

Benefits for CXOs

For decision-makers, the development of a knowledge-based chatbot can have a significant impact on operations and customer experience. LangChain offers several strategic advantages:

- Improved User Experience: A well-designed chatbot responds to user requests promptly with context-sensitive answers, so customers are more satisfied and contact human agents less often for routine inquiries.

- Operational Efficiency: Tasks such as answering FAQs, handling customer service inquiries, or even data entry can be automated with a LangChain-driven chatbot, saving your organization time and resources.

- Custom Solutions: LangChain is highly customizable. Whether you need an internal tool, such as a portal through which employees query internal documents, or a customer-facing assistant, the framework can be tailored to your particular business.

Step-by-Step Guide to Building a Custom Knowledge-Based Chatbot

Now that we've covered LangChain's advantages, let's build a chatbot. Here's a step-by-step guide to creating a custom knowledge-based chatbot with LangChain:

- Creating the Environment

First, you need to set up the right development environment. To begin, you'll need the following:

- Python Environment: This guide uses LangChain's Python library, so make sure you have a recent Python 3 release installed (check the LangChain documentation for the minimum supported version). Set up a virtual environment with `venv` or `conda` to manage dependencies:

```
python -m venv langchain_env
source langchain_env/bin/activate    # Linux or macOS
.\langchain_env\Scripts\activate     # Windows
```

- Install LangChain:

```
pip install langchain
```

- Install the OpenAI SDK or Hugging Face Transformers: Depending on your language model, you might integrate GPT models (from OpenAI) or Hugging Face models. Use one of the following commands. Recent LangChain releases also ship provider integrations as separate packages (for example, `langchain-openai`), so install the one that matches your provider.

```
pip install openai
# OR
pip install transformers
```

- Additional Libraries: You may also need libraries such as Flask for building a web interface and `requests` for calling external APIs:

```
pip install Flask requests
```

- Designing the Chatbot Architecture

Good architecture design keeps the chatbot scalable, preserves the flow of the conversation, and ensures that it gives correct responses.

- The first step is structuring the knowledge base and the conversation flow:

- Setting up the Knowledge Base: A knowledge-based chatbot depends heavily on the information sources available to it. These can be static, such as documents or a database, or dynamic, such as APIs. They can be structured as follows:

- Static Knowledge Base: Store documents like manuals, policies, or guidelines that a bot can refer to.

- Dynamic Knowledge Base: Include APIs that fetch live data, such as real-time stock prices or medical records, in response to the user's questions.

- Conversation Flow: Design conversations using LangChain's memory management features. You may need the chatbot to retain context across multiple interactions, which is essential for a personal, context-aware experience. For example, a customer asks how long the warranty on a product is, and the chatbot later refers back to that question when recommending similar products or services.
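The conversation-flow idea can be sketched as a sliding-window memory: keep the last few exchanges and prepend them to the next prompt. This is a plain-Python illustration of the concept, not LangChain's memory API (which has changed across versions); the class name, the `max_turns` parameter, and the X100 product are hypothetical.

```python
from collections import deque
from typing import Deque, Tuple

class ConversationMemory:
    """Sliding-window memory: keeps only the last `max_turns` user/bot exchanges."""

    def __init__(self, max_turns: int = 5) -> None:
        # deque(maxlen=...) silently drops the oldest turn once the window is full.
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add(self, user_msg: str, bot_msg: str) -> None:
        self.turns.append((user_msg, bot_msg))

    def as_prompt_prefix(self) -> str:
        """Render remembered turns so they can be prepended to the next prompt."""
        return "\n".join(f"User: {u}\nBot: {b}" for u, b in self.turns)

memory = ConversationMemory(max_turns=3)
memory.add("How long is the warranty on the X100?", "The X100 has a two-year warranty.")
memory.add("Does it cover accidental damage?", "No, only manufacturing defects.")

# The warranty exchange is still in the window, so the model can refer back to it.
next_question = "Can you recommend something similar?"
prompt = f"{memory.as_prompt_prefix()}\nUser: {next_question}\nBot:"
print(prompt)
```

A window keeps prompts short, but once the warranty exchange falls out of it the bot forgets that detail; summarizing older turns is a common alternative when longer context matters.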

- Training the Chatbot with Domain-Specific Data

A general-purpose chatbot often falls short in specialized domains such as healthcare, finance, or legal services. Populating the chatbot with domain-relevant data helps ensure that its responses are accurate and applicable to that domain.

- Data Collection: First, start collecting relevant datasets, documents, or APIs that would be your chatbot’s knowledge base. For example, in finance, you could use regulatory documentation, financial statements, or even real-time stock data as a basis of knowledge.

- Data Preprocessing: Raw sources such as PDF or HTML files must be converted into clean, machine-readable text. To do this, preprocess the data as follows:

- Text Cleaning: Remove irrelevant characters, symbols, and unwanted whitespace.

- Tokenization: Break the text into tokens (words or phrases) for the language model.

- Embedding: Convert documents into vector embeddings so the chatbot can later retrieve the relevant information efficiently.

- Model Refinement: Fine-tune a pre-trained LLM, such as a GPT model, on your dataset so the chatbot answers industry-specific queries appropriately. The fine-tuning itself happens outside LangChain, for example with Hugging Face tooling or a model provider's fine-tuning service; LangChain can then call the fine-tuned model like any other.
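The cleaning, tokenization, and embedding steps can be sketched end to end with the standard library. In a real LangChain pipeline you would typically use a document loader, a text splitter, an embedding model, and a vector store; here a term-frequency vector stands in for a learned embedding and the documents are made up, so treat this as an illustration of the flow rather than production retrieval.

```python
import math
import re
from collections import Counter
from typing import Dict, List

def clean_text(text: str) -> str:
    """Text cleaning: replace non-alphanumeric symbols, collapse whitespace, lowercase."""
    text = re.sub(r"[^A-Za-z0-9\s]", " ", text)
    return re.sub(r"\s+", " ", text).strip().lower()

def tokenize(text: str) -> List[str]:
    """Tokenization: a whitespace split (real models use subword tokenizers)."""
    return text.split()

def embed(tokens: List[str]) -> Dict[str, float]:
    """Toy 'embedding': a term-frequency vector standing in for an embedding model."""
    return dict(Counter(tokens))

def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b.get(t, 0.0) for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Hypothetical finance documents.
documents = [
    "Quarterly filings must be submitted within 45 days!",
    "Our savings account pays 3% interest, compounded monthly.",
    "Wire transfers over $10,000 require additional verification.",
]
# Index once: clean -> tokenize -> embed each document.
index = [(doc, embed(tokenize(clean_text(doc)))) for doc in documents]

def retrieve(query: str, k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(tokenize(clean_text(query)))
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

print(retrieve("How much interest does the savings account pay?"))
```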

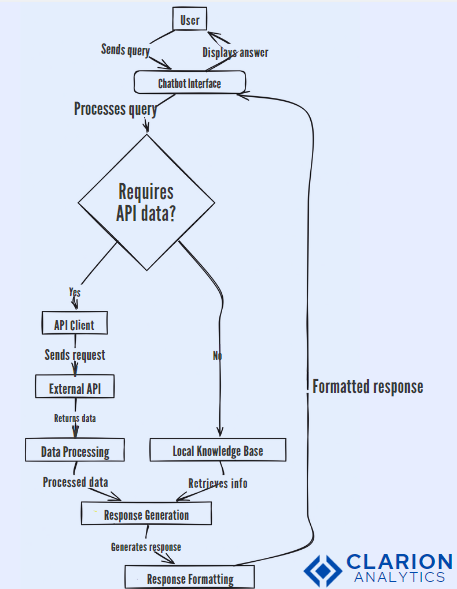

- Integrating LangChain with External APIs

One of LangChain's strengths is its ability to interface with external knowledge sources via APIs. This extends the chatbot's functionality, since it can provide real-time information or fetch data from proprietary databases.

- Select Your APIs: Depending on your use case, decide which APIs the chatbot will use. For example:

- A financial chatbot might connect to an API that provides a live stock market feed.

- A healthcare chatbot might query a medical database to answer patients' questions.

- Calling APIs: Use Python's `requests` library to call an API. For example, you could connect to a stock price API as follows (the URL is a placeholder):

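```
import requests

def get_stock_price(symbol):
    """Fetch quote data for a ticker symbol from a (placeholder) stock price API."""
    url = f"https://api.example.com/stocks/{symbol}"
    response = requests.get(url, timeout=10)  # avoid hanging on a slow API
    response.raise_for_status()  # raise on HTTP errors instead of parsing an error body
    return response.json()
```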

- Parsing API Responses: Once an API returns its data, LangChain can help parse and format it into a conversational response. This is especially useful when raw API data needs to be simplified before it is explained to users.

- Incorporating API Results in Conversations: LangChain lets you bring API results directly into the flow of a dialogue. If a user asks for the price of a specific stock, the chatbot can query the stock price API and return an up-to-date answer promptly.
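Here is a minimal sketch of routing a price question to the API and turning the JSON into a reply. It assumes, hypothetically, that the placeholder API returns a payload with `symbol`, `price`, and `currency` fields, and it uses an offline stub in place of `get_stock_price()`. In LangChain this decision is usually delegated to tools that the model can choose to call; the hard-coded regex router here just makes the flow visible.

```python
import re
from typing import Callable, Dict, Optional

def fetch_stock_stub(symbol: str) -> Dict:
    """Offline stand-in for get_stock_price(); the payload shape is an assumption."""
    return {"symbol": symbol, "price": 189.5, "currency": "USD"}

# Hypothetical routing rule: "price of <TICKER>" (1-5 letters), case-insensitive.
PRICE_PATTERN = re.compile(r"price of ([a-z]{1,5})\b", re.IGNORECASE)

def answer(question: str, fetch: Callable[[str], Dict] = fetch_stock_stub) -> Optional[str]:
    """Route price questions to the API and phrase the JSON as a conversational reply."""
    match = PRICE_PATTERN.search(question)
    if match is None:
        return None  # Not a price question: the caller can fall back to the knowledge base.
    data = fetch(match.group(1).upper())
    return f"{data['symbol']} is currently trading at {data['price']:.2f} {data['currency']}."

print(answer("What is the price of aapl today?"))
```

Passing the real `get_stock_price` as `fetch` would connect this to a live API, provided its response actually contains those fields.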

- Testing and Evaluating Chatbot Performance

Building a chatbot is only the first part of the job. To make sure it works as intended, you need thorough testing and evaluation. This is especially vital for knowledge-based chatbots, whose responses must be precise and relevant.

- Simulated User Interactions: Simulate conversations that mimic real scenarios, using test scripts that walk the chatbot through a series of typical user interactions.

- Evaluation Metrics:

- Response Accuracy: How often does the chatbot give the correct answer to users' queries?

- Latency: Measure how long the chatbot takes to respond. This is particularly important when external APIs are involved.

- User Satisfaction Scores: Collect ratings from users after an interaction session. These can give you valuable insight into the chatbot's performance.

- A/B Testing: Run A/B tests in which users interact with different variations of the same chatbot to determine which performs better. You can use this to test aspects such as conversation flow, speed, and user satisfaction.
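A small scripted harness can combine simulated interactions with the accuracy and latency metrics listed above. This sketch uses a hypothetical `demo_bot` and a simple substring check for correctness; real evaluations often use stricter graders or human review.

```python
import time
from statistics import mean
from typing import Callable, Dict, List, Tuple

def evaluate(bot: Callable[[str], str], cases: List[Tuple[str, str]]) -> Dict[str, float]:
    """Run scripted test cases and report accuracy and latency."""
    correct = 0
    latencies = []
    for question, expected in cases:
        start = time.perf_counter()
        reply = bot(question)
        latencies.append(time.perf_counter() - start)
        # Simple containment check: the expected phrase must appear in the reply.
        if expected.lower() in reply.lower():
            correct += 1
    return {
        "accuracy": correct / len(cases),
        "mean_latency_s": mean(latencies),
        "max_latency_s": max(latencies),
    }

def demo_bot(question: str) -> str:
    """Hypothetical bot under test: only knows about the warranty."""
    if "warranty" in question.lower():
        return "The warranty lasts two years."
    return "Sorry, I am not sure."

cases = [
    ("How long is the warranty?", "two years"),
    ("What is the return window?", "30 days"),
]
report = evaluate(demo_bot, cases)
print(report)
```

Running the same case list against two bot variants gives you the raw numbers for an A/B comparison.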


Advanced Techniques for Optimizing Chatbot Performance

Now that you have a working chatbot, the next step is optimizing its performance. Several techniques can improve how your chatbot behaves; some are applied within LangChain itself, while others, such as model training, happen alongside it.

Three useful approaches are reinforcement learning, transfer learning, and response-time optimization:

- Reinforcement Learning (RL): With RL, your chatbot learns from interactions with users. User feedback acts as a reward or penalty signal that is used to adapt and improve the system over time. For example, if a user rates a response poorly, the system learns to avoid offering a similar response next time.

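LangChain does not train models itself, so RL is applied around it: log user interactions and ratings from your LangChain application, then feed that data into a training loop that fine-tunes the underlying model, either periodically or, in more advanced setups, continuously.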

- Transfer Learning: Transfer learning fine-tunes a model trained for one domain so it can be used in another. This is helpful when moving from a general-purpose chatbot to a domain-specific one. For instance, you could take a general GPT model and fine-tune it for legal document processing, saving considerable time and effort compared with training from scratch. The fine-tuning is done with external tools; LangChain then lets you plug the resulting model into your chatbot.

- Optimizing Response Time: Users expect immediate responses, and slow replies are frustrating. You can reduce latency by:

- Caching Frequently Asked Queries: Store frequently asked questions and their answers in memory so similar queries can be answered without reprocessing.

- Edge Computing: Deploy models at the edge, or use cloud regions close to your users, to reduce latency and improve response times.

Caching can also be applied to critical API responses: preloading and caching them reduces reliance on real-time API calls and improves the user experience.
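Here is a minimal sketch of query caching with an expiry time, so that cached API data does not go stale indefinitely. The `QueryCache` class and its `ttl_s` parameter are hypothetical. LangChain also ships built-in LLM response caching (for example, `InMemoryCache` registered with `set_llm_cache`), so check the documentation for your installed version before rolling your own.

```python
import time
from typing import Callable, Dict, Optional, Tuple

class QueryCache:
    """Caches answers for normalized queries and expires them after `ttl_s` seconds."""

    def __init__(self, ttl_s: float = 300.0, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_s = ttl_s
        self.clock = clock  # injectable so tests can control time
        self._store: Dict[str, Tuple[float, str]] = {}

    @staticmethod
    def _key(query: str) -> str:
        # Normalize case and whitespace so trivially different phrasings share an entry.
        return " ".join(query.lower().split())

    def get(self, query: str) -> Optional[str]:
        key = self._key(query)
        entry = self._store.get(key)
        if entry is None:
            return None
        stored_at, answer = entry
        if self.clock() - stored_at > self.ttl_s:
            del self._store[key]  # stale entry: force a fresh lookup
            return None
        return answer

    def put(self, query: str, answer: str) -> None:
        self._store[self._key(query)] = (self.clock(), answer)

def cached_answer(cache: QueryCache, query: str, compute: Callable[[str], str]) -> str:
    """Serve a fresh cached answer, or compute it (LLM or API call) and store it."""
    hit = cache.get(query)
    if hit is not None:
        return hit
    answer = compute(query)
    cache.put(query, answer)
    return answer

cache = QueryCache(ttl_s=60)
print(cached_answer(cache, "What are your opening hours?", lambda q: "We are open 9am-5pm."))
# Same question with different case/spacing: served from the cache, so this lambda is never called.
print(cached_answer(cache, "what are your  opening hours?", lambda q: "not called"))
```

Normalizing case and whitespace only catches near-identical wording; matching paraphrased questions would require comparing embeddings instead.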

Common Challenges and How to Overcome Them

Building a robust knowledge-based chatbot comes with its share of problems. Here are some common ones and how to overcome them:

- Data Bias: Of the many challenges LLMs bring, data bias is perhaps the most troublesome. If your chatbot is built on biased data, its responses will be biased and can even be offensive at times. To counter data bias:

- Diverse Datasets: Make sure the training data is diverse in viewpoints, locations, and cultures.

- Regular Audits: Periodically audit the chatbot's responses to check that they meet your ethical standards.

- Model Fine-Tuning: Periodically fine-tune the model with new, less biased data to keep it up to date and more balanced.

- Scalability: As your chatbot grows in popularity, handling the increased number of requests can be challenging. To ensure your chatbot scales efficiently:

- Cloud Deployment: Deploy on serverless platforms such as AWS Lambda or Google Cloud Functions so the chatbot scales automatically with the number of users.

- Load Balancing: Use load balancers to distribute queries across multiple instances of the chatbot.

- Database Optimization: Make sure your databases and APIs can handle many concurrent requests without creating bottlenecks.

- Security: If the chatbot is used to access sensitive information, as is common in the healthcare and financial sectors, security becomes a major concern. You can reduce the risk with the following measures:

- Data Encryption: Encrypt all sensitive data both in transit and at rest.

- Authentication and Authorization: Use multi-factor authentication together with role-based access control so that only authorized people can access the chatbot and the sensitive data behind it.

- Regulatory Compliance: Follow the regulations that apply to your industry, such as GDPR for data privacy and HIPAA for healthcare data.
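Role-based access control matters most at the retrieval step: once a restricted document is retrieved, its text ends up in the prompt. The sketch below filters documents by role before they can reach the model. The `KBDocument` and `User` types and the sample records are hypothetical, and this is not a substitute for encryption, MFA, or a compliance review.

```python
from dataclasses import dataclass, field
from typing import List, Set

@dataclass
class KBDocument:
    text: str
    allowed_roles: Set[str] = field(default_factory=set)

@dataclass
class User:
    name: str
    roles: Set[str]

def authorized_documents(user: User, docs: List[KBDocument]) -> List[KBDocument]:
    """Role-based access control: keep documents readable by at least one of the user's roles."""
    return [doc for doc in docs if doc.allowed_roles & user.roles]

# Hypothetical records and users.
docs = [
    KBDocument("Clinic opening hours: 8am-6pm.", {"patient", "clinician"}),
    KBDocument("Lab results for patient 1042 (restricted).", {"clinician"}),
]
patient = User("alex", {"patient"})
clinician = User("dr_lee", {"clinician"})

# Filter first, then run retrieval and prompting only over what the user may see.
print([doc.text for doc in authorized_documents(patient, docs)])
```

Enforce this check in application code on every request; an instruction in the prompt such as "do not reveal lab results" is not an access control.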

Real-World Applications of LangChain Chatbots

LangChain-driven chatbots are already being applied in many domains. Let's review some real-world applications and then look at future trends.

- Industry Use Cases

- Healthcare: Chatbots are transforming patient engagement. They can book appointments, share information about medications, and help with chronic disease management. When integrated with electronic health records (EHR) and real-time data sources, a chatbot can be very helpful to both healthcare providers and patients.

For example, a hospital could deploy a LangChain-powered chatbot to direct patients to the appropriate departments based on their conditions, book appointments with doctors, and answer frequently asked questions about insurance and billing.

- Finance: The finance sector is among the greatest beneficiaries of knowledge-based chatbots. They can provide real-time financial data, offer customized financial guidance, and walk customers through complex banking services.

For example, a bank could deploy a LangChain chatbot that gives customers precise, up-to-date information on stock prices and breaking financial news, along with personalized insights on their portfolios.

- Retail and E-commerce: Retailers can use chatbots to help customers find products, track orders, and receive personalized recommendations based on past purchases.

For example, an e-commerce website could integrate a LangChain chatbot to help users search thousands of products, recommend related items, and answer questions about returns.

- Future Trends

The future of LangChain chatbots looks bright, given recent advances in AI and NLP:

- Integration with IoT: LangChain-based chatbots could soon interact with Internet of Things devices, for example a smart refrigerator that suggests recipes based on the ingredients it contains.

- Virtual Assistants: Because LangChain can handle complex workflows, it could bring more sophisticated, context-aware conversations to virtual assistants such as Alexa or Google Assistant.

- Decision-Making Support: Chatbots may go beyond providing information to support decision-making in industries such as law, healthcare, and finance. For example, a chatbot might help lawyers prepare for a trial by surfacing similar cases and the laws relevant to it.

Conclusion

In this guide, we have explored how to use LangChain to build robust, custom knowledge-based chatbots. Whether you are a developer looking for a smoother development process or a CXO looking to improve customer interactions, LangChain offers a rich set of features: a modular architecture, easy API integration, and strong language model capabilities. Together, these make chatbot development more accessible, scalable, and efficient.

As we've seen, knowledge-based chatbots have wide-ranging applications across healthcare, finance, retail, and beyond. By optimizing response accuracy, using domain-specific data, and drawing on external knowledge sources, you can turn a chatbot into more than an automation tool: it becomes a practical asset for customers, employees, and the business as a whole.

