Introduction

- Large Language Models (LLMs) have revolutionized NLP by enabling more accurate and context-aware prediction, translation, and generation of text.

- This success is largely attributed to the attention mechanism, which enables models to identify and concentrate on the most relevant parts of the input data.

- Attention mechanisms have become integral to modern deep learning techniques for NLP and have delivered large improvements across a wide range of tasks.

What is an Attention Mechanism?

- An attention mechanism in a neural network lets the model selectively focus on the parts of the input data that are most pertinent to the task at hand. Rather than treating all parts of the input equally, it assigns each element a weight based on its importance.

- Traditional models such as RNNs often struggled with long-range dependencies and context understanding.

- Attention mechanisms address these challenges by dynamically prioritizing information, thereby increasing the efficiency and accuracy of language models.

The Evolution of Attention Mechanisms

- Attention mechanisms were created to address the limitations of older models like RNNs and Long Short-Term Memory (LSTM) networks. The Seq2Seq model with attention, introduced by Bahdanau et al., was a landmark that enabled machine translation with better contextual understanding. The Transformer architecture built on this innovation by replacing recurrence with attention as the core mechanism for dealing with sequential data.

- The Transformer, introduced in the paper “Attention Is All You Need,” is built on self-attention and multi-head attention, and it set the stage for BERT, GPT, and T5.


Types of Attention Mechanisms

- Global Attention: Global attention examines the entire input sequence and determines how relevant each position is. This gives the model a complete view of the context but is computationally costly, especially for long sequences.

- Local Attention: Local attention restricts each position to a smaller segment of the input sequence, balancing computational efficiency with contextual relevance. It is useful where a limited context window is sufficient.

- Self-Attention: Self-attention allows tokens in a single sequence to interact with each other by computing the attention weights between pairs of tokens. This is the basis for the Transformer architecture, which makes it possible to process sequential information efficiently.

- Multi-Head Attention: Multi-head attention applies multiple attention mechanisms (heads) in parallel, where each head can discover different relationships in the data. Combining the heads helps the model capture more complex patterns and dependencies.
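
To make the difference between global and local attention concrete, here is a minimal sketch that builds the two patterns as boolean masks, where entry (i, j) says whether position i may attend to position j. The function names `global_mask` and `local_mask` and the `window` parameter are illustrative assumptions, not part of any particular library.

```python
import numpy as np

def global_mask(seq_len: int) -> np.ndarray:
    """Global attention: every position may attend to every position."""
    return np.ones((seq_len, seq_len), dtype=bool)

def local_mask(seq_len: int, window: int) -> np.ndarray:
    """Local attention: position i may attend to j only if |i - j| <= window."""
    idx = np.arange(seq_len)
    return np.abs(idx[:, None] - idx[None, :]) <= window

seq_len, window = 6, 1  # hypothetical sizes, chosen only for illustration
g = global_mask(seq_len)
l = local_mask(seq_len, window)

print(l.astype(int))                     # banded pattern around the diagonal
print("global pairs:", int(g.sum()))     # grows with seq_len ** 2
print("local pairs: ", int(l.sum()))     # grows roughly with seq_len * window
```

The number of allowed pairs is what drives the cost: the global mask has one entry per pair of positions, while the local mask keeps only a narrow band.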

How Does Attention Work?

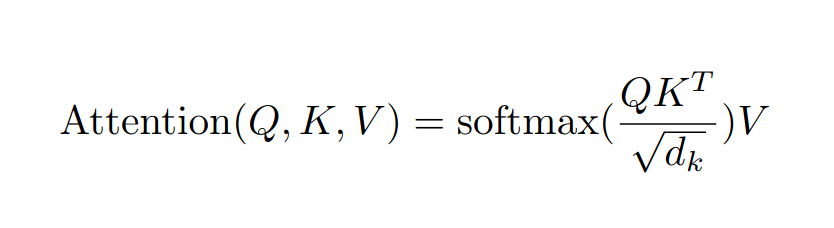

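- Attention operates through the Query, Key, and Value (QKV) framework:

- Query (Q): A vector for the current token, representing what that token is looking for.

- Key (K): A vector for each input token, against which the query is compared.

- Value (V): A vector for each input token, carrying the information that is passed on when that token is attended to.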


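- The attention weights are computed by taking the dot product of the Query with each Key, followed by a softmax operation that normalizes the scores so they sum to 1. In the Transformer, the scores are also divided by the square root of the key dimension before the softmax. The weighted sum of the Value vectors, determined by these attention weights, provides the context-aware representation.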
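
The QKV computation described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a production implementation: the inputs are random, the function names `softmax` and `attention` are chosen here for clarity, and the division by the square root of the key dimension follows the scaled dot-product form used in the Transformer.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(Q: np.ndarray, K: np.ndarray, V: np.ndarray):
    """Scaled dot-product attention for a single sequence.

    Q, K: (seq_len, d_k); V: (seq_len, d_v).
    Returns the context vectors and the attention weights.
    """
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)      # similarity of every query to every key
    weights = softmax(scores, axis=-1)   # each row is a distribution over tokens
    return weights @ V, weights          # weighted sum of the value vectors

# Random stand-ins for projected token embeddings (assumption for illustration).
rng = np.random.default_rng(0)
Q = rng.normal(size=(4, 8))
K = rng.normal(size=(4, 8))
V = rng.normal(size=(4, 8))

context, weights = attention(Q, K, V)
print(context.shape)          # one context vector per token
print(weights.sum(axis=-1))   # every row of weights sums to 1
```

In a real model, Q, K, and V come from learned linear projections of the token embeddings; those projections are omitted here to keep the sketch short.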

The Importance of Attention in LLMs

- Attention mechanisms allow LLMs to understand context, handle long-range dependencies, and generate coherent outputs. This makes the models particularly good at tasks such as:

- Text Summarization: Generating summaries by identifying important parts of the text.

- Question Answering: Identifying the relevant information in a passage to answer specific questions.

- Language Translation: Keeping context across sentences to produce accurate translations.

- Self-attention in LLMs also supports parallel computation: all tokens in a sequence can be processed simultaneously rather than one at a time, which makes training on large datasets efficient.
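
The point about parallel computation can be illustrated with a small sketch: computing self-attention one token at a time gives the same result as a single pair of matrix products over the whole sequence. As a simplifying assumption, the token matrix `X` is used directly as queries, keys, and values, with no learned projections.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

rng = np.random.default_rng(0)
n_tokens, dim = 5, 4                      # hypothetical sizes
X = rng.normal(size=(n_tokens, dim))      # stand-in token representations
scale = np.sqrt(dim)

# Sequential view: handle each token's query separately.
loop_out = np.stack(
    [softmax(X[i] @ X.T / scale) @ X for i in range(n_tokens)]
)

# Parallel view: all queries at once, as matrix products.
batch_out = softmax(X @ X.T / scale, axis=-1) @ X

print(np.allclose(loop_out, batch_out))
```

Because no step depends on the output of the previous token, the batched form maps well onto hardware that is optimized for large matrix multiplications. A recurrent model, by contrast, has to finish one step before starting the next.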

Applications of Attention Mechanisms in NLP

- Attention mechanisms have transformed NLP applications, including:

- Machine Translation: Systems like Google Translate use attention to perform context-aware translations.

- Text Generation: GPT models use attention to generate coherent and contextually relevant text.

- Sentiment Analysis: Attention improves sentiment prediction by concentrating on the sentiment-bearing words.

- Popular LLMs such as GPT, BERT, and T5 rely heavily on attention to achieve state-of-the-art performance.

Challenges and Limitations of Attention Mechanisms

Despite their advantages, attention mechanisms have some limitations:

- Computational Costs: The cost of computing attention grows quadratically with sequence length, which limits scalability for very long sequences.

- Overfitting: Models can overfit smaller datasets when attention weights become overly specialized.

- Efficiency: Handling large datasets efficiently remains an open challenge, which has motivated innovations such as sparse attention and memory-efficient transformers.
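
A quick back-of-the-envelope calculation shows why quadratic complexity becomes a problem. The sketch below estimates the memory needed to store one full attention score matrix, assuming 4 bytes per value (32-bit floats) and a single head. The helper name `score_matrix_bytes` is hypothetical, and real systems may never materialize the full matrix.

```python
def score_matrix_bytes(seq_len: int, bytes_per_value: int = 4) -> int:
    """Bytes needed for one seq_len x seq_len attention score matrix."""
    return seq_len * seq_len * bytes_per_value

for n in (1_000, 10_000, 100_000):
    mib = score_matrix_bytes(n) / 2**20
    print(f"{n:>7} tokens -> {mib:,.1f} MiB per head")
```

Each tenfold increase in sequence length multiplies the requirement by one hundred, which is the scaling behavior that sparse and memory-efficient variants try to avoid.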

Future of Attention Mechanisms

- The future of attention mechanisms lies in overcoming these challenges and exploring new frontiers.

- Efficient Attention Mechanisms: Techniques such as linear attention and sparse attention aim to lower the computational complexity.

- Multimodal Integration: Combining attention with vision and audio inputs in multimodal learning setups.

- Reinforcement Learning Integration: Exploiting attention in decision-making tasks to enhance policy learning.

- As AI research advances, attention mechanisms will likely evolve to become more efficient and versatile.
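
As a rough sketch of the linear attention idea mentioned above: one family of approaches replaces the softmax with a positive feature map, so the matrix products can be reordered and the full token-by-token score matrix is never built. The feature map used here (`elu(x) + 1`) and the function names are assumptions for illustration. This variant approximates softmax attention rather than reproducing it exactly.

```python
import numpy as np

def feature_map(x: np.ndarray) -> np.ndarray:
    """elu(x) + 1: a positive feature map (an assumed choice for this sketch)."""
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))

def linear_attention(Q, K, V):
    """Cost grows linearly with sequence length n: no (n, n) matrix is formed."""
    Qf, Kf = feature_map(Q), feature_map(K)
    kv = Kf.T @ V                 # (d, d_v) summary of all keys and values
    z = Kf.sum(axis=0)            # (d,) normalizer
    return (Qf @ kv) / (Qf @ z)[:, None]

def quadratic_reference(Q, K, V):
    """Same kernel attention, computed the expensive way via an (n, n) matrix."""
    Qf, Kf = feature_map(Q), feature_map(K)
    scores = Qf @ Kf.T
    weights = scores / scores.sum(axis=-1, keepdims=True)
    return weights @ V

rng = np.random.default_rng(0)
Q = rng.normal(size=(6, 4))
K = rng.normal(size=(6, 4))
V = rng.normal(size=(6, 3))

print(np.allclose(linear_attention(Q, K, V), quadratic_reference(Q, K, V)))
```

The two functions agree because matrix multiplication is associative. The saving comes purely from multiplying the keys and values together first, before involving the queries.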

Conclusion

- The emergence of attention mechanisms has changed the face of NLP by making it possible for models to concentrate on relevant information, handle long-range dependencies, and process data efficiently. Their integration into LLMs has led to considerable advances in translation, summarization, and text generation tasks.

- Attention mechanisms are going to remain at the core of AI and deep learning advancements in the near future.

Also Read:

To fully understand the power of the Attention Mechanism, it’s essential to explore Mastering Transformers: Unlock the Magic of LLM Coding 101, where attention forms the backbone of modern transformer architectures. Complement this with Large Language Models 101: NLP Fundamentals for Smarter AI Solutions to build a solid conceptual foundation, and LLM 101: Mastering Word Embeddings for Revolutionary NLP to see how embeddings interact with attention to generate contextual meaning. For practical implementation, Llama Fine-Tuning: Your Step-by-Step Guide demonstrates how attention-based models can be customized for domain-specific tasks. Ensuring reliability is equally important, which is addressed in Mastering Mitigation of LLM Hallucination: Critical Risks and Proven Prevention Strategies. Finally, Agentic AI vs Generative AI: Unleashing the Future of Intelligent Systems places the Attention Mechanism within the broader evolution of intelligent AI systems.
