Computer vision is a field of artificial intelligence (AI) that enables machines to interpret and make decisions based on visual data. Having grown out of simple image processing methods, it is now a complex discipline that can imitate the human ability to see and understand images and videos. This shift is mainly due to growing computational power, better algorithms, and larger datasets, which together have produced models capable of many tasks once thought to be exclusive to human intelligence. Computer vision models reached this point through a long series of incremental advances.

This blog is a tour through the history of computer vision models, from the earliest pixel-based techniques to today’s sophisticated perception models. We will cover the milestones, breakthroughs, and challenges that have shaped the field and discuss the state-of-the-art technologies that are pushing the boundaries of computer vision.

The Early Days: Pixel-Based Image Processing

Foundations of Image Processing

The field of computer vision began with pixel-based image processing. The initial goal was to understand and analyze images at the lowest level: the pixel. An image is essentially a matrix of pixel values, and the first computer vision techniques tried to derive useful information from these matrices.

At that time, the main concern was developing techniques that would help machines make sense of images. These methods relied on mathematical manipulation of the pixel values. For instance, grayscale images were converted into binary images by thresholding, so that each pixel became either black or white depending on how its value compared with a predefined threshold. This simplification made it easier for early algorithms to recognize patterns and features in images.
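The thresholding step described above can be sketched in a few lines. This is a minimal illustration, not a production routine: the function name `threshold`, the default cutoff of 128, and the choice to map pixels at or above the cutoff to white are all assumptions made for the example, and plain Python lists stand in for the array types an image library would provide.

```python
def threshold(image, cutoff=128):
    """Convert a grayscale image (rows of 0-255 ints) to a binary image.

    Pixels at or above `cutoff` become 255 (white); the rest become 0 (black).
    The cutoff and the >= convention are illustrative assumptions.
    """
    return [[255 if pixel >= cutoff else 0 for pixel in row] for row in image]


gray = [
    [12, 200, 130],
    [90, 127, 128],
]
print(threshold(gray))  # [[0, 255, 255], [0, 0, 255]]
```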

Key Algorithms and Techniques

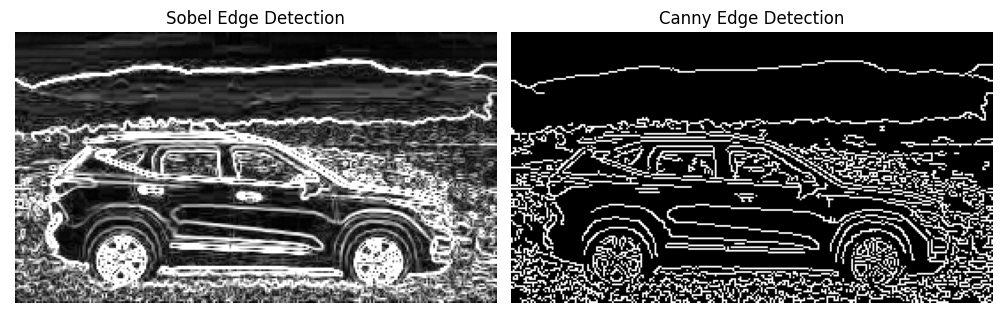

Several algorithms became the building blocks of early computer vision. Among the best known are the Sobel and Canny edge detectors.

- Sobel Edge Detector: The Sobel operator, first presented in 1968, is one of the earliest and most widely used edge detection techniques. It applies a pair of convolution masks (kernels) to an image to estimate the horizontal and vertical gradients of the intensity. The resulting gradient magnitude measures the strength of an edge, or how much it stands out, while the gradient direction gives its orientation. Its simplicity and effectiveness have made the Sobel operator a seminal technique in image processing.

- Canny Edge Detector: The Canny edge detector, introduced by John F. Canny in 1986, is a significant improvement on earlier approaches. It is a multi-stage algorithm that first smooths the image with a Gaussian filter, then computes the edge gradients, then applies non-maximum suppression to thin the edges, and finally applies double thresholding and edge tracking by hysteresis to keep the most relevant edges. The Canny detector remains a preferred method of edge detection because it is designed to find true edges while minimizing false positives.
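To make the gradient idea concrete, here is a small sketch of the Sobel operator evaluated at a single pixel. The kernels are the standard 3×3 Sobel masks; the helper name `sobel_at` and the toy image are assumptions for illustration, and the sketch handles only interior pixels (no border padding).

```python
import math

# Standard 3x3 Sobel kernels for the horizontal (x) and vertical (y) gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_at(image, row, col):
    """Return (magnitude, direction in radians) of the gradient at an interior pixel.

    The kernels are applied as a sliding-window weighted sum over the 3x3 neighbourhood.
    """
    gx = gy = 0
    for i in range(3):
        for j in range(3):
            pixel = image[row + i - 1][col + j - 1]
            gx += SOBEL_X[i][j] * pixel
            gy += SOBEL_Y[i][j] * pixel
    return math.hypot(gx, gy), math.atan2(gy, gx)


# A vertical edge: dark on the left, bright on the right.
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]
magnitude, direction = sobel_at(image, 1, 1)
print(magnitude, direction)  # 1020.0 0.0 (strong edge, gradient pointing along +x)
```

A full edge detector would evaluate this at every pixel and, in the case of Canny, follow it with the smoothing, non-maximum suppression, and hysteresis stages described above.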

These pixel-based techniques laid the groundwork for the later shift toward hierarchical models. By working with images in their most basic form, pixel by pixel, researchers developed methods for understanding and recognizing visual data that made later models both stronger and more flexible.

Intrigued by the possibilities of AI? Let’s chat! We’d love to answer your questions and show you how AI can transform your industry. Contact Us

The Rise of Machine Learning in Computer Vision

The Advent of Feature-Based Models

Rather than working on individual pixels, machine learning approaches to computer vision operated on features extracted from images. This allowed developers to build better models from descriptors of local structure such as texture and gradients. Two of the most influential methods developed during this time were SIFT (Scale-Invariant Feature Transform) and HOG (Histogram of Oriented Gradients).

- SIFT (Scale-Invariant Feature Transform): First presented by David Lowe in 1999, SIFT is a technique for detecting distinctive keypoints in an image and computing a descriptor for each one. The keypoints are described in a way that is invariant to scale, translation, and rotation, so the same object can be recognized across images taken at different sizes and orientations. The ability of SIFT to match features across different images was a major advance in the development of computer vision models.

- HOG (Histogram of Oriented Gradients): Proposed by Navneet Dalal and Bill Triggs in 2005, HOG has been widely adopted in computer vision. It divides an image into cells of equal size and builds, for each cell, a histogram of the gradient directions within it. These histograms are then normalized and concatenated to produce a feature vector for the whole image. HOG works well for object detection because it tolerates noise and modest changes in illumination, and it underpins many practical systems, including pedestrian detection, robot vision, and other image recognition technologies.
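The per-cell histogram at the heart of HOG can be sketched as follows. This is a deliberately simplified illustration: it uses central differences for the gradient, 9 unsigned orientation bins over 0 to 180 degrees, and magnitude-weighted votes, and it omits the bin interpolation and block normalization that a full HOG implementation would include. The names `cell_histogram` and `hog_features` are hypothetical.

```python
import math


def cell_histogram(cell, bins=9):
    """Histogram of unsigned gradient orientations (0-180 degrees) for one cell.

    Each interior pixel votes for the bin containing its gradient orientation,
    weighted by its gradient magnitude. Border pixels are skipped for simplicity.
    """
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for r in range(1, len(cell) - 1):
        for c in range(1, len(cell[0]) - 1):
            gx = cell[r][c + 1] - cell[r][c - 1]
            gy = cell[r + 1][c] - cell[r - 1][c]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += magnitude
    return hist


def hog_features(cells, bins=9):
    """Concatenate the per-cell histograms into a single feature vector."""
    features = []
    for cell in cells:
        features.extend(cell_histogram(cell, bins))
    return features


# A cell whose brightness increases left to right: all votes land in the 0-degree bin.
ramp = [[0, 10, 20, 30] for _ in range(4)]
print(cell_histogram(ramp))
```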

Support Vector Machines and Their Impact

As feature-based models grew in popularity, pairing them with Support Vector Machines (SVMs) soon became the standard choice for image classification. SVMs are supervised learning algorithms that work by finding the optimal hyperplane separating data points of different classes. In computer vision, SVMs were used to classify images based on the features produced by algorithms such as SIFT and HOG.

SVMs had several advantages over earlier methods. They handle high-dimensional data well, which makes them a good fit for image classification. They also classify robustly and accurately even in the presence of noise or overlapping classes. The combination of feature extraction techniques such as SIFT and HOG with SVMs was a major advance in the development of computer vision models.
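As a rough illustration of how a linear SVM finds a separating hyperplane, the sketch below trains one with sub-gradient descent on the regularized hinge loss. It is a toy, not how SVM libraries are implemented: the function names, the learning rate, the regularization strength, and the two-dimensional "feature vectors" are all assumptions, standing in for the much longer SIFT or HOG descriptors used in practice.

```python
def train_linear_svm(samples, labels, epochs=100, lr=0.1, reg=0.01):
    """Fit weights w and bias b by sub-gradient descent on the regularized hinge loss.

    `labels` must contain only +1 or -1.
    """
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            # The regularization term shrinks w on every step.
            w = [wi - lr * reg * wi for wi in w]
            # The hinge term only acts when the sample is misclassified
            # or lies inside the margin.
            if margin < 1:
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b


def predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x falls."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


# Hypothetical 2-D feature vectors for two classes.
samples = [[2.0, 2.0], [3.0, 3.0], [-2.0, -2.0], [-3.0, -3.0]]
labels = [1, 1, -1, -1]
w, b = train_linear_svm(samples, labels)
print([predict(w, b, x) for x in samples])  # [1, 1, -1, -1]
```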

The Deep Learning Revolution

Introduction to Convolutional Neural Networks (CNNs)

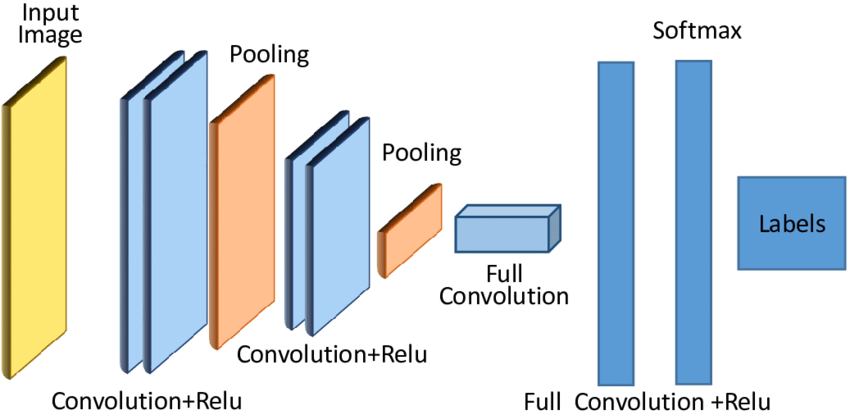

The real revolution in computer vision came with the advent of Convolutional Neural Networks (CNNs). CNNs are a class of deep learning models designed to process data with a grid-like structure, such as images. In contrast to conventional machine learning algorithms that require hand-designed features, CNNs automatically learn hierarchical feature representations directly from raw image data.

Probably the most important innovation of CNNs is the convolutional layer, which applies a set of filters to an image to detect local patterns such as edges, textures, and shapes. Subsequent layers combine these patterns into higher-level features, allowing the network to recognize complex objects and scenes.

CNNs also use pooling layers, which down-sample the spatial dimensions of the feature maps and make the model more computationally efficient while retaining the most important information. Fully connected layers at the end of a CNN then perform the classification based on the learned features.
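The two core operations described above, convolution and pooling, can be sketched without any framework. In a real CNN the filter values are learned during training; here a hand-picked vertical-edge filter is used purely as an assumption for illustration, and the function names `conv2d` and `max_pool` are hypothetical.

```python
def conv2d(image, kernel):
    """Valid (no padding) sliding-window filter, as applied by a convolutional layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j] for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# A 5x5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
edge_filter = [[-1, 1], [-1, 1]]  # hand-picked; a CNN would learn these values

feature_map = conv2d(image, edge_filter)
print(feature_map)            # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(max_pool(feature_map))  # [[2, 0], [2, 0]]
```

The pooled output is a quarter of the size of the feature map but still records where the edge was found, which is the trade-off pooling layers are designed to make.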

Milestones: AlexNet, VGG, and ResNet

The rise of CNNs in computer vision was marked by a series of milestones that advanced the state of the art in both accuracy and efficiency.

- AlexNet (2012): Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton developed AlexNet, the first CNN to win the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). AlexNet contains eight learned layers: five convolutional layers and three fully connected layers. It significantly outperformed previous models, reducing the top-5 error by more than 10 percentage points. This result demonstrated the power of deep learning and launched wide interest in CNNs for computer vision.

- VGG (2014): Karen Simonyan and Andrew Zisserman pushed the field further with a simpler but deeper architecture. Its best-known variants, VGG-16 and VGG-19, are named for their number of weight layers: 16 and 19. VGG stacks small 3×3 convolutional filters in sequence to build deeper networks with greater expressiveness, using fewer parameters than a single larger filter with the same receptive field. Its simplicity and effectiveness across a range of computer vision tasks made VGG very popular.

- ResNet (2015): Proposed by Kaiming He et al., ResNet addressed one of the biggest challenges of the period: training very deep networks. Its residual connections skip over one or more layers and add a block’s input directly to its output. This made it possible to train networks with hundreds or even thousands of layers while largely avoiding the vanishing gradient problem. After winning ILSVRC 2015 with a top-5 error of 3.57%, ResNet cemented itself as one of the pillars of modern deep learning.
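The residual connection is simple enough to show directly. The sketch below uses small fully connected layers instead of the convolutional layers and batch normalization found in an actual ResNet, so it should be read as an illustration of the shortcut idea only; `residual_block`, `linear`, and `relu` are hypothetical helper names.

```python
def relu(vector):
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in vector]


def linear(weights, vector):
    """Multiply a square weight matrix (a list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def residual_block(x, w1, w2):
    """Compute relu(F(x) + x), where F is two linear layers with a ReLU in between."""
    fx = linear(w2, relu(linear(w1, x)))
    # The shortcut: the block's input is added to the output of its layers.
    return relu([f + xi for f, xi in zip(fx, x)])


zeros = [[0.0, 0.0], [0.0, 0.0]]
print(residual_block([1.0, 2.0], zeros, zeros))  # [1.0, 2.0]
```

With all-zero weights the block simply passes its (non-negative) input through. This illustrates why very deep stacks of such blocks remain trainable: each block only has to learn a correction on top of the identity, and the shortcut gives gradients a direct path back to earlier layers.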

Advanced Architectures and Techniques

As deep learning matured, increasingly powerful architectures and techniques were developed, making ever more complex computer vision tasks possible.

YOLO and Real-Time Object Detection

YOLO (You Only Look Once), initially developed by Joseph Redmon and colleagues, brought real-time speed to object detection. Unlike two-stage detectors, which first generate region proposals (for example with a region proposal network, or RPN) and then run a classifier and bounding-box regressor on each proposal, YOLO treats the whole detection task as a single regression problem, predicting bounding boxes and class probabilities directly from the full image.

Because of this design, YOLO processes an image in a single pass, which gives it real-time speed while maintaining high accuracy. Its practicality and output quality have made YOLO a popular tool in a wide variety of scenarios, such as autonomous driving, industrial robotics, and video surveillance.
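To give a feel for what "a single regression" produces, the sketch below decodes a grid of predictions into pixel-space boxes, loosely following the original YOLO formulation in which each grid cell predicts a box centre relative to the cell and a box size relative to the whole image. The tuple layout, the function names, the confidence cutoff, and the 7×7 grid over a 448×448 image in the example are assumptions for illustration; later YOLO versions encode boxes differently.

```python
def decode_cell(pred, row, col, grid_size, image_w, image_h):
    """Convert one cell's (x, y, w, h, confidence) prediction to pixel coordinates.

    x, y are the box centre offsets within the cell (0-1); w, h are fractions
    of the full image. Returns (left, top, right, bottom, confidence).
    """
    x, y, w, h, confidence = pred
    centre_x = (col + x) / grid_size * image_w
    centre_y = (row + y) / grid_size * image_h
    box_w, box_h = w * image_w, h * image_h
    return (centre_x - box_w / 2, centre_y - box_h / 2,
            centre_x + box_w / 2, centre_y + box_h / 2, confidence)


def decode_grid(grid, image_w, image_h, min_confidence=0.5):
    """Decode every cell of a square prediction grid and keep the confident boxes."""
    size = len(grid)
    boxes = []
    for row in range(size):
        for col in range(size):
            box = decode_cell(grid[row][col], row, col, size, image_w, image_h)
            if box[4] >= min_confidence:
                boxes.append(box)
    return boxes


# One prediction from the centre cell of a 7x7 grid over a 448x448 image.
print(decode_cell((0.5, 0.5, 0.25, 0.5, 0.9), 3, 3, 7, 448, 448))
# (168.0, 112.0, 280.0, 336.0, 0.9)
```

Because every cell's output is produced by the same forward pass, decoding is the only per-box work left, which is where the speed advantage over proposal-based detectors comes from.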

Generative Adversarial Networks (GANs) and Image Synthesis

Generative Adversarial Networks (GANs), introduced by Ian Goodfellow and colleagues in 2014, constitute a major step forward in generative modeling. A GAN is a contest between two networks, a generator and a discriminator, trained against each other in a game-theoretic setup. The generator’s role is to produce images that look as realistic as possible, while the discriminator’s role is to distinguish real images from synthetic ones. As training progresses, the generator produces increasingly lifelike images, which has led to impressive results in areas such as image synthesis, style transfer, and super-resolution.
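The opposing goals of the two networks show up clearly in their loss functions. The sketch below computes both losses from the discriminator's output probabilities alone; the networks themselves are left out. It uses binary cross-entropy for the discriminator and the commonly used non-saturating form for the generator, and the function names and sample probabilities are assumptions for illustration.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real images should score near 1, generated ones near 0.

    d_real and d_fake are lists of discriminator outputs in the open interval (0, 1).
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator scores fakes near 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Early in training the discriminator easily spots fakes (scores near 0) ...
print(generator_loss([0.1, 0.2]))
# ... and the generator's loss falls as its images start to fool the discriminator.
print(generator_loss([0.8, 0.9]))
```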

Transformers and Vision Transformers (ViTs)

Attention mechanisms, originally developed for language modeling, have become a core building block in computer vision as well. Alexey Dosovitskiy and colleagues introduced Vision Transformers (ViTs), which apply the Transformer architecture to images. The input image is split into small patches, which the model processes in the same way as tokens in a sentence.

By relating every patch to every other patch through self-attention, the model can capture connections and dependencies between distant parts of the image. Vision Transformers have achieved top performance on a range of vision tasks, challenging the position of CNNs as the dominant architecture for image processing.
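The two ideas above, cutting an image into patch tokens and letting every token attend to every other, can be sketched as follows. This is a stripped-down illustration: a real Vision Transformer also applies a learned linear embedding to each patch, adds position embeddings, and uses learned query, key, and value projections across multiple heads, all of which are omitted here. The function names are hypothetical.

```python
import math


def split_into_patches(image, patch_size):
    """Split a 2D image into flattened, non-overlapping square patches (row-major order).

    Assumes both image dimensions are multiples of patch_size.
    """
    patches = []
    for top in range(0, len(image), patch_size):
        for left in range(0, len(image[0]), patch_size):
            patches.append([
                image[top + i][left + j]
                for i in range(patch_size)
                for j in range(patch_size)
            ])
    return patches


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with queries = keys = values = tokens."""
    scale = math.sqrt(len(tokens[0]))
    output = []
    for query in tokens:
        weights = softmax([sum(q * k for q, k in zip(query, key)) / scale for key in tokens])
        # Each output token is a weighted average of all tokens.
        output.append([
            sum(w * value[d] for w, value in zip(weights, tokens))
            for d in range(len(query))
        ])
    return output


image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = split_into_patches(image, 2)
print(patches)  # [[0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]]
```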

Integrating Computer Vision Models into Real-world Applications

Autonomous Vehicles

Autonomous, or self-driving, vehicles are one of the most interesting and complex applications of computer vision. Self-driving cars use computer vision models for tasks such as object detection, lane detection, and obstacle avoidance. The vision system receives images from cameras installed on the vehicle and detects other vehicles, pedestrians, road signs, and road conditions in real time. These capabilities are essential for the reliable operation of autonomous cars. Tesla, Waymo, and Uber are among the companies that have invested in this technology.

Healthcare and Medical Imaging

Computer vision models can be of great benefit in medical imaging, leading to better diagnosis and treatment of patients. Imaging modalities such as MRI, CT, and X-ray produce a large volume of visual data, which computer vision models can analyze to detect and diagnose diseases and even to predict how a disease is likely to progress.

For instance, computer vision models can detect tumors in medical images and help radiologists reach faster and more accurate diagnoses. These models are also being applied to simple, repetitive tasks such as screening and monitoring, freeing doctors and other healthcare providers to attend to more complicated cases.

Security and Surveillance

Security and surveillance systems have improved considerably through the integration of computer vision. Face recognition, a biometric technique that identifies an individual from an image or video of their face, is now used in security systems across the globe.

Beyond face recognition, computer vision is used for anomaly detection and behavior analysis, and can help anticipate security risks. However, applying computer vision in surveillance raises key ethical issues around privacy and consent. It is critical to balance security against liberty so that gains in security do not infringe on individual freedoms.

Ethical Considerations and Bias in Computer Vision

Understanding Bias in Models

Bias in computer vision models leads to unfair or inaccurate results. It often arises when models are trained on datasets that do not reflect the diversity of the real world, so they perform poorly on data from specific groups. For instance, facial recognition software may be less accurate at identifying people with darker skin tones. Bias can enter a model through its data, its algorithms, and even the way the problem is formulated, and it has to be addressed if society is to trust AI systems.

Strategies to Mitigate Bias

Bias in computer vision models can be reduced in several ways:

- One is to train the model on data that is representative of all its users. Data augmentation and synthetic data generation can help fill gaps in the data to some extent. Algorithms can also be designed to reduce bias during the training process.

- Another is to audit and check deployed models regularly, which helps detect and prevent bias in the long run.

Through these approaches, developers are better placed to design more ethical and equitable computer vision models.
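One simple form such an audit can take is comparing a model's accuracy across groups. The sketch below is a minimal example of that idea, not a complete fairness evaluation: the function name `accuracy_by_group`, the group labels, and the sample predictions are all hypothetical, and accuracy is only one of several metrics an audit might track.

```python
from collections import defaultdict


def accuracy_by_group(predictions, labels, groups):
    """Return a dict mapping each group to the model's accuracy on that group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for pred, label, group in zip(predictions, labels, groups):
        total[group] += 1
        correct[group] += int(pred == label)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical audit data: predictions, ground truth, and a group tag per sample.
predictions = [1, 1, 0, 0, 1, 0]
labels = [1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "b", "b", "b"]

report = accuracy_by_group(predictions, labels, groups)
gap = max(report.values()) - min(report.values())
print(report, gap)  # group "a" is classified perfectly, group "b" only a third of the time
```

A large gap between the best and worst group is a signal that the training data or the model needs another look.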

The Future of Computer Vision

Emerging Trends and Innovations

Computer vision has a promising future, building on existing trends as well as radically new ideas. Edge computing, which runs models on or near the device that captures the data, is expected to change how computer vision is delivered in real time. Federated learning, in which models are trained across decentralized devices without sending raw data to the cloud, offers new ways to protect privacy and improve efficiency. Additionally, the combination of AI with technologies such as AR and VR is expanding opportunities in areas such as entertainment, education, and remote collaboration.
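The aggregation step at the centre of federated learning can be sketched in a few lines. The example follows the widely used federated averaging idea, in which a server combines model weights from several devices in proportion to how much data each device trained on; the function name, the flat weight lists, and the sample numbers are assumptions for illustration, and the local training and communication rounds are not shown.

```python
def federated_average(client_weights, client_sizes):
    """Average client model weights, weighting each client by its number of samples.

    client_weights: one flat list of weights per client, all the same length.
    client_sizes: number of local training samples per client.
    """
    total = sum(client_sizes)
    return [
        sum(weights[i] * size for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(len(client_weights[0]))
    ]


# Two hypothetical devices; the second trained on three times as much data.
print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))  # [2.5, 3.5]
```

Only the weights leave each device in this scheme, which is why the raw images never need to be uploaded.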

Potential Impact on Various Industries

Computer vision is already set to disrupt industries broadly, and as the technology advances the changes will become even more notable. In retail, stores can offer features such as visual search and automated checkout systems.

In manufacturing, it can improve quality control and maintenance scheduling by analyzing images of products and machines. Computer vision is also entering the entertainment industry, where deepfakes and generated content can create new kinds of experiences. The opportunities for new applications are considerable, and computer vision should be considered one of the main focuses for the future development of many fields.

Conclusion

In conclusion, the history of computer vision, from the era of simple pixel processing to modern deep learning, is truly inspiring. The field has not only changed the way machines see and comprehend visual data but has also paved the way for new technologies in areas ranging from automobiles to healthcare and security systems.

Looking ahead, the future of computer vision technology is promising: it will open up new possibilities and change industries for the better. New horizons mean new opportunities. However, it is necessary to stay critical when applying these technologies and to keep their ethical implications in view.

Also Read:

Modern Computer Vision Models have evolved rapidly, beginning with practical foundations explained in Master OpenCV 101: Unlock the Power of Computer Vision, which introduces core image processing techniques. As capabilities expand, Object Detection Beyond Classification: Exploring Advanced Deep Learning Techniques and Mastering YOLO: Proven Steps to Train, Validate, Deploy, and Optimize demonstrate how real-time detection systems achieve higher accuracy and performance. Moving beyond detection, Unlocking the Future of Semantic Segmentation: Breakthrough Trends and Techniques highlights pixel-level scene understanding, while Vision Transformers: Unlocking New Potential in OCR – A Game-Changer in Text Recognition showcases the shift toward transformer-based architectures. Looking ahead, Generative Computer Vision: Powerful Foundation Models That Are Revolutionizing the Future captures how next-generation Computer Vision Models are redefining visual intelligence and automation.
