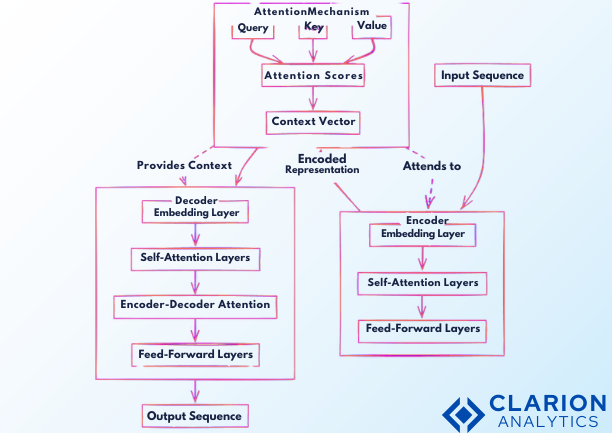

Llama is a family of large language models (LLMs) from Meta that can generate human-like text. The name originated as LLaMA, short for “Large Language Model Meta AI.” Built on the transformer architecture, Llama can handle tasks such as text completion, translation, summarization, and more. Because the model is already pre-trained on a large text corpus, it performs reasonably well across a wide range of tasks out of the box, unlike conventional approaches that require task-specific data and training from scratch for each task. Fine-tuning builds on that foundation. In this blog, you will learn more about Llama fine-tuning.

-

Llama Fine-tuning

-

Llama fine-tuning means further training the pre-trained model on a smaller dataset tailored to a specific task. This improves the model’s accuracy on that task and helps it handle proper nouns, jargon, and context that may be specific to a particular application. Fine-tuning starts from the existing Llama weights rather than from scratch, so the model’s general linguistic understanding is adapted to the requirements of the individual task.

Customizing Llama through fine-tuning brings several advantages:

- Increased Performance: Fine-tuning on task-specific data lets Llama deliver more accurate and relevant output.

- Cost-Efficiency: Fine-tuning consumes less time and fewer computational resources than training a new model, which cuts the cost of the solution for businesses.

- Adaptability: Llama fine-tuning can be applied to various applications, such as customer service chatbots and content generation, without training a new model from scratch.

- Domain Expertise: Fine-tuning on sector-specific information enables the model to handle industry-specific tasks more effectively.

Preparing Your Data

-

Data Collection

Data collection is the backbone of any machine learning project, including Llama fine-tuning. The quality and relevance of the data directly determine the model’s performance. It is crucial to have a dataset that is diverse and as complete as possible, and that reflects the specific use case for which Llama is being fine-tuned.

High-quality, relevant data helps the fine-tuned model capture the details and context of the specific task. For example, if Llama is being fine-tuned to generate technical documentation, the dataset should contain a wide variety of technical documents. Low-quality or irrelevant data can result in a model that generates incorrect or incomprehensible outputs. The dataset should therefore be assembled with care: it must be representative of the task and free of noise such as irrelevant information or errors.

-

Data Cleaning and Preprocessing

Before any Llama fine-tuning, the collected data needs to be cleaned and preprocessed. This ensures that all the data is in a consistent format and free of errors that could hurt the model’s performance.

The following are a few examples of data cleaning and preprocessing:

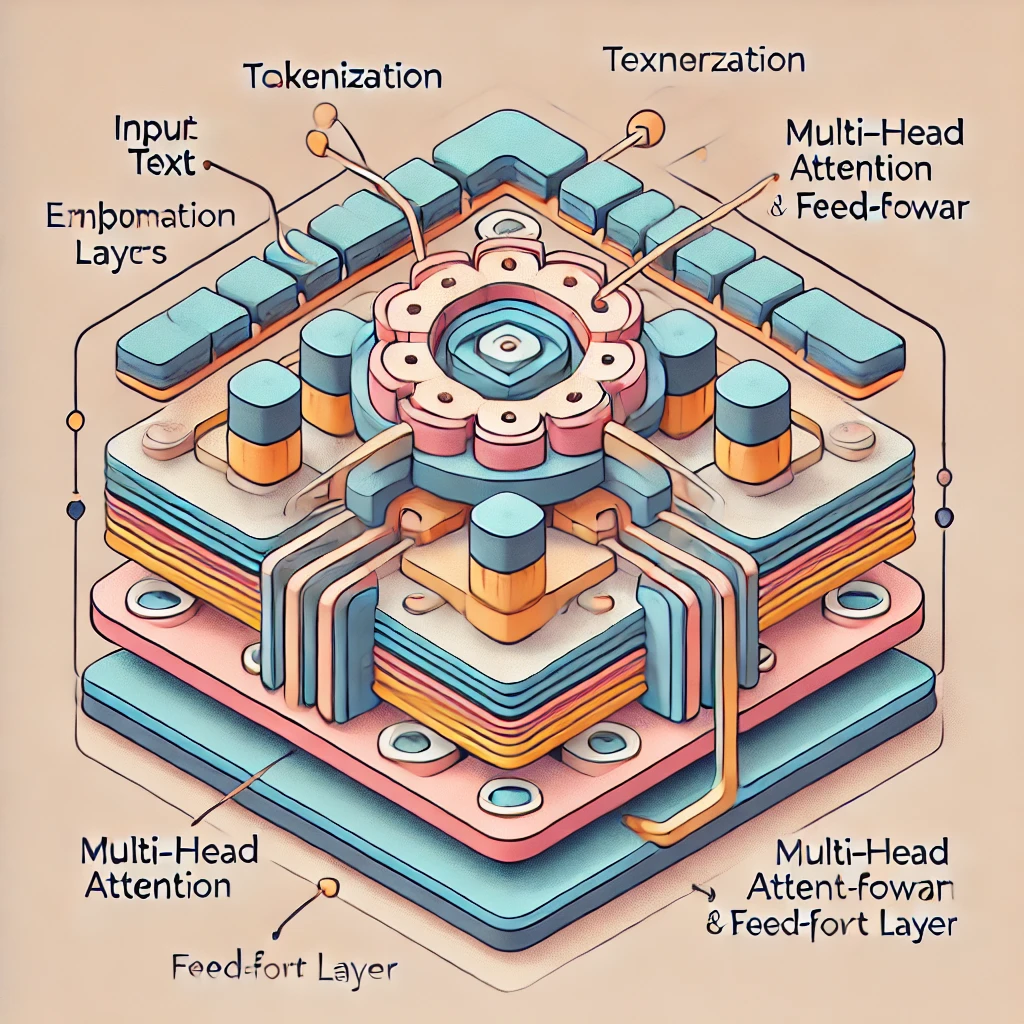

- Tokenization: The text is broken down into smaller units, such as words or sub-words, that the model can process. Correct tokenization ensures that the model interprets the input text properly.

- Normalization: The text is converted into a standard format, for example by removing stray punctuation or writing numbers in a uniform way. This helps the model distinguish meaningful content from superficial variations in how the text is written.

- Filtering: Irrelevant or unnecessary data is removed from the dataset. This can include removing stopwords, repeated phrases, or text that contributes nothing to the task, so that the model is trained only on informative, accurate examples.
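The normalization and filtering steps above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a complete pipeline: the function names, the `min_words` threshold, and the sample records are assumptions made for the example. Sub-word tokenization is normally handled by the model’s own tokenizer, so the sketch only splits on whitespace to estimate length.

```python
import re
import unicodedata


def normalize(text: str) -> str:
    """Apply Unicode normalization and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def clean_corpus(records, min_words=3):
    """Normalize each record, then drop short fragments and exact duplicates."""
    seen = set()
    cleaned = []
    for raw in records:
        text = normalize(raw)
        # Filtering: skip fragments too short to be informative (assumed threshold).
        if len(text.split()) < min_words:
            continue
        # Filtering: skip records already seen, ignoring letter case.
        key = text.lower()
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


# Hypothetical raw records, e.g. exported from a support system.
samples = [
    "  How do I reset   my password? ",
    "How do I reset my password?",
    "ok",
    "My order arrived damaged.\nWhat should I do?",
]
print(clean_corpus(samples))
```

In this sketch the duplicate question and the one-word fragment are dropped, and the remaining records come out with consistent spacing.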

-

Data Augmentation

Data augmentation increases the variety or size of the dataset so that the model generalizes better. It is particularly useful when the dataset is small or lacks variety.

Methods to increase the diversity and size of the data include:

- Synonym Replacement: Replacing some words with synonyms allows for the creation of variants of the same sentence without changing its meaning.

- Back-Translation: Translating text into another language and then back into the original language produces paraphrased versions of the data.

- Random Word Insertion or Removal: Inserting or removing random words at arbitrary positions gives the model more challenging training examples with varied sentence structure.

- Noise Addition: Adding random noise to the text, such as typos or slight grammatical errors, makes the model more robust to real-world variations in input.
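As a rough sketch, two of the methods above (random word removal and noise addition) can be implemented with the standard library alone. The function names and the probabilities `p_delete` and `p_typo` are illustrative assumptions; synonym replacement and back-translation need a thesaurus or a translation model and are not shown.

```python
import random


def random_deletion(words, p, rng):
    """Drop each word with probability p, keeping at least one word."""
    kept = [w for w in words if rng.random() >= p]
    return kept if kept else [rng.choice(words)]


def add_typo(word, rng):
    """Swap two adjacent characters to imitate a typing error."""
    if len(word) < 2:
        return word
    i = rng.randrange(len(word) - 1)
    chars = list(word)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


def augment(sentence, rng, p_delete=0.1, p_typo=0.1):
    """Return a noisy variant of the sentence (assumed probabilities)."""
    words = random_deletion(sentence.split(), p_delete, rng)
    words = [add_typo(w, rng) if rng.random() < p_typo else w for w in words]
    return " ".join(words)


rng = random.Random(0)  # fixed seed so the variants are reproducible
for _ in range(3):
    print(augment("please cancel my subscription before the next billing date", rng))
```

Keeping the probabilities low matters: heavy deletion or noise can change the meaning of an example, which would work against the goal of augmentation.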

Fine-Tuning Techniques

-

Transfer Learning

Transfer learning is a method in which a model pre-trained on one task is adapted to another task that requires much less data. This approach takes advantage of the knowledge the model acquired during pre-training.

Transfer learning works by taking a model that is pre-trained on a large dataset, such as Llama, and then fine-tuning it on a much smaller task-specific dataset. The pre-trained model already captures most of the general patterns of the language, so fine-tuning needs only a fraction of the data and computation time. The benefits of transfer learning include:

- Reduced Training Time: Since the model has already learned the basic structures of the language, fine-tuning is quicker than training from scratch.

- Improved Performance: Starting from a pre-trained model normally gives better results on the new task than training from scratch on the same small dataset.

- Efficiency: Transfer learning allows advanced models, including Llama, to be adapted to very niche tasks where large amounts of data are not available.

-


Hyperparameter Tuning

Hyperparameter tuning is the process of optimizing the hyperparameters that control the Llama fine-tuning learning process. Tuning them properly can have a considerable impact on model performance. Key hyperparameters and tuning techniques include:

- Learning Rate: A hyperparameter that specifies how quickly the model’s parameters are updated. If it is too high, training can become unstable or converge quickly to a poor solution; if it is too low, training may take too long or stall before reaching a good solution.

- Batch Size: The number of training examples processed in one iteration. Larger batches tend to make updates more stable but require more memory, while smaller batches introduce noise into the optimization, which can sometimes help generalization.

- Early Stopping: A technique that stops training when model performance on the validation set begins to degrade, which helps prevent overfitting.

- Grid Search and Random Search: Techniques that explore hyperparameter combinations systematically or at random to find good settings for fine-tuning.
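To make grid search and random search concrete, here is a small sketch. The search space values are illustrative, and `toy_validation_loss` is a hypothetical stand-in: in a real setup it would fine-tune the model with the given configuration and return the loss measured on a validation set.

```python
import itertools
import random

# Assumed search space; the values are examples, not recommendations.
search_space = {
    "learning_rate": [1e-5, 5e-5, 1e-4],
    "batch_size": [8, 16, 32],
}


def grid_candidates(space):
    """Yield every combination of hyperparameter values (grid search)."""
    keys = list(space)
    for values in itertools.product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


def random_candidates(space, n, rng):
    """Yield n randomly sampled combinations (random search)."""
    for _ in range(n):
        yield {k: rng.choice(v) for k, v in space.items()}


def toy_validation_loss(config):
    """Hypothetical stand-in for 'fine-tune, then evaluate on validation data'."""
    return (abs(config["learning_rate"] - 5e-5) * 1e4
            + abs(config["batch_size"] - 16) / 16)


best = min(grid_candidates(search_space), key=toy_validation_loss)
print("best grid configuration:", best)

sampled = list(random_candidates(search_space, 4, random.Random(0)))
print("best of 4 random samples:", min(sampled, key=toy_validation_loss))
```

Grid search evaluates all nine combinations here, while random search evaluates only as many as you can afford, which is often the more practical choice when each evaluation means a full fine-tuning run.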

Evaluation Metrics

-

Quantitative Metrics

It is important to evaluate the fine-tuned Llama model objectively using several quantitative performance metrics. These include:

- Perplexity: Measures how well the model predicts the next token in a sequence. Lower perplexity is better.

- Accuracy: The percentage of predictions the model gets right. It is a simple metric for classification tasks.

- F1-Score: The harmonic mean of precision and recall. It balances the two when measuring performance on tasks such as information retrieval.

- BLEU: A metric that scores the quality of machine-generated text, particularly translations, by comparing it to a reference text.

- ROUGE: Recall-Oriented Understudy for Gisting Evaluation, a set of metrics for assessing the quality of summaries by comparing generated and reference summaries on shared n-grams.
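A few of these metrics are simple enough to compute by hand, which helps build intuition. The sketch below assumes you already have per-token log-probabilities from the model and counts of true positives, false positives, and false negatives. The `rouge1_recall` function is a simplified unigram-overlap version for illustration, not a replacement for an established ROUGE implementation.

```python
import math
from collections import Counter


def perplexity(token_log_probs):
    """Exponential of the average negative log-likelihood per token (natural log)."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall, computed from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def rouge1_recall(candidate, reference):
    """Fraction of reference unigrams that also appear in the candidate."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum(min(count, cand[word]) for word, count in ref.items())
    return overlap / sum(ref.values()) if ref else 0.0


# A model that assigns probability 0.25 to every token has a perplexity of about 4.
print(perplexity([math.log(0.25)] * 4))
print(f1_score(tp=8, fp=2, fn=2))
print(rouge1_recall("the cat sat", "the cat sat on the mat"))
```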

-

Qualitative Evaluation

Quantitative measures alone cannot fully capture performance on tasks that are creative or depend on human interaction. Human evaluation is more subjective and assesses qualities such as fluency, coherence, and relevance. This is particularly important in applications like content creation or AI for customer service, where the quality of the interaction may matter more than pure accuracy. Human feedback is also what allows the model to be aligned with the detailed expectations of end users.

Real-World Applications

-

Case Study 1: Customer Service Chatbots

Chatbots are increasingly used to handle customer queries. Here, fine-tuning gives Llama a sense of the particular language, tone, and context of customer service conversations, so that it can craft appropriate and helpful responses.

To fine-tune Llama for a customer service chatbot, you need a dataset of customer service interactions that includes common questions, issues, and responses, and that has been cleaned and preprocessed. The model is then fine-tuned on this data to learn how to answer various kinds of queries. By including domain-specific language and industry jargon in the training data, Llama can be tailored to specific business needs so that the chatbot’s answers are more precise for the given context. The model can also be trained to recognize different customer sentiments and respond accordingly, which improves the overall user experience.

-

Case Study 2: Content Generation

Llama can be fine-tuned to write many kinds of material, including articles, code, and creative writing. This can be extremely helpful for automating repetitive writing or for supporting a creative process.

To generate content, Llama is fine-tuned on a dataset of high-quality examples of the desired output. For example:

- Article Writing: Fine-tuning Llama on high-quality articles in a target domain, such as technology, health, or finance, can produce a model that generates fluent and informative pieces for that audience.

- Code Generation: Fine-tuned on code snippets and programming tutorials, Llama can be useful for generating code, offering suggestions, or auto-completing partial code.

- Creative Content: Training on creative texts, such as stories, poems, and marketing copy, enables Llama to generate text in the desired style and tone.

-

Case Study 3: Summarization

Summarization is another major application of Llama fine-tuning. The model condenses long passages of text into short summaries, which makes it much easier to extract information from voluminous documents.

Fine-tuning Llama for summarization usually involves training on paired data: long documents and their associated summaries. From this dataset, the model learns to identify the important information and reproduce it in condensed form. This can be highly beneficial for summarizing research papers, legal documents, news articles, and more. Fine-tuning Llama on domain-specific content improves the model’s ability to surface the key points of a text while staying coherent and accurate.
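Paired summarization data is often stored as one JSON record per line before training. The sketch below shows one possible layout. The prompt wording, the `prompt` and `completion` field names, and the sample pair are all assumptions for illustration; the format your training tool expects may differ.

```python
import json


def to_record(document, summary):
    """Turn a (document, summary) pair into a prompt/completion record."""
    prompt = f"Summarize the following text:\n\n{document}\n\nSummary:"
    return {"prompt": prompt, "completion": " " + summary}


# Hypothetical training pair; real data would come from your own corpus.
pairs = [
    (
        "The quarterly report shows revenue grew 12 percent, driven mainly by "
        "subscription renewals, while support costs stayed flat.",
        "Revenue rose 12 percent on renewals; support costs were unchanged.",
    ),
]

# One JSON object per line (JSONL) keeps large datasets easy to stream.
lines = [json.dumps(to_record(doc, summ)) for doc, summ in pairs]
print(lines[0])
```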

Challenges and Best Practices

-

Overfitting

Overfitting occurs when a model performs well on the training data but fails to generalize to new, unseen data. It is one of the main challenges in fine-tuning, especially with smaller datasets. Ways to prevent overfitting during Llama fine-tuning include:

- Use a Validation Set: Periodically evaluate the model on a held-out validation set to check whether it generalizes well and to get early warning of overfitting.

- Early Stopping: Stop the training process once the model’s performance on the validation set starts to degrade.

- Regularization Techniques: Dropout and weight decay are regularization techniques that discourage overly complex models in favor of simpler solutions that generalize better.

- Data Augmentation: As explained above, augmentation increases the amount and variety of training data, which makes the model less likely to overfit.
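The validation set and early stopping ideas above can be sketched as follows. The `patience` parameter (how many non-improving epochs to tolerate) and the loss values are assumptions chosen for the example; in practice the losses would come from evaluating the model after each epoch.

```python
import random


def train_val_split(examples, val_fraction, rng):
    """Shuffle a copy of the data and hold out a fraction for validation."""
    shuffled = examples[:]
    rng.shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def early_stopping(val_losses, patience=2):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses."""
    best_loss = float("inf")
    best_epoch = 0
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, bad_epochs = loss, epoch, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


train, val = train_val_split(list(range(10)), 0.2, random.Random(0))
print(len(train), "training examples,", len(val), "validation examples")

# Made-up losses: validation loss improves, then starts to rise.
stop, best = early_stopping([1.20, 0.95, 0.90, 0.93, 0.97, 1.05], patience=2)
print("stop at epoch", stop, "and keep the checkpoint from epoch", best)
```

With these example losses, training stops at epoch 4 and the checkpoint from epoch 2 is the one worth keeping, since that is where validation loss was lowest.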

-

Bias and Fairness

AI systems can produce biased results that reflect and perpetuate human biases within society, including historical and current social inequality. Biases in language models can lead to unfair or discriminatory outputs. This is a serious issue, especially when deploying models in sensitive applications.

There are several ways to reduce bias in Llama fine-tuning:

- Diversification of Training Data: Diversifying the content, perspectives, and contexts in the training data reduces the probability of introducing bias.

- Bias Detection and Correction: Biased outputs can be detected and reduced with techniques such as adversarial testing or bias-mitigation algorithms.

- Explainability and Accountability: End users should be informed about the model’s limitations and the biases it may exhibit. This information needs to be updated periodically as new biases are discovered.

- Human-in-the-Loop: Include human judgment at key decision points where bias may arise, so that humans can intervene when outputs are biased.
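One simple way to probe for bias, in the spirit of the testing mentioned above, is to send the model prompts that differ only in a single attribute and compare the responses. The sketch below only builds such prompt sets; the template, names, and roles are hypothetical, and sending the prompts to a model and judging the outputs (ideally with human review) is left out.

```python
# Hypothetical template and attribute values, for illustration only.
TEMPLATE = "Write a short reference letter for {name}, who works as a {role}."
NAMES = ["Emily", "Mohammed", "Wei", "Carlos"]
ROLES = ["nurse", "software engineer"]


def counterfactual_prompts(template, names, roles):
    """Group prompts by role so that only the name varies within a group."""
    return {
        role: [template.format(name=name, role=role) for name in names]
        for role in roles
    }


groups = counterfactual_prompts(TEMPLATE, NAMES, ROLES)
for role, prompts in groups.items():
    print(role)
    for prompt in prompts:
        print("  ", prompt)
```

If the responses within a group differ systematically in tone or content, that is a signal worth investigating further.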

-

Ethical Considerations

For large language models such as Llama, the ethical use of their outputs is a fundamental consideration, with effects that spill over into society.

Ethical considerations include:

- The Abuse of Technology: A fine-tuned Llama can be misused to generate misinformation, deepfakes, or offensive material. Developers have to implement checks and balances against such misuse.

- Privacy: Training the model on sensitive or proprietary data raises privacy concerns. Make sure to sanitize the data and comply with data protection regulations.

- Job Displacement: Automation by language models may displace jobs in certain areas. It is important to consider the wider societal consequences and take measures to soften harmful effects, such as re-skilling workers or creating new AI-related job opportunities.
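The privacy point above mentions sanitizing data before training. As a minimal sketch, the snippet below masks email addresses and phone-like numbers with regular expressions. The patterns are deliberately simple assumptions and will miss many forms of personal data, so real projects typically combine dedicated tooling with manual review.

```python
import re

# Simplified, assumed patterns; they are illustrative, not exhaustive.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(redact("Contact jane.doe@example.com or +1 (555) 010-9999."))
```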

-

Future Outlook

NLP is developing rapidly, and language models such as Llama are at the forefront. As these models gain power and sophistication, their uses will open new avenues for creativity in industries around the world.

Future developments with Llama fine-tuning and related models may include:

- Larger, More Powerful Models: As research into model architecture and training techniques continues, even larger language models will be built that understand and generate text with greater precision and expressiveness.

- Cross-Lingual and Multimodal Capabilities: Future versions of Llama may be increasingly cross-lingual, understanding and generating text across many languages, and may combine modalities such as text, images, and audio.

- Fine-tuned AI Assistants: As fine-tuning improves, it is easy to envision highly personalized AI assistants that are tuned to individual user preferences and needs.

- Ethical AI Development: Increased awareness within the AI community of the ethical challenges posed by language models should lead to a greater emphasis on developing fair, transparent, and accountable AI systems.

By staying informed and actively participating in this work, developers and researchers can continue to advance models like Llama while ensuring that their applications serve society.

Conclusion

In this blog, we walked through Llama fine-tuning, from preparing the data to selecting the right fine-tuning techniques and evaluating model performance. We also discussed how customizing Llama benefits customer service chatbots, content generation, summarization, and other tasks. Finally, we looked at some challenges, namely overfitting, bias, and ethical considerations, and how to address them.

Fine-tuning is the process of taking a pre-trained model and making it perform well on a particular task using a small, specialized dataset. It involves gathering, cleaning, and augmenting data, choosing a fine-tuning strategy, and selecting hyperparameters. To ensure that the resulting model meets its requirements, it should be tested against both quantitative and qualitative metrics, and developers should be prepared to confront overfitting and bias. Deploying a fine-tuned Llama responsibly also means taking the ethical implications into account.
